
ECE662: Statistical Pattern Recognition and Decision Making Processes

Spring 2008, Prof. Boutin

Collectively created by the students in the class

Lecture 6 Lecture notes


LECTURE THEME: Discriminant Functions

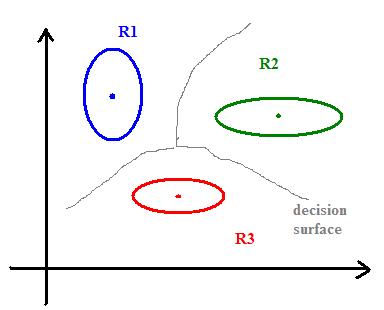

To separate several classes, we can draw the "skeleton" (Blum) of the shape defined by the mean vectors:

The skeleton is the set of points whose distance to the set $ \{\mu_1, ..., \mu_k\} $ is achieved by at least two different $ \mu_{i} $'s. That is, with $ dist(x,set)=\min_i \{dist(x,\mu_i)\} $, we require $ \exists i_1 \neq i_2 $ such that $ dist(x,set)=dist(x,\mu_{i_1}) =dist(x,\mu_{i_2}) $.

The skeleton is a decision boundary defining regions (chambers) $ R_i $ where we should decide $ w_i $.
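The chamber rule can be sketched in a few lines of pure Python. This is a minimal illustration, not the lecture's own code: the helper names `dist`, `nearest_mean` and `on_skeleton`, and the tie tolerance `tol`, are hypothetical. A point belongs to the chamber of its nearest mean, and it lies on the skeleton when the minimum distance is attained by two or more means.

```python
import math


def dist(x, mu):
    """Euclidean distance between two points given as sequences of floats."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, mu)))


def nearest_mean(x, means):
    """Index i of the closest mean, i.e. the chamber R_i where we decide w_i."""
    return min(range(len(means)), key=lambda i: dist(x, means[i]))


def on_skeleton(x, means, tol=1e-9):
    """True if the minimum distance from x to the set of means is attained
    by at least two different means (equal up to the tolerance tol)."""
    d = sorted(dist(x, mu) for mu in means)
    return len(d) >= 2 and d[1] - d[0] <= tol


means = [(0, 0), (4, 0), (0, 4)]
print(nearest_mean((1, 0.5), means))  # 0
print(on_skeleton((2, 1), means))     # True
```

For these means, the point $ (2,1) $ is at distance $ \sqrt{5} $ from both $ (0,0) $ and $ (4,0) $ and farther from $ (0,4) $, so it lies on the skeleton.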

What is the equation of these hyperplanes?

Recall the hyperplane equation: $ \{ \vec{x} | \vec{n} \cdot \vec{x} = const \} $

$ \vec{n} $ is a normal vector to the plane: if $ \vec{x_1} $ and $ \vec{x_2} $ are both in this plane, then

$ \Longrightarrow \vec{n} \cdot \vec{x_1} = const, \vec{n} \cdot \vec{x_2} = const $

$ \Longrightarrow \vec{n} \cdot (\vec{x_1} - \vec{x_2}) = const - const = 0 $

$ \therefore \vec{n} \bot ( \vec{x_1} - \vec{x_2}) $
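A small worked example in $ \Re^{2} $ (numbers chosen only for illustration): the line $ x + 2y = 4 $ has normal $ \vec{n} = (1,2)^{\top} $, and two of its points are $ (4,0) $ and $ (0,2) $.

```latex
\vec{n}\cdot(4,0)^{\top} = 4, \qquad \vec{n}\cdot(0,2)^{\top} = 4 \\
\vec{n}\cdot\left((4,0)^{\top} - (0,2)^{\top}\right) = (1)(4) + (2)(-2) = 0
```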

Any linear structure can be written as

$ \sum_{i=1}^{n} c_i x_i + const = 0 $

Ex. of planes in $ \Re^{2} $

Example: for two classes $ w_1 $, $ w_2 $, the separating hyperplane is defined by

$ \{ \vec{x} | g_1(\vec{x}) - g_2(\vec{x}) = 0 \} $

where, for Gaussian classes with $ \Sigma_i = \sigma^2 I $, $ g_i(\vec{x})=-\frac{1}{2\sigma^2} \|\vec{x}-\mu_i\|_{L_2}^2+ \ln P(w_i) $

$ = -\frac{1}{2\sigma^2} ((\vec{x}-\mu_i)^{\top}(\vec{x}-\mu_i)) + \ln P(w_i) $

$ = -\frac{1}{2\sigma^2} (\vec{x}^{\top}\vec{x} - \vec{x}^{\top}\mu_i -\mu_i^{\top}\vec{x} + \mu_i^{\top}\mu_i ) + \ln P(w_i) $

but $ \vec{x}^{\top}\vec{\mu_i} $ is a scalar, so $ \left( \vec{x}^{\top} \vec{\mu_i}\right)^{\top} = \vec{\mu_i}^{\top}\vec{x} = \vec{x}^{\top}\vec{\mu_i} $

$ \Longrightarrow g_i(\vec{x}) = -\frac{1}{2\sigma^2} \|\vec{x}\|^2 + \frac{1}{\sigma^2} \vec{x} \cdot \vec{\mu_i} - \frac{\mu_i^{\top}\mu_i}{2\sigma^2} + \ln P(w_i) $

The first term is independent of $ i $, so it can be dropped from $ g_i\left( \vec{x}\right) $ without changing the decision:

$ \Longrightarrow g_i(\vec{x}) = \frac{1}{\sigma^2} \vec{x} \cdot \vec{\mu_i} - \frac{\vec{\mu_i} \cdot \vec{\mu_i}}{2 \sigma^2} + \ln P(w_i) $

which is a degree one polynomial in $ \vec{x} $.
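As a numerical check of this derivation, the following sketch (pure Python; the helper names are hypothetical) evaluates both the full discriminant $ -\frac{1}{2\sigma^2}\|\vec{x}-\vec{\mu_i}\|^2 + \ln P(w_i) $ and the reduced linear one. Their difference is $ -\frac{1}{2\sigma^2}\|\vec{x}\|^2 $ for every class, which is why dropping it does not change which $ g_i $ is largest.

```python
import math


def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def g_quadratic(x, mu, sigma, prior):
    """g_i(x) = -||x - mu_i||^2 / (2 sigma^2) + ln P(w_i)."""
    diff = [a - b for a, b in zip(x, mu)]
    return -dot(diff, diff) / (2 * sigma ** 2) + math.log(prior)


def g_linear(x, mu, sigma, prior):
    """Same discriminant with the class-independent -||x||^2 / (2 sigma^2) dropped:
    g_i(x) = x . mu_i / sigma^2 - mu_i . mu_i / (2 sigma^2) + ln P(w_i)."""
    return dot(x, mu) / sigma ** 2 - dot(mu, mu) / (2 * sigma ** 2) + math.log(prior)


def classify(x, means, sigma, priors):
    """Index of the class whose linear discriminant is largest."""
    scores = [g_linear(x, mu, sigma, p) for mu, p in zip(means, priors)]
    return max(range(len(scores)), key=scores.__getitem__)
```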

A classifier that uses linear discriminant functions is called a "linear machine".

The hyperplane between two classes is defined by

$ g_1(\vec{x}) - g_2(\vec{x}) = 0 $

$ \Leftrightarrow \frac{1}{\sigma^2} \vec{x}^{\top}\mu_1 - \frac{\mu_1^{\top}\mu_1}{2\sigma^2} + \ln P(w_1) - \frac{1}{\sigma^2} \vec{x}^{\top}\mu_2 + \frac{\mu_2^{\top}\mu_2}{2\sigma^2} - \ln P(w_2) = 0 $

$ \Leftrightarrow \frac{1}{\sigma^2} \vec{x} \cdot (\vec{\mu_1} - \vec{\mu_2}) =\frac{ \|\vec{\mu_1} \|^2}{2\sigma^2} - \frac{ \|\vec{\mu_2} \|^2}{2\sigma^2} + \ln P(w_2) -\ln P(w_1) $
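This boundary has the form $ \vec{w}\cdot\vec{x} = b $ with $ \vec{w} = (\vec{\mu_1}-\vec{\mu_2})/\sigma^2 $. A minimal sketch, assuming the same isotropic variance $ \sigma^2 $ for both classes (the function names `hyperplane_isotropic` and `decide` are hypothetical):

```python
import math


def hyperplane_isotropic(mu1, mu2, sigma, p1, p2):
    """Return (w, b) so that the boundary is {x : w . x = b}, with
    w = (mu1 - mu2) / sigma^2 and
    b = (||mu1||^2 - ||mu2||^2) / (2 sigma^2) + ln P(w2) - ln P(w1)."""
    w = [(a - c) / sigma ** 2 for a, c in zip(mu1, mu2)]
    n1 = sum(a * a for a in mu1)
    n2 = sum(c * c for c in mu2)
    b = (n1 - n2) / (2 * sigma ** 2) + math.log(p2) - math.log(p1)
    return w, b


def decide(x, w, b):
    """Decide w1 (return 1) when g1(x) - g2(x) = w . x - b > 0, else w2 (return 2)."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) - b > 0 else 2
```

With $ \vec{\mu_1} = (0,0) $, $ \vec{\mu_2} = (4,0) $, $ \sigma = 1 $ and equal priors, this gives $ \vec{w} = (-4, 0) $ and $ b = -8 $, i.e. the line $ x = 2 $. Raising $ P(w_1) $ to 0.8 moves the boundary to $ x = 2 + \frac{\ln 4}{4} \approx 2.35 $, away from the more likely class.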

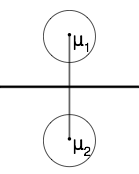

Case 1: When $ P(w_1)=P(w_2) $

In this case the hyperplane (black line) passes through the midpoint of the segment joining the two means (gray line).
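To see why, substitute the midpoint $ \vec{x_0} = \frac{1}{2}(\vec{\mu_1}+\vec{\mu_2}) $ into the boundary equation $ \frac{1}{\sigma^2} \vec{x} \cdot (\vec{\mu_1} - \vec{\mu_2}) =\frac{ \|\vec{\mu_1} \|^2}{2\sigma^2} - \frac{ \|\vec{\mu_2} \|^2}{2\sigma^2} + \ln P(w_2) -\ln P(w_1) $. With equal priors the $ \ln P $ terms cancel, and the left-hand side reduces to the right-hand side:

```latex
\frac{1}{\sigma^2}\,\frac{\vec{\mu_1}+\vec{\mu_2}}{2}\cdot(\vec{\mu_1}-\vec{\mu_2})
 = \frac{1}{2\sigma^2}\left(\|\vec{\mu_1}\|^2 - \vec{\mu_1}\cdot\vec{\mu_2} + \vec{\mu_2}\cdot\vec{\mu_1} - \|\vec{\mu_2}\|^2\right)
 = \frac{\|\vec{\mu_1}\|^2}{2\sigma^2} - \frac{\|\vec{\mu_2}\|^2}{2\sigma^2}
```

Since the normal vector is $ \vec{\mu_1}-\vec{\mu_2} $, the hyperplane is also perpendicular to the segment joining the means.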

Case 2: $ \Sigma_i = \Sigma $ for all $ i $:

Recall: we can take $ g_i(\vec{x}) = -\frac{1}{2}\left( \vec{x}-\vec{\mu_i} \right)^{\top} \Sigma^{-1}\left(\vec{x}-\vec{\mu_i}\right) - \frac{n}{2}\ln 2\pi - \frac{1}{2} \ln |\Sigma| + \ln P(w_i) $

but since $ \Sigma_i = \Sigma $, the terms $ - \frac{n}{2}\ln 2\pi $ and $ - \frac{1}{2} \ln |\Sigma| $ are independent of $ i $.

Dropping these terms from $ g_i(\vec{x}) $, the new $ g_i(\vec{x}) $ becomes

$ \Longrightarrow g_i\left( \vec{x} \right) = - \frac{1}{2} \left( \vec{x} - \vec{\mu_i} \right)^{\top} \Sigma^{-1} \left( \vec{x} - \vec{\mu_i} \right) + \ln{P(w_i)} $

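So, if all $ P\left( w_i \right) $'s are equal, assign $ \vec{x} $ to the class with the "nearest" mean, where distance is the squared Mahalanobis distance $ (\vec{x}-\vec{\mu_i})^{\top}\Sigma^{-1}(\vec{x}-\vec{\mu_i}) $.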
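The 2D sketch below (pure Python; all helper names are hypothetical) computes the squared Mahalanobis distance directly and also by whitening: with a Cholesky factor $ \Sigma = LL^{\top} $, the map $ \vec{y} = L^{-1}\vec{x} $ turns Mahalanobis distance into ordinary Euclidean distance. The Cholesky factor is used here instead of $ \Sigma^{-1/2} $ only because it is easy to compute by hand; the two differ by a rotation, so the distances agree.

```python
import math


def inv2(S):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = S
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def mahalanobis_sq(x, mu, S_inv):
    """(x - mu)^T S_inv (x - mu) for 2D vectors."""
    d = [x[0] - mu[0], x[1] - mu[1]]
    return sum(d[i] * S_inv[i][j] * d[j] for i in range(2) for j in range(2))


def cholesky2(S):
    """Lower-triangular L with S = L L^T, for a 2x2 symmetric positive-definite S."""
    l11 = math.sqrt(S[0][0])
    l21 = S[1][0] / l11
    l22 = math.sqrt(S[1][1] - l21 ** 2)
    return [[l11, 0.0], [l21, l22]]


def whiten(x, L):
    """Solve L y = x by forward substitution, i.e. y = L^{-1} x."""
    y0 = x[0] / L[0][0]
    y1 = (x[1] - L[1][0] * y0) / L[1][1]
    return [y0, y1]


def nearest_mean_mahalanobis(x, means, S):
    """Index of the mean with the smallest squared Mahalanobis distance to x."""
    S_inv = inv2(S)
    return min(range(len(means)), key=lambda i: mahalanobis_sq(x, means[i], S_inv))
```

For example, with $ \Sigma = \mathrm{diag}(4, 1) $ the origin is closer in Euclidean distance to $ (0,2) $ than to $ (3,0) $, yet its squared Mahalanobis distances are $ 9/4 $ and $ 4 $ respectively, so it is assigned to the mean $ (3,0) $.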

Rewriting $ g_i(\vec{x}) $,

$ g_i(\vec{x}) = - \frac{1}{2} ( \vec{x}^{\top} \Sigma^{-1}\vec{x} - 2 \vec{\mu_i}^{\top} \Sigma^{-1}\vec{x} + \vec{\mu_i}^{\top}\Sigma^{-1}\vec{\mu_i}) + \ln{P(w_i)} $

Here $ \vec{x}^{\top} \Sigma^{-1}\vec{x} $ is independent of $ i $, so this term can also be dropped from $ g_i(\vec{x}) $:

$ \Longrightarrow g_i(\vec{x}) = \vec{\mu_i}^{\top} \Sigma^{-1}\vec{x} - \frac{1}{2} \vec{\mu_i}^{\top}\Sigma^{-1}\vec{\mu_i} + \ln{P(w_i)} $

Again, this is a linear function of $ \vec{x} $.
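Written as $ g_i(\vec{x}) = \vec{w_i}^{\top}\vec{x} + w_{i0} $, the last expression gives $ \vec{w_i} = \Sigma^{-1}\vec{\mu_i} $ and $ w_{i0} = -\frac{1}{2}\vec{\mu_i}^{\top}\Sigma^{-1}\vec{\mu_i} + \ln P(w_i) $ (using the symmetry of $ \Sigma^{-1} $). The 2D sketch below (hypothetical helper names) checks numerically that the quadratic and linear forms differ only by the class-independent term $ -\frac{1}{2}\vec{x}^{\top}\Sigma^{-1}\vec{x} $.

```python
import math


def inv2(S):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = S
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(M, v):
    """2x2 matrix times 2D vector."""
    return [M[0][0] * v[0] + M[0][1] * v[1], M[1][0] * v[0] + M[1][1] * v[1]]


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def linear_params(mu, S_inv, prior):
    """w_i = Sigma^{-1} mu_i and w_i0 = -1/2 mu_i^T Sigma^{-1} mu_i + ln P(w_i)."""
    w = matvec(S_inv, mu)
    return w, -0.5 * dot(mu, w) + math.log(prior)


def g_linear(x, mu, S_inv, prior):
    w, w0 = linear_params(mu, S_inv, prior)
    return dot(w, x) + w0


def g_quadratic(x, mu, S_inv, prior):
    """-1/2 (x - mu_i)^T Sigma^{-1} (x - mu_i) + ln P(w_i)."""
    d = [x[0] - mu[0], x[1] - mu[1]]
    return -0.5 * dot(d, matvec(S_inv, d)) + math.log(prior)
```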

The equation of the hyperplane $ g_1(\vec{x}) - g_2(\vec{x}) = 0 $: $ (\vec{\mu_1}-\vec{\mu_2})^{\top}\Sigma^{-1}\vec{x} = \frac{1}{2}\vec{\mu_1}^{\top}\Sigma^{-1}\vec{\mu_1} - \frac{1}{2} \vec{\mu_2}^{\top}\Sigma^{-1}\vec{\mu_2} + \ln P(w_2) - \ln P(w_1) $
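Setting $ g_1(\vec{x}) = g_2(\vec{x}) $ with the linear $ g_i $ above and rearranging gives the equivalent point-normal form $ \vec{w}^{\top}(\vec{x}-\vec{x_0}) = 0 $, with $ \vec{w} = \Sigma^{-1}(\vec{\mu_1}-\vec{\mu_2}) $ and $ \vec{x_0} = \frac{1}{2}(\vec{\mu_1}+\vec{\mu_2}) - \frac{\ln(P(w_1)/P(w_2))}{(\vec{\mu_1}-\vec{\mu_2})^{\top}\Sigma^{-1}(\vec{\mu_1}-\vec{\mu_2})}(\vec{\mu_1}-\vec{\mu_2}) $. The 2D sketch below (pure Python; `inv2` and `boundary_point` are hypothetical helpers) computes $ \vec{x_0} $, the point where the boundary crosses the line joining the means.

```python
import math


def inv2(S):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = S
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def boundary_point(mu1, mu2, S, p1, p2):
    """x0 = (mu1 + mu2)/2 - ln(p1/p2) / (d^T S^{-1} d) * d, with d = mu1 - mu2."""
    S_inv = inv2(S)
    d = [mu1[0] - mu2[0], mu1[1] - mu2[1]]
    Sd = [S_inv[0][0] * d[0] + S_inv[0][1] * d[1],
          S_inv[1][0] * d[0] + S_inv[1][1] * d[1]]
    m2 = d[0] * Sd[0] + d[1] * Sd[1]  # squared Mahalanobis distance between the means
    t = math.log(p1 / p2) / m2
    return [(mu1[0] + mu2[0]) / 2 - t * d[0], (mu1[1] + mu2[1]) / 2 - t * d[1]]
```

With $ \vec{\mu_1}=(0,0) $, $ \vec{\mu_2}=(4,0) $ and $ \Sigma = I $, equal priors give $ \vec{x_0} = (2,0) $; with $ P(w_1) = 0.8 $ the point moves to about $ (2.35, 0) $, away from the more likely class $ w_1 $.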

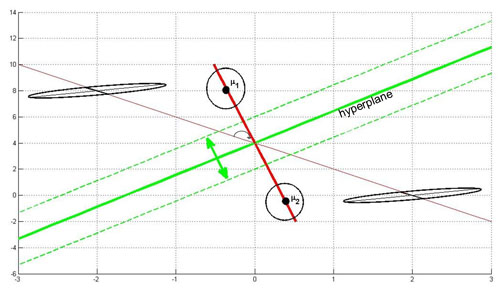

In sum, whatever the covariance structure is, as long as it is the same for all classes, the discriminant functions are linear: the quadratic term $ \vec{x}^{\top}\Sigma^{-1}\vec{x} $ is common to all classes and drops out.

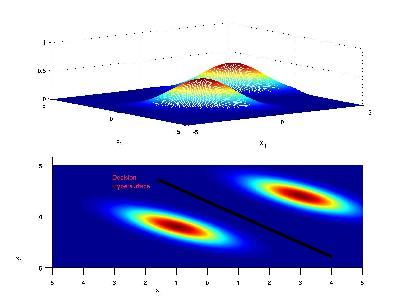

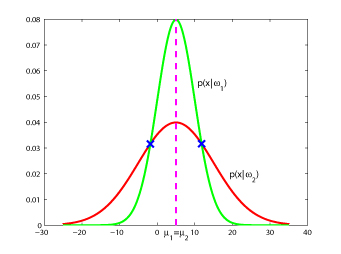

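Below is an illustration of this case. If the ellipses of the two classes have the same principal-axis lengths and directions, you can transform both simultaneously with the same linear change of coordinates (for example $ \vec{y} = \Sigma^{-1/2}\vec{x} $), which turns them into circles and reduces the problem to the isotropic setting of Case 1.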

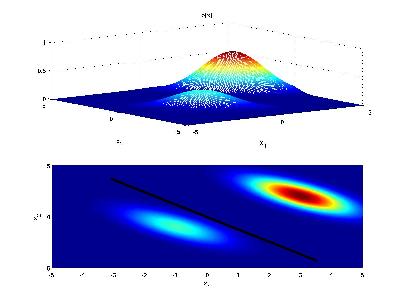

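The hyperplane (green line) is drawn perpendicular to the red line connecting the two means. It moves along the red line depending on the values of $ P(w_1) $ and $ P(w_2) $; if $ P(w_1)=P(w_2) $, it passes through the midpoint between the means. (Strictly, the hyperplane is perpendicular to the line joining the means when $ \Sigma $ is a multiple of the identity; for a general shared $ \Sigma $ its normal vector is $ \Sigma^{-1}(\vec{\mu_1}-\vec{\mu_2}) $, so it is generally not perpendicular to that line.)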

Here's an animated version of the above figure:

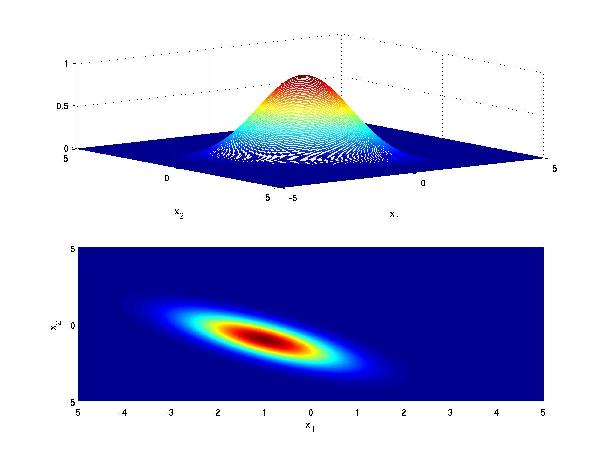

Another visualization of Case 2: consider class 1, whose class-conditional density on a 2D feature vector is a multivariate Gaussian.

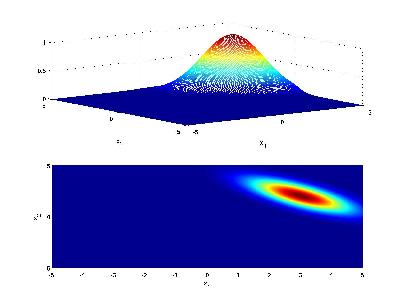

Now consider class 2, with a similar Gaussian class-conditional density (the same covariance matrix) but a different mean.

If the priors of the two classes are equal (0.5 each), the decision hypersurface passes midway between the two means, with an orientation set by the shared covariance matrix, which also gives both Gaussian modes the same elliptical shape.

If the priors are unequal, the decision hypersurface keeps the same orientation but shifts away from the more likely class, enlarging that class's decision region. This biases the classifier in favor of the more likely class.

A video to visualize the decision hypersurface with changes to the Gaussian parameters is shown on the Bayes Decision Rule Video page.

Case 3: When $ \Sigma_i $ is arbitrary (each class has its own covariance matrix)

We can take

$ g_i(\vec{x}) = - \frac{1}{2} ( \vec{x} - \vec{\mu_i})^{\top}\Sigma_i^{-1}(\vec{x}-\vec{\mu_i})-\frac{1}{2} \ln |\Sigma_i|+ \ln P(w_i) $

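The decision surface between $ w_1 $ and $ w_2 $, $ \{ \vec{x} \mid g_1(\vec{x}) - g_2(\vec{x}) = 0 \} $, is given by a degree-2 polynomial in $ \vec{x} $, because the quadratic terms $ -\frac{1}{2}\vec{x}^{\top}\Sigma_i^{-1}\vec{x} $ generally no longer cancel.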
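The degree-2 structure is visible when $ g_i $ is expanded as $ \vec{x}^{\top}W_i\vec{x} + \vec{w_i}^{\top}\vec{x} + w_{i0} $ with $ W_i = -\frac{1}{2}\Sigma_i^{-1} $, $ \vec{w_i} = \Sigma_i^{-1}\vec{\mu_i} $ and $ w_{i0} = -\frac{1}{2}\vec{\mu_i}^{\top}\Sigma_i^{-1}\vec{\mu_i} - \frac{1}{2}\ln|\Sigma_i| + \ln P(w_i) $, where $ |\Sigma_i| $ is the determinant. A 2D sketch (hypothetical helper names) checking that the expanded form matches the direct one:

```python
import math


def inv2(S):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = S
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def det2(S):
    return S[0][0] * S[1][1] - S[0][1] * S[1][0]


def quad_form(v, M):
    """v^T M v for a 2D vector v and 2x2 matrix M."""
    return sum(v[i] * M[i][j] * v[j] for i in range(2) for j in range(2))


def g_direct(x, mu, S, prior):
    """-1/2 (x - mu)^T S^{-1} (x - mu) - 1/2 ln|S| + ln P(w_i)."""
    d = [x[0] - mu[0], x[1] - mu[1]]
    return -0.5 * quad_form(d, inv2(S)) - 0.5 * math.log(det2(S)) + math.log(prior)


def g_expanded(x, mu, S, prior):
    """x^T W x + w^T x + w0 with W = -1/2 S^{-1}, w = S^{-1} mu and
    w0 = -1/2 mu^T S^{-1} mu - 1/2 ln|S| + ln P(w_i)."""
    S_inv = inv2(S)
    W = [[-0.5 * S_inv[i][j] for j in range(2)] for i in range(2)]
    w = [S_inv[0][0] * mu[0] + S_inv[0][1] * mu[1],
         S_inv[1][0] * mu[0] + S_inv[1][1] * mu[1]]
    w0 = -0.5 * quad_form(mu, S_inv) - 0.5 * math.log(det2(S)) + math.log(prior)
    return quad_form(x, W) + w[0] * x[0] + w[1] * x[1] + w0
```

When $ \Sigma_1 \neq \Sigma_2 $, the matrices $ W_1 $ and $ W_2 $ differ, so $ g_1 - g_2 $ keeps a quadratic term.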

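Note: decision boundaries and decision regions need not be connected. For example, in the lecture's 1D example with $ P(w_1)=P(w_2) $, the decision boundary consists of two disconnected points, $ x=-5 $ and $ x=15 $:

Decide $ w_1 $ when $ -5<x<15 $.

Decide $ w_2 $ when $ x<-5 $ or $ x>15 $.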
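In 1D, $ g_1(x) - g_2(x) = ax^2 + bx + c $, so there can be up to two boundary points. The sketch below solves this quadratic for two 1D Gaussian classes, using $ -\frac{1}{2}\ln|\Sigma_i| = -\ln s_i $. The parameters in the usage note are hypothetical (they are not the ones behind the lecture's $ -5 $ and $ 15 $), and the function names are illustrative.

```python
import math


def boundary_points_1d(mu1, s1, p1, mu2, s2, p2):
    """Real roots of g1(x) - g2(x) = a x^2 + b x + c, where
    g_i(x) = -(x - mu_i)^2 / (2 s_i^2) - ln s_i + ln P(w_i)."""
    a = -1 / (2 * s1 ** 2) + 1 / (2 * s2 ** 2)
    b = mu1 / s1 ** 2 - mu2 / s2 ** 2
    c = (-mu1 ** 2 / (2 * s1 ** 2) + mu2 ** 2 / (2 * s2 ** 2)
         - math.log(s1) + math.log(s2) + math.log(p1) - math.log(p2))
    if a == 0:  # equal variances: linear case, at most one boundary point
        return [] if b == 0 else [-c / b]
    disc = b * b - 4 * a * c
    if disc < 0:
        return []  # no real boundary: one class wins everywhere
    r = math.sqrt(disc)
    return sorted([(-b - r) / (2 * a), (-b + r) / (2 * a)])


def decide_1d(x, mu1, s1, p1, mu2, s2, p2):
    """Return 1 if g1(x) > g2(x), else 2."""
    g1 = -(x - mu1) ** 2 / (2 * s1 ** 2) - math.log(s1) + math.log(p1)
    g2 = -(x - mu2) ** 2 / (2 * s2 ** 2) - math.log(s2) + math.log(p2)
    return 1 if g1 > g2 else 2
```

For example, with $ \mu_1 = \mu_2 = 0 $, $ s_1 = 1 $, $ s_2 = 2 $ and equal priors, the boundary points are $ x = \pm\sqrt{\ln 2 / 0.375} \approx \pm 1.36 $: the narrower class $ w_1 $ wins between them and $ w_2 $ wins on both sides, the same disconnected pattern as above.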

For the different cases and their figures, see pages 42 and 43 of DHS (Duda, Hart and Stork, Pattern Classification).