
Revision as of 19:45, 13 May 2014

Logistic regression

A slecture by ECE student Borui Chen

Partly based on the ECE662 Spring 2014 lecture material of Prof. Mireille Boutin.


Introduction

In machine learning, classification is one of the central problems. A linear classifier is an algorithm that makes its classification decision for a new test data point based on a linear combination of the features.
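As a minimal sketch of what deciding "based on a linear combination of the features" means, the hypothetical function `linear_classify` below scores a point with weights `weights` and an offset `bias` and thresholds the score at zero. The names and the numbers in the usage lines are assumptions for illustration, not part of this lecture.

```python
def linear_classify(weights, bias, features):
    """Assign class 1 or 0 from the sign of a linear combination of the features.

    weights, bias: hypothetical, already-learned parameters (illustration only).
    features: the feature values of one test point.
    """
    if len(weights) != len(features):
        raise ValueError("weights and features must have the same length")
    score = bias + sum(w * f for w, f in zip(weights, features))
    # Points exactly on the boundary (score == 0) are assigned to class 1 here.
    return 1 if score >= 0 else 0


# Usage with made-up numbers: the boundary is the line f1 - f2 = 0.
print(linear_classify([1.0, -1.0], 0.0, [2.0, 1.0]))  # score  1.0 -> class 1
print(linear_classify([1.0, -1.0], 0.0, [1.0, 2.0]))  # score -1.0 -> class 0
```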

There are two classes of linear classifiers: generative models and discriminative models.

- A generative model describes the joint distribution of the data and the class.
  - Examples are the naive Bayes classifier and linear discriminant analysis.
- A discriminative model makes no assumption about the joint distribution of the data. Instead, it takes the data as given and targets the conditional probability $ P(\text{class} \mid \text{data}) $, or the decision boundary itself, directly.
  - Examples are logistic regression, the perceptron, and the support vector machine.
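To make the contrast concrete, here are the standard textbook forms in this lecture's notation, with $ y $ the class and $ x $ the data. A generative model specifies $ p(x \mid y) $ and $ P(y) $ and recovers the posterior through Bayes' rule, while logistic regression writes the posterior down directly:

$$ P(y \mid x) = \frac{p(x \mid y)\,P(y)}{\sum_{y'} p(x \mid y')\,P(y')} \qquad \text{(generative)} $$

$$ P(y = 1 \mid x) = \frac{1}{1+e^{-\beta^T x}} \qquad \text{(discriminative: logistic regression)} $$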

Intuition and derivation of Logistic Regression

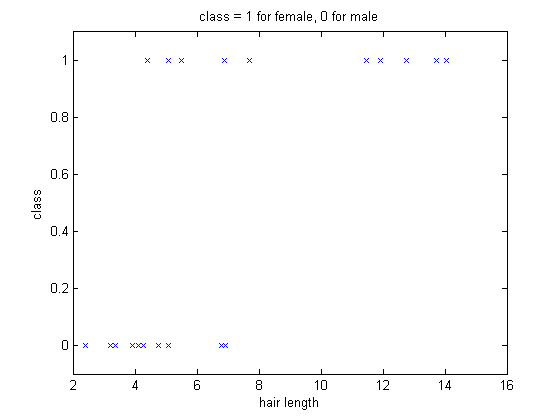

Consider a simple classification problem. The goal is to tell whether a person is male or female base on one feature: hair length. The data is given as $ (x_i,y_i) $ where i is the index number of the training set, and $ x_i $ is hair length in centimeters and $ y_i=1 $ indicates the person is male and 0 if female. Assume women has longer hair length the distribution of training data will look like this:

Clearly, if a person's hair is relatively long, being female is more likely: a new person with a hair length of 10 cm, for example, is more likely to be female. However, we have no rule for how long is long enough to say a person is female, so we introduce a probability.

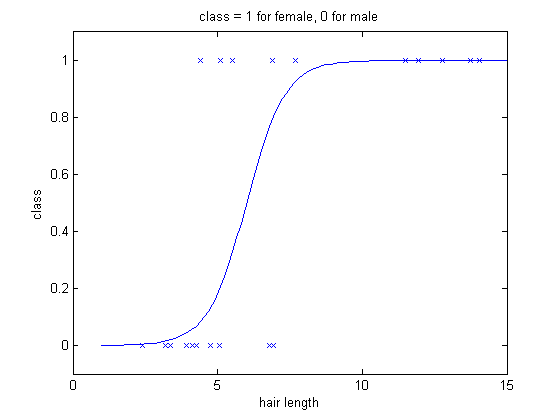

The intuition of logistic regression, in this example, is to assign a continuous probability to every possible value of hair length so that for longer hair length the probability of being a female is close to 1 and for shorter hair length the probability of being a female is close to 0. It is done by taking the linear combination of the feature and a constant and feeding it into a logistic function:

Then the logistic function of $ x_i $ would be:

After some fitting optimization algorithm, the curve looks like the following:
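A minimal Python sketch of the linear combination and the logistic function for a single feature. The parameter values $ \beta_0=-9 $ and $ \beta_1=1 $ in the usage lines are made up for illustration; they are not fitted to the lecture's data. The two-branch form of `logistic` is an implementation choice that avoids overflow in `math.exp` for large $ |a| $; mathematically it is the same $ L(a) $.

```python
import math


def linear_score(beta0, beta1, x):
    """a_i = beta_0 + beta_1 * x_i for a single feature x_i."""
    return beta0 + beta1 * x


def logistic(a):
    """L(a) = 1 / (1 + e^(-a)), split into two branches so exp() never overflows."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    z = math.exp(a)  # a < 0, so 0 < z < 1
    return z / (1.0 + z)


# Hypothetical parameters: the curve crosses 0.5 at x = 9 cm.
beta0, beta1 = -9.0, 1.0
for hair_length in (2.0, 9.0, 16.0):
    p = logistic(linear_score(beta0, beta1, hair_length))
    print(f"hair length {hair_length:4.1f} cm -> L = {p:.3f}")
```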

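Having this curve, we can develop a decision rule:

$$ \text{This person is } \begin{cases} \text{female}, & \text{if } L(x_i)\ge 0.5\\ \text{male}, & \text{if } L(x_i) < 0.5 \end{cases} $$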
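The decision rule can be sketched as a small function. `classify` uses the fixed 0.5 threshold from the rule; the function names and the parameter values in the usage lines are hypothetical, and the labels assume $ L(x_i) $ is the modeled probability of being female.

```python
import math


def prob_female(beta0, beta1, hair_length):
    """L(x_i) = 1 / (1 + exp(-(beta_0 + beta_1 * x_i))), the modeled P(female | x_i)."""
    a = beta0 + beta1 * hair_length
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    z = math.exp(a)
    return z / (1.0 + z)


def classify(beta0, beta1, hair_length):
    """Decision rule: female if L(x_i) >= 0.5, male otherwise."""
    return "female" if prob_female(beta0, beta1, hair_length) >= 0.5 else "male"


# Hypothetical parameters (not fitted): boundary at x = -beta0 / beta1 = 9 cm.
print(classify(-9.0, 1.0, 5.0))   # -> male
print(classify(-9.0, 1.0, 12.0))  # -> female
```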

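More generally, the feature can have more than one dimension. With a constant 1 prepended to the feature vector,

$$ x = (1,x_1,x_2,\ldots,x_n)^T, \qquad \beta = (\beta_0,\beta_1,\beta_2,\ldots,\beta_n)^T $$

so that $ \beta^T x = \beta_0 + \beta_1 x_1 + \cdots + \beta_n x_n $.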
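In code, prepending the constant 1 is what lets $ \beta_0 $ act as the intercept in $ \beta^T x $. A minimal sketch with plain Python lists; the function names and the example $ \beta $ are hypothetical.

```python
def augment(features):
    """Build x = (1, x_1, ..., x_n)^T from the raw features (x_1, ..., x_n)."""
    return [1.0] + list(features)


def score(beta, features):
    """Compute beta^T x = beta_0 + beta_1 x_1 + ... + beta_n x_n."""
    x = augment(features)
    if len(beta) != len(x):
        raise ValueError("beta needs exactly one more entry than there are features")
    return sum(b * xi for b, xi in zip(beta, x))


# Two features, hypothetical beta = (beta_0, beta_1, beta_2) = (1, 2, 3):
print(score([1.0, 2.0, 3.0], [4.0, 5.0]))  # 1 + 2*4 + 3*5 = 24.0
```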

Note:

- The decision boundary is

  $$ \beta^T x = 0 $$

  For 1-D features it is a point, for 2-D features a line, and in general a hyperplane in the $ n $-dimensional feature space.
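The boundary follows directly from the decision rule, because $ L $ is increasing and equals $ 0.5 $ exactly when its argument is zero:

$$ L(x) \ge 0.5 \iff \frac{1}{1+e^{-\beta^T x}} \ge \frac{1}{2} \iff e^{-\beta^T x} \le 1 \iff \beta^T x \ge 0 $$

In the 1-D hair-length example, $ \beta_0 + \beta_1 x = 0 $ gives the single threshold point $ x = -\beta_0/\beta_1 $ (assuming $ \beta_1 \neq 0 $).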

Having this setup, the goal is to find a $ \beta $ that makes the curve fit the data optimally. This leads to maximum likelihood estimation and Newton's method.
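As a sketch of where this leads (the standard formulation, not taken from later sections of this slecture): with $ y_i \in \{0,1\} $ and $ L(x_i) $ as above, maximum likelihood chooses $ \beta $ to maximize

$$ \ell(\beta) = \sum_i \left[ y_i \log L(x_i) + (1-y_i)\log\bigl(1-L(x_i)\bigr) \right], $$

whose gradient is $ \sum_i (y_i - L(x_i))\,x_i $ and whose negative Hessian is $ \sum_i L(x_i)(1-L(x_i))\,x_i x_i^T $. Newton's method repeatedly solves a linear system with that matrix. The Python below does this for the single hair-length feature, so the system is 2-by-2 and is solved with Cramer's rule. The function name, stopping rule, and toy data are assumptions for illustration. It also assumes the two classes overlap: for linearly separable data the MLE does not exist and $ \beta $ grows without bound.

```python
import math


def logistic(a):
    """L(a) = 1 / (1 + e^(-a)), computed without overflow."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    z = math.exp(a)
    return z / (1.0 + z)


def fit_logistic_newton(xs, ys, max_iter=50, tol=1e-10):
    """Maximum likelihood fit of (beta_0, beta_1) by Newton's method.

    xs: feature values x_i; ys: labels y_i in {0, 1}.
    Assumes the classes overlap; otherwise the MLE does not exist.
    """
    b0, b1 = 0.0, 0.0
    for _ in range(max_iter):
        # Gradient g = sum (y_i - p_i) (1, x_i) and
        # negative Hessian H = sum w_i (1, x_i)(1, x_i)^T with w_i = p_i (1 - p_i).
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = logistic(b0 + b1 * x)
            w = p * (1.0 - p)
            g0 += y - p
            g1 += (y - p) * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        # Newton step: solve H * delta = g for the 2-by-2 case (Cramer's rule).
        det = h00 * h11 - h01 * h01
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0 += d0
        b1 += d1
        if abs(d0) + abs(d1) < tol:
            break
    return b0, b1


# Hypothetical toy data: hair length in cm, y = 1 for female, 0 for male.
hair = [2, 4, 6, 8, 10, 12, 14, 16]
label = [0, 0, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic_newton(hair, label)
print(f"beta_0 = {b0:.4f}, beta_1 = {b1:.4f}, boundary at x = {-b0 / b1:.4f} cm")
```

Because this toy data set maps onto itself under $ x \to 18 - x $ together with $ y \to 1 - y $, the fitted boundary $ -\beta_0/\beta_1 $ lands at 9 cm. Recomputing the full sums in a Python loop is fine for a handful of points; a practical implementation would use matrix operations.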