| (67 intermediate revisions by 2 users not shown) | |||

| Line 9: | Line 9: | ||

</font size> | </font size> | ||

| − | A [ | + | A [http://www.projectrhea.org/learning/slectures.php slecture] by [[ECE]] student Chiho Choi |

| − | Partly based on the [[ | + | Partly based on the [[2014_Spring_ECE_662_Boutin_Statistical_Pattern_recognition_slectures|ECE662 Spring 2014 lecture]] material of [[user:mboutin|Prof. Mireille Boutin]]. |

</center> | </center> | ||

---- | ---- | ||

| − | + | [[Media:Parzen_window_method_and_classification.pdf|pdf version is available.]] | |

| + | |||

| + | |||

| + | == Density estimation using Parzen window == | ||

---- | ---- | ||

| − | Unlike parametric density estimation methods, non-parametric approaches locally estimate density function by a small number of neighboring samples [ | + | Unlike parametric density estimation methods, non-parametric approaches locally estimate density function by a small number of neighboring samples [3] and therefore show less accurate estimation results. In spite of their accuracy, however, the performance of classifiers designed using these estimates is very satisfactory. |

| Line 23: | Line 26: | ||

It can be rewritten as | It can be rewritten as | ||

<div style="text-align: center;"> <math>\int_R p(\textbf{x}') d\textbf{x}' \simeq p(\textbf{x})V \simeq \frac{k}{n}</math>, </div> | <div style="text-align: center;"> <math>\int_R p(\textbf{x}') d\textbf{x}' \simeq p(\textbf{x})V \simeq \frac{k}{n}</math>, </div> | ||

| − | if we assume a small local region <math>R</math>, a large number of samples <math>n</math>, and <math>k</math> of <math>n</math> falling in <math>R</math>. | + | if we assume a small local region <math>R</math>, a large number of samples <math>n</math>, and <math>k</math> of <math>n</math> falling in <math>R</math>. |

| + | |||

Suppose that the region <math>R</math> is a <math>d</math>-dimensional hypercube around <math>\textbf{x}_i \in \mathbb{R}^n</math> in the rest of this slecture, and let the volume <math>V_n</math>: | Suppose that the region <math>R</math> is a <math>d</math>-dimensional hypercube around <math>\textbf{x}_i \in \mathbb{R}^n</math> in the rest of this slecture, and let the volume <math>V_n</math>: | ||

<div style="text-align: center;"> <math>V_n = h_n^d</math> </div> | <div style="text-align: center;"> <math>V_n = h_n^d</math> </div> | ||

| Line 29: | Line 33: | ||

<div style="text-align: center;"> <math>\varphi(\textbf{u}) = \left\{ \begin{array}{ccc} 1, & |u_j| \leq \frac{1}{2} & j = 1, ..., d \\ 0, & else. & \end{array} \right.</math> </div> | <div style="text-align: center;"> <math>\varphi(\textbf{u}) = \left\{ \begin{array}{ccc} 1, & |u_j| \leq \frac{1}{2} & j = 1, ..., d \\ 0, & else. & \end{array} \right.</math> </div> | ||

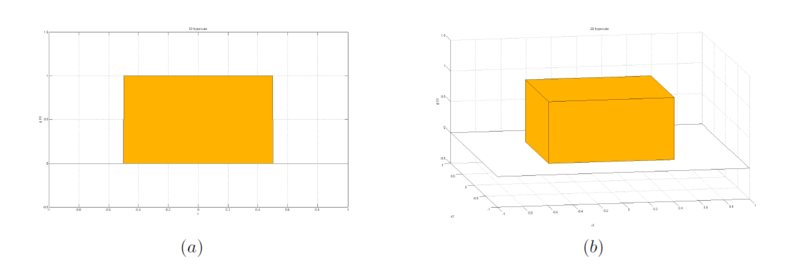

| − | [[Image: | + | and displayed in Figure 1(a) and 1(b) below such cases where <math>d</math> = 1 and <math>d</math> = 2, respectively. |

| − | <center>Figure 1: | + | |

| + | <center> | ||

| + | [[Image:pw_figure1a.png|800px]] | ||

| + | |||

| + | Figure 1. Given window function. (a) where <math>d</math> = 1, (b) where <math>d</math> = 2. | ||

| + | </center> | ||

| + | |||

| + | We simply shift this window function for <math>\textbf{x}_i</math> to determine if <math>\textbf{x}_i</math> belongs to the volume <math>V_n</math>, <math>\varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n})</math>, and can compute the number of samples <math>k_n</math> falling in this volume using it: | ||

| + | |||

| + | <div style="text-align: center;"><math> k_n = \displaystyle\sum_{i=1}^n \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n}).</math> </div> | ||

| + | In Parzen window method, therefore, the estimate for density <math>p_n(\textbf{x})</math> is | ||

| + | <center><math> p_n(\textbf{x}) = \frac{k_n/n}{V_n} = \frac{1}{n} \displaystyle\sum_{i=1}^n \frac{1}{V_n} \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n})</math>. </center> | ||

| + | |||

| + | In order to check how window length effects on <math>p_n(\textbf{x})</math>, we define <math>\delta_n(\textbf{x} - \textbf{x}_i) </math> by <math> \frac{1}{V_n} \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n})</math> as an approximation of a unit impulse centered at <math>\textbf{x}_i</math> [2] and write <math>p_n(\textbf{x})</math> [1] by: | ||

| + | <center><math> p_n(\textbf{x}) = \frac{1}{n} \displaystyle\sum_{i=1}^n \delta_n(\textbf{x} - \textbf{x}_i)</math>. </center> | ||

| + | |||

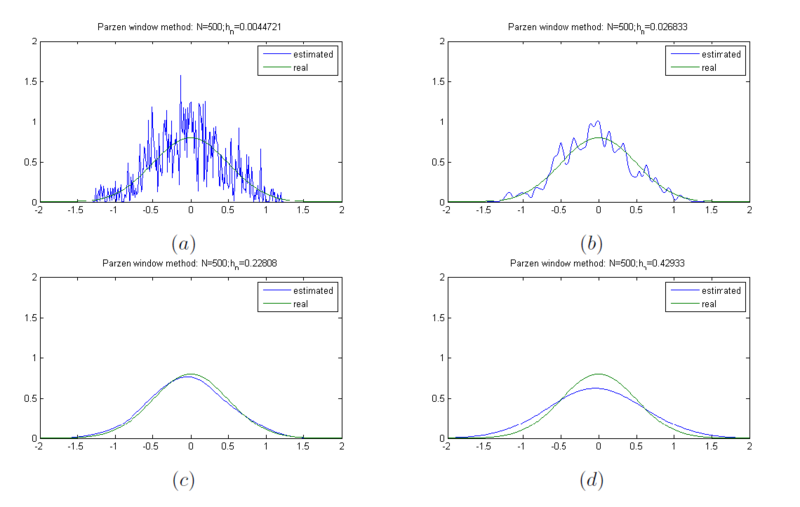

| + | From this observation, we can infer the relationship between <math>h_n</math> and <math>p_n(\textbf{x})</math>. If <math>h_n</math> is very large, the amplitude of <math>\delta_n</math> is relatively small because <math>V_n = h_n^d</math>. Thus, <math>p_n(\textbf{x})</math> becomes a very smooth estimate for <math>p(\textbf{x})</math> (see Figure 2(d)). In contrast, if <math>h_n</math> is very small, the amplitude of <math>\delta_n</math> is large. As a result, <math>p_n(\textbf{x})</math> is a noisy estimate for <math>p(\textbf{x})</math> (see Figure 2(a)). Therefore, when we have a limited number of samples, to find out an optimal value of <math>h_n</math> is very important to accurately estimate <math>p(\textbf{x})</math>. Let us assume that we have an unlimited number of samples. Then, <math>V_n</math> goes to zero as <math>n</math> increases, and hence <math>p_n(\textbf{x})</math> converges to <math>p(\textbf{x})</math> [1] no matter what <math>h_n</math> is. | ||

| + | |||

| + | <center> | ||

| + | [[Image:pw_figure2.png|800px]] | ||

| + | |||

| + | Figure 2. Density estimate using Parzen window where N = 500. (a) <math>h_n</math> = 0.0044, (b) <math>h_n</math> = 0.0268, (c) <math>h_n</math> = 0.2280, and (d) <math>h_n</math> = 0.4293. | ||

| + | </center> | ||

| + | |||

| + | |||

| + | <center> | ||

| + | [[Image:pw_figure3.png|900px]] | ||

| + | |||

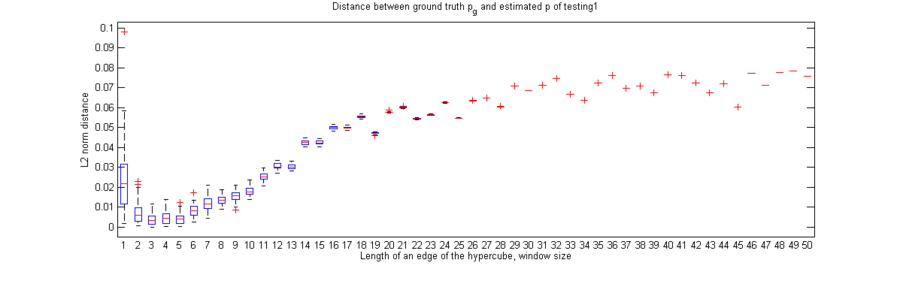

| + | Figure 3. L2 norm distance between ground truth <math>p</math> and estimated <math>p_n</math>. | ||

| + | </center> | ||

| + | |||

| + | In Figure 2, we can see the effect of the window length on the density estimate results. It demonstrates that a large or small <math>h_n</math> value causes inaccurate estimation for <math>p(\textbf{x})</math>. Also, as can be seen in Figure 3, the optimal window length in this experiment can be obtained around 3rd - 5th element (<math>\textit{i.e.}</math>, <math>h_n</math> = 0.15 - 0.35) which shows minimum distance between ground truth <math>p</math> and estimated <math>p_n</math>. As stated earlier, the choice of <math>h_n</math> is very important, especially when the number of samples is small. | ||

| + | |||

| + | |||

| + | == Convergence of the mean and variance == | ||

---- | ---- | ||

| + | Since '''x''' is a vector of random samples <math>\textbf{x}_1, ..., \textbf{x}_n</math>, <math>p_n</math> has mean <math>E(p_n(\textbf{x}))</math> and variance <math>Var(p_n(\textbf{x}))</math>. Thus, <math>p_n(\textbf{x})</math> converges to <math>p(\textbf{x})</math> [1], if | ||

| + | <center><math>\displaystyle\lim_{n\rightarrow\infty} E(p_n(\textbf{x})) = p(\textbf{x})</math> and <math>\displaystyle\lim_{n\rightarrow\infty} Var(p_n(\textbf{x})) = 0</math>. </center> | ||

| + | Let us prove the convergence of the mean and variance [2], respectively. | ||

| − | + | <center><math>\begin{array}{rclr} | |

| − | + | E(p_n(\textbf{x}))& = &\frac{1}{n} \displaystyle\sum_{i=1}^n E\left( \frac{1}{V_n} \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n})\right)&\\ | |

| − | + | & = &\frac{1}{n} \displaystyle\sum_{i=1}^n \int \frac{1}{V_n} \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n}) p(\textbf{x}) d\textbf{x}&\\ | |

| − | + | & = &\displaystyle\int \frac{1}{V_n} \varphi \left( -\frac{1}{h_n} (\textbf{x} - \textbf{x}_i)\right) p(\textbf{x}) d\textbf{x}&\\ | |

| − | + | & = &\frac{1}{V_n} \varphi \left( -\frac{\textbf{x}}{h_n} \ast p(\textbf{x})\right) &\\ | |

| − | + | &&&\\ | |

| + | if (n\rightarrow\infty)\Leftrightarrow (h_n\rightarrow0) & = & \delta(-\textbf{x}) \ast p(\textbf{x})&\\ | ||

| + | & = &p(\textbf{x}) &\blacksquare | ||

| + | \end{array}</math></center> | ||

| + | <center><math> \begin{array}{rclr} | ||

| + | Var(p_n(\textbf{x}))& = &Var\left(\displaystyle\sum_{i=1}^n \frac{1}{nV_n} \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n})\right)&\\ | ||

| + | & = &n Var\left(\frac{1}{nV_n} \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n})\right)&\\ | ||

| + | & = &n \left\{E\left(\frac{1}{n^2}\frac{1}{V_n^2} \varphi^2(\frac{\textbf{x} - \textbf{x}_i}{h_n})\right) - E\left(\frac{1}{nV_n}\varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n})\right)^2\right\}&\\ | ||

| + | & \leq & n E\left(\frac{1}{n^2}\frac{1}{V_n^2} \varphi^2(\frac{\textbf{x} - \textbf{x}_i}{h_n})\right)&\\ | ||

| + | & = &\frac{1}{n} \displaystyle\int \frac{1}{V_n^2} \left(\varphi^2(\frac{\textbf{x} - \textbf{x}_i}{h_n})\right) p(\textbf{x})d\textbf{x} &\\ | ||

| + | & \leq &\frac{1}{nV_n} \sup \varphi \displaystyle\int \frac{1}{V_n} \left(\varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n})\right) p(\textbf{x})d\textbf{x} &\\ | ||

| + | & = &\frac{1}{nV_n} \sup \varphi \left(p(\textbf{x}) \ast \frac{1}{V_n} \varphi(\frac{\textbf{x}}{h_n})\right)&\\ | ||

| + | & = &\frac{1}{nV_n} \sup \varphi \left(E(p_n(\textbf{x})\right)&\blacksquare\\ | ||

| + | \end{array}</math></center> | ||

| + | |||

| + | In order to make this formula converges to zero as <math>n</math> goes to infinity, according to the lecture note [2], we can make <math>nV_n \rightarrow \infty</math> and <math>V_n\rightarrow 0</math> <math>(\textit{e.g.}, V_n = \frac{1}{\sqrt{n}}</math>). | ||

| + | |||

| + | |||

| + | == Classification using Parzen window method == | ||

---- | ---- | ||

| + | A decision making using a classifier based on Parzen window estimation can be performed by simple majority voting method. Here, we check how it works. According to Professor Mireille Boutin [2], we pick the class such that <math>Prob(w_{i0}|x_0) \geq Prob(w_i|x_0) \forall i=1,...,c</math> from Bayes' rule. In other words, | ||

| + | |||

| + | <center><math> \begin{array}{cl} | ||

| + | \Longleftrightarrow & \rho(x_0|w_{i0}) Prob(w_{i0} \geq \rho(x_0|w_{i}) Prob(w_{i})\\ | ||

| + | \Longleftrightarrow & \rho(x_0, w_{i0}) \geq \rho(x_0, w_{i}) \\ | ||

| + | \Longleftrightarrow & \displaystyle\sum_{i=1}^n \varphi\left(\frac{\textbf{x}_l - \textbf{x}_0}{h_n}\right) \geq \displaystyle\sum_{i=1}^n \varphi\left(\frac{\textbf{x}_l - \textbf{x}_0}{h_n}\right).\\ | ||

| + | & (\textbf{x}_l \textrm{in class} w_{i0})~~~~~(\textbf{x}_l \textrm{in class} w_{i}) | ||

| + | \end{array}</math></center> | ||

| + | |||

| + | <center> | ||

| + | [[Image:pw_figure4.png|900px]] | ||

| + | |||

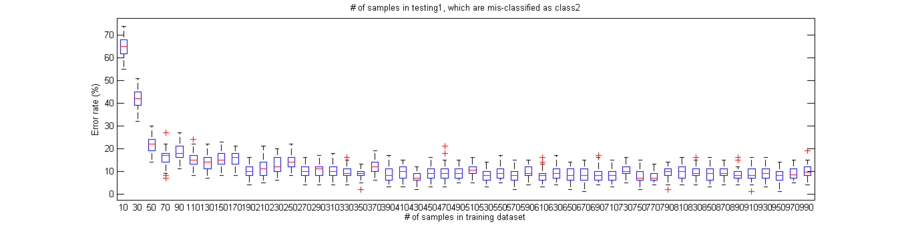

| + | Figure 4. The classification error rates with respect to the number of samples from 10 to 1000. | ||

| + | </center> | ||

| + | |||

| + | We build a classifier using hypercube as a window function. Figure 4 illustrates the classification error rates with respect to the different number of samples while optimal window length obtained above is used. In this experiment, we can recognize that the error rate decreases as the number of samples in training dataset increases until it reaches about 190 samples. After that, the classification error rate seems stable no matter what the sample size is. It demonstrates that the performance of classifiers designed using this method is satisfactory, even though density estimation is not accurate with a small samples size. As mentioned earlier, this is one of the advantages of non-parametric approaches. | ||

| + | |||

| + | |||

| + | == Discussion == | ||

---- | ---- | ||

| + | So far, we analyzed one of the non-parametric methods, Parzen Window density estimation, focused on how to estimate density, how to converge to actual density, and how to generate a classifier using it. As we proved both theoretically and experimentally in this slecture, Parzen window shows super ability for decision making without any assumptions about the distributions of given sample data. However, finding an appropriate window function which would show better performance is going to be a tedious work, that is one of the disadvantages of Parzen window method. | ||

| + | |||

| + | |||

| + | == References == | ||

| + | ---- | ||

| + | [1] Pattern classification. Richard O. Duda, Peter E. Hart, and David G. Stork.<br> | ||

| + | [2] Lecture notes of ECE 662, Professor Mireille Boutin.<br> | ||

| + | [3] Introduction to Statistical Pattern Recognition. K. Fukunaga.<br> | ||

| + | |||

| + | |||

| + | |||

---- | ---- | ||

==[[ParzenWindowReview|Questions and comments]]== | ==[[ParzenWindowReview|Questions and comments]]== | ||

Latest revision as of 09:53, 22 January 2015

Parzen window method and classification

A slecture by ECE student Chiho Choi

Partly based on the ECE662 Spring 2014 lecture material of Prof. Mireille Boutin.

Contents

Density estimation using Parzen window

Unlike parametric density estimation methods, non-parametric approaches locally estimate density function by a small number of neighboring samples [3] and therefore show less accurate estimation results. In spite of their accuracy, however, the performance of classifiers designed using these estimates is very satisfactory.

The basic idea for estimating unknown density function is based on the fact that the probability $ P $ that a vector x belongs to a region $ R $ [1]:

It can be rewritten as

if we assume a small local region $ R $, a large number of samples $ n $, and $ k $ of $ n $ falling in $ R $.

Suppose that the region $ R $ is a $ d $-dimensional hypercube around $ \textbf{x}_i \in \mathbb{R}^n $ in the rest of this slecture, and let the volume $ V_n $:

where $ h_n $ is the length of an edge. Then the window function for this hypercube can be defined by

and displayed in Figure 1(a) and 1(b) below such cases where $ d $ = 1 and $ d $ = 2, respectively.

Figure 1. Given window function. (a) where $ d $ = 1, (b) where $ d $ = 2.

We simply shift this window function for $ \textbf{x}_i $ to determine if $ \textbf{x}_i $ belongs to the volume $ V_n $, $ \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n}) $, and can compute the number of samples $ k_n $ falling in this volume using it:

In Parzen window method, therefore, the estimate for density $ p_n(\textbf{x}) $ is

In order to check how window length effects on $ p_n(\textbf{x}) $, we define $ \delta_n(\textbf{x} - \textbf{x}_i) $ by $ \frac{1}{V_n} \varphi(\frac{\textbf{x} - \textbf{x}_i}{h_n}) $ as an approximation of a unit impulse centered at $ \textbf{x}_i $ [2] and write $ p_n(\textbf{x}) $ [1] by:

From this observation, we can infer the relationship between $ h_n $ and $ p_n(\textbf{x}) $. If $ h_n $ is very large, the amplitude of $ \delta_n $ is relatively small because $ V_n = h_n^d $. Thus, $ p_n(\textbf{x}) $ becomes a very smooth estimate for $ p(\textbf{x}) $ (see Figure 2(d)). In contrast, if $ h_n $ is very small, the amplitude of $ \delta_n $ is large. As a result, $ p_n(\textbf{x}) $ is a noisy estimate for $ p(\textbf{x}) $ (see Figure 2(a)). Therefore, when we have a limited number of samples, to find out an optimal value of $ h_n $ is very important to accurately estimate $ p(\textbf{x}) $. Let us assume that we have an unlimited number of samples. Then, $ V_n $ goes to zero as $ n $ increases, and hence $ p_n(\textbf{x}) $ converges to $ p(\textbf{x}) $ [1] no matter what $ h_n $ is.

Figure 2. Density estimate using Parzen window where N = 500. (a) $ h_n $ = 0.0044, (b) $ h_n $ = 0.0268, (c) $ h_n $ = 0.2280, and (d) $ h_n $ = 0.4293.

Figure 3. L2 norm distance between ground truth $ p $ and estimated $ p_n $.

In Figure 2, we can see the effect of the window length on the density estimate results. It demonstrates that a large or small $ h_n $ value causes inaccurate estimation for $ p(\textbf{x}) $. Also, as can be seen in Figure 3, the optimal window length in this experiment can be obtained around 3rd - 5th element ($ \textit{i.e.} $, $ h_n $ = 0.15 - 0.35) which shows minimum distance between ground truth $ p $ and estimated $ p_n $. As stated earlier, the choice of $ h_n $ is very important, especially when the number of samples is small.

Convergence of the mean and variance

Since x is a vector of random samples $ \textbf{x}_1, ..., \textbf{x}_n $, $ p_n $ has mean $ E(p_n(\textbf{x})) $ and variance $ Var(p_n(\textbf{x})) $. Thus, $ p_n(\textbf{x}) $ converges to $ p(\textbf{x}) $ [1], if

Let us prove the convergence of the mean and variance [2], respectively.

In order to make this formula converges to zero as $ n $ goes to infinity, according to the lecture note [2], we can make $ nV_n \rightarrow \infty $ and $ V_n\rightarrow 0 $ $ (\textit{e.g.}, V_n = \frac{1}{\sqrt{n}} $).

Classification using Parzen window method

A decision making using a classifier based on Parzen window estimation can be performed by simple majority voting method. Here, we check how it works. According to Professor Mireille Boutin [2], we pick the class such that $ Prob(w_{i0}|x_0) \geq Prob(w_i|x_0) \forall i=1,...,c $ from Bayes' rule. In other words,

Figure 4. The classification error rates with respect to the number of samples from 10 to 1000.

We build a classifier using hypercube as a window function. Figure 4 illustrates the classification error rates with respect to the different number of samples while optimal window length obtained above is used. In this experiment, we can recognize that the error rate decreases as the number of samples in training dataset increases until it reaches about 190 samples. After that, the classification error rate seems stable no matter what the sample size is. It demonstrates that the performance of classifiers designed using this method is satisfactory, even though density estimation is not accurate with a small samples size. As mentioned earlier, this is one of the advantages of non-parametric approaches.

Discussion

So far, we analyzed one of the non-parametric methods, Parzen Window density estimation, focused on how to estimate density, how to converge to actual density, and how to generate a classifier using it. As we proved both theoretically and experimentally in this slecture, Parzen window shows super ability for decision making without any assumptions about the distributions of given sample data. However, finding an appropriate window function which would show better performance is going to be a tedious work, that is one of the disadvantages of Parzen window method.

References

[1] Pattern classification. Richard O. Duda, Peter E. Hart, and David G. Stork.

[2] Lecture notes of ECE 662, Professor Mireille Boutin.

[3] Introduction to Statistical Pattern Recognition. K. Fukunaga.

Questions and comments

If you have any questions, comments, etc. please post them on this page.