(New page: Category:ECE662 Category:Bayes' Rule Category:slecture Category:ECE662Spring2014Boutin Category:ECE Category:pattern recognition <center><font size= 4> '''Derivat...) |

|||

| Line 12: | Line 12: | ||

<font size=2> | <font size=2> | ||

A [https://www.projectrhea.org/learning/slectures.php slecture] by Stylianos Chatzidakis | A [https://www.projectrhea.org/learning/slectures.php slecture] by Stylianos Chatzidakis | ||

| + | |||

| + | Partly based on the [[2014 Spring ECE 662 Boutin|ECE662 Spring 2014 lecture]] material of [[User:Mboutin|Prof. Mireille Boutin]]. | ||

| + | </font size> | ||

| + | </center> | ||

| + | |||

| + | [[Media:slecture_Derivation of Bayes rule_in Greek.pdf| click here for PDF version]] | ||

| + | ---- | ||

| + | |||

| + | ==Prerequisite== | ||

| + | |||

| + | This tutorial assumes familiarity with the following-- | ||

| + | * The axioms of probability | ||

| + | * Definition of conditional probability | ||

| + | |||

| + | ---- | ||

| + | |||

| + | ==Bayesian Decision Making== | ||

| + | |||

| + | Lecture notes | ||

| + | |||

| + | ==1. Introduction== | ||

| + | |||

| + | '''<font size= 2>Problem:</font size>''' These notes are based on the ECE662 course at Purdue University. Their goal is to offer a brief introduction to Bayesian inference, its main characteristics and Bayes' theorem, and finally Bayes' rule as used in decision making. | ||

| + | |||

| + | The calculus of probability rests on three simple rules, on which all operations in probability theory and statistics are based: | ||

| + | |||

| + | 1. A probability lies between 0 and 1, where 0 means impossible and 1 means certain: | ||

| + | |||

| + | <math>0 \leq P(x) \leq 1</math> | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | <center> | ||

| + | {| border="1" class="wikitable" style="text-align:center; font-size:160%; " | ||

| + | |+ Table 1: Student information | ||

| + | ! scope="col" | | ||

| + | ! scope="col" | Total | ||

| + | ! scope="col" | No. of Male | ||

| + | ! scope="col" | No. of Female | ||

| + | |- | ||

| + | ! scope="row" | No. of CS majors | ||

| + | | 0.3 x 200 = 60 || 0.8 x 60 = 48 || 0.2 x 60 = 12 | ||

| + | |- | ||

| + | ! scope="row" | No. of non majors | ||

| + | | 0.7 x 200 = 140 || 0.4 x 140 = 56 || 0.6 x 140 = 84 | ||

| + | |- | ||

| + | ! scope="row" | Total | ||

| + | | 200 || 104 || 96 | ||

| + | |} | ||

| + | </center> | ||

| + | The elements of the table excluding the legends (or captions) can be considered as a 3x3 matrix. Let (1,1) represent the first cell of the matrix. The content of (1,1) is computed first, followed by content of (1,2) and then (1,3). The same is then done with the second and third rows of the matrix. <br /> | ||

| + | |||

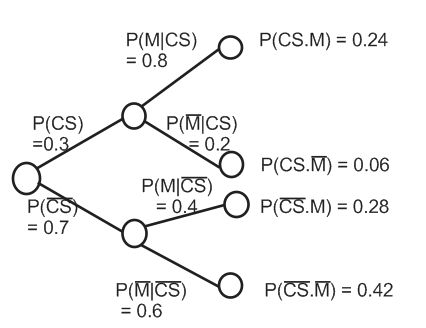

| + | The probability tree in Figure 1 is drawn by treating the events as sequential. The number of branches in the probability tree depends on the number of events (i.e., how much you know about the problem). The numbers on the branches denote conditional probabilities. Consider the root node to be at level 0, so that the leaf nodes are at level 2. Two events can happen at the root node: <math>CS</math> or <math>\overline{CS}</math>. Since 30% of the students are CS majors, we get <math>\textbf{P}(CS) = 0.3</math> and <math>\textbf{P}(\overline{CS}) = 1 - 0.3 = 0.7</math> (<font size=2>'''P'''</font size> denotes probability). At level 1, two events can happen at each node: either <math>M</math> or <math>\overline{M}</math>. From the problem definition we know that 80% of the CS majors are male, so <math>\textbf{P}(M\vert CS) = 0.8</math> and <math>\textbf{P}(\overline{M}\vert CS) = 1 - 0.8 = 0.2</math>. Similarly, <math>\textbf{P}(M\vert \overline{CS}) = 0.4</math> and <math>\textbf{P}(\overline{M}\vert \overline{CS}) = 0.6</math>. It is important to note that the events which can occur at a node are both '''mutually exclusive''' and '''collectively exhaustive'''. The probability at a leaf node is the probability that all the events along the path from the root node to that leaf occur. Note that <math>\textbf{P}(CS\cap M)</math> and <math>\textbf{P}(CS\cdot M)</math> are equivalent notations. | ||

| + | |||

| + | <center>[[Image:tree.png|frame|none|alt=Alt text|<font size= 4> '''Figure 1: Probability tree''' </font size>]] </center><br /> | ||

| + | |||

| + | # From the table, <center><math>\textbf{P}(CS) = \frac{\text{No. of CS majors}}{\text{Total no. of Students}} = \frac{60}{200} = 0.3</math></center><br /> This is nothing but the fraction of the total students who are CS majors. | ||

| + | # From the table, <center><math>\textbf{P}(CS\cap M) = \frac{\text{No. of CS majors who are also males}}{\text{Total no. of Students}} = \frac{48}{200} = 0.24</math></center><br /> Using the probability tree we can interpret <math>(CS\cap M)</math> as the occurrence of event <math>CS</math> followed by the occurrence of event <math>M</math>. Therefore,<br /> <center><math>\textbf{P}(CS\cap M) = \textbf{P}(CS)\times\textbf{P}(M\vert CS) = 0.3\times0.8 = 0.24 \text{ (multiplication rule)}</math></center> | ||

| + | # From the table, <center><math>\textbf{P}(M) = \frac{\text{Total no. of males}}{\text{Total no. of Students}} = \frac{104}{200} = 0.52</math></center> <br />From the probability tree it is clear that the event <math>M</math> can occur in 2 ways. Therefore we get, <center><math>\textbf{P}(M) = \textbf{P}(M\vert CS)\times \textbf{P}(CS) + \textbf{P}(M\vert \overline{CS})\times \textbf{P}(\overline{CS}) = 0.8\times 0.3 + 0.4\times 0.7 = 0.52\text{ (total probability theorem)}</math></center> | ||

| + | #From the table, <center><math>\textbf{P}(CS\vert M) = \frac{\text{No. of males who are CS majors}}{\text{Total no. of males}} = \frac{48}{104} = 0.4615</math></center> <br />Now let us compute the same using the probability tree. If you carefully observe the tree it is evident that the computation is not direct. So let us start from the definition of conditional probability, i.e., <center><math>\textbf{P}(CS\vert M) = \frac{\textbf{P}(CS\cap M)}{\textbf{P}(M)}</math></center> <br /> Expanding the numerator using multiplication rule, <center><math>\textbf{P}(CS\vert M) = \frac{\textbf{P}(M\vert CS)\times \textbf{P}(CS)}{\textbf{P}(M)} </math></center> <br /> Using total probability theorem in the denominator, <center><math>\textbf{P}(CS\vert M) = \frac{\textbf{P}(M\vert CS)\times \textbf{P}(CS)}{\textbf{P}(M\vert CS)\times \textbf{P}(CS) + \textbf{P}(M\vert \overline{CS})\times \textbf{P}(\overline{CS})}\text{ Eq. (1)}</math></center> <br /> | ||

| + | <center><math>\implies\textbf{P}(CS\vert M) = \frac{0.8\times 0.3}{0.8\times 0.3 + 0.4\times 0.7} = 0.4615</math></center> <br /> | ||

| + | |||

| + | '''<font size= 2>Observation:</font size>''' | ||

| + | |||

| + | From part 4 and part 1 we observe that <math>\textbf{P}(CS\vert M)>\textbf{P}(CS)</math>, i.e., <math>0.4615 > 0.3</math>. What does this mean? How did the probability that a randomly selected student is a CS major change when you were informed that the student is male? Why did it increase? | ||

| + | |||

| + | '''<font size= 2>Explanation:</font size>''' | ||

| + | |||

| + | In part 1 of the problem we only knew the percentage of CS majors in the course, so we computed the probability using just that information. In computing this probability, the sample space was the set of all students in the course. <br /> In part 4 of the problem we were informed that event <math>M</math> has occurred, i.e., we got partial information. What did we do with this information? We used it to revise our prior belief, in this case <math>\textbf{P}(CS)</math>. <math>\textbf{P}(CS)</math> is called the prior because it is what we knew about the outcome before being informed about the occurrence of event <math>M</math>. We revised the prior by shrinking the sample space from all students to only the male students in the course. The increase from the prior (0.3) to the revised probability (0.4615) is justified by the fact that males are more common among CS majors (80%) than among students overall (52%). | ||

| + | |||

| + | '''<font size= 2>Inference:</font size>''' | ||

| + | |||

| + | So, what do we learn from this example? | ||

| + | # We should revise our beliefs when we get new information. Doing this helps us predict the outcome more accurately. | ||

| + | # In this example we computed probabilities using two different methods: constructing a table and constructing a probability tree. In practice one could use either method to solve a problem. | ||

| + | |||

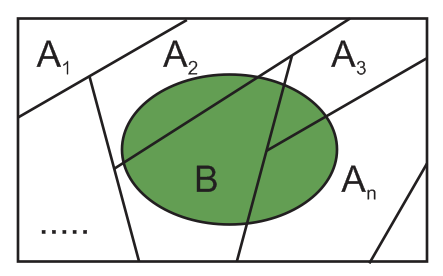

| + | Eq. (1) is called Bayes' theorem and can be generalized as, <center><math>\textbf{P}(A_{i}\vert B) = \frac{\textbf{P}(B\vert A_{i})\times \textbf{P}(A_{i})}{\sum_{j=1}^n\textbf{P}(B\vert A_{j})\times \textbf{P}(A_{j})}\text{ Eq. (2)}</math></center> <br /> where <math>n</math> is the number of events (the cardinality of the set <math>\{A_{i}\}</math>) that partition the sample space, and <math>i = 1, 2, ..., n</math>. Note that the events <math>A_{i}</math> must be mutually exclusive and exhaustive, as shown in Figure 2, where the green region corresponds to event <math>B</math>. | ||

| + | |||

| + | <center>[[Image:vennDiagram.png|frame|none|alt=Alt text|<font size= 4> '''Figure 2: Venn diagram''' </font size>]] </center> <br /> | ||

| + | |||

| + | Bayes' theorem can be understood better by visualizing the events as sequential, as depicted in the probability tree. When additional information is obtained about a subsequent event, it is used to revise the probability of the initial event. The revised probability is called the posterior. In other words, we initially have a cause-effect model where we want to predict whether event <math>B</math> will occur or not, given that event <math>A_{i}</math> has occurred. <center><math>A_{i} \xrightarrow[\textbf{P}(B\vert A_{i})]{cause-effect} B </math></center> <br /> | ||

| + | We then move to the inference model, where we are told that event <math>B</math> has occurred and our goal is to infer whether event <math>A_{i}</math> has occurred or not '''[3]'''. <center><math> A_{i} \xleftarrow[\textbf{P}(A_{i}\vert B)]{inference} B </math></center> <br /> | ||

| + | In summary, Bayes' Theorem '''[4]''' provides us a simple technique to turn information about the probability of different effects (outcomes) from each possible cause, into information about the probable cause given the effect (outcome). | ||

| + | ---- | ||

| + | |||

| + | ==Bayes' Classifier== | ||

| + | |||

| + | Bayes' Classifier uses Bayes' theorem (in the form of Bayes' rule) to classify objects into different categories. This technique is widely used in the area of pattern recognition. Let us describe the setting for a classification problem and then briefly outline the procedure. | ||

| + | |||

| + | '''<font size= 2>Problem Setting:</font size>''' | ||

| + | Consider a collection of <math>N</math> objects, each with a <math>d</math>-dimensional feature vector <math>X</math>. Let <math>X_{k}</math> be the feature vector of the <math>k^{th}</math> object. A feature vector can be thought of as a <math>d</math>-tuple describing the object. The task is to classify each object into one of the <math>C</math> categories (classes). | ||

| + | |||

| + | '''<font size= 2>Solution Approach:</font size>''' | ||

| + | Given an object <math>k</math> with feature vector <math>X_{k}</math> choose a class <math>w_{i}</math> such that, <center><math>\textbf{P}(w_{i}\vert X_{k}) \geq \textbf{P}(w_{j}\vert X_{k}), \forall j=1,2,..,C \text{ Eq. (3)} </math></center><br /> | ||

| + | Using Bayes' theorem, this can be rewritten as, <center><math>\frac{\textbf{P}(X_{k}\vert w_{i})\times\textbf{P}(w_{i})}{\sum_{l=1}^C\textbf{P}(X_{k}\vert w_{l})\times\textbf{P}(w_{l})} \geq \frac{\textbf{P}(X_{k}\vert w_{j})\times\textbf{P}(w_{j})}{\sum_{l=1}^C\textbf{P}(X_{k}\vert w_{l})\times\textbf{P}(w_{l})} </math></center><br /> | ||

| + | Since the denominators are the same, we get <center><math>\textbf{P}(X_{k}\vert w_{i})\times\textbf{P}(w_{i}) \geq \textbf{P}(X_{k}\vert w_{j})\times\textbf{P}(w_{j})\text{ Eq. (4)} </math></center><br /> | ||

| + | Eq. (4) is called '''Bayes' Rule''', where <math>\textbf{P}(X_{k}\vert w_{i})</math> and <math>\textbf{P}(w_{i})</math> are called the likelihood and prior respectively '''[5]'''. Bayes' rule, Eq. (4), is used in the classification problem instead of Eq. (3) because in most real situations it is easier to estimate the likelihood and the prior than to estimate the posterior directly. | ||

| + | |||

| + | |||

| + | ---- | ||

| + | |||

| + | == References == | ||

| + | |||

| + | # [http://www.stats.uwo.ca/faculty/bellhouse/ D. R. Bellhouse], "The Reverend Thomas Bayes FRS: a Biography to Celebrate the Tercentenary of his Birth," Statistical Science 19, 2004. | ||

| + | # Mario F. Triola, [http://faculty.washington.edu/tamre/BayesTheorem.pdf "Bayes' Theorem"]. | ||

| + | # Dimitri P. Bertsekas, John N. Tsitsiklis, "Introduction to Probability," Second Edition, Athena Scientific, Belmont, Massachusetts, USA, 2008. | ||

| + | # D. S. Sivia, "Data Analysis--A Bayesian Tutorial," Oxford University Press, 1998. | ||

| + | # [https://engineering.purdue.edu/~mboutin/ Mireille Boutin], "ECE662: Statistical Pattern Recognition and Decision Making Processes," Purdue University, Spring 2014. | ||

| + | |||

| + | ---- | ||

| + | |||

| + | ==[[Talk:slecture_From_Bayes_Theorem_to_Pattern_Recognition_review|Questions and comments]]== | ||

| + | |||

| + | If you have any questions, comments, etc. please post them on [[Talk:slecture_From_Bayes_Theorem_to_Pattern_Recognition_review|this page]]. | ||

Revision as of 21:08, 30 April 2014

Derivation of Bayes rule_In Greek

A slecture by Stylianos Chatzidakis

Partly based on the ECE662 Spring 2014 lecture material of Prof. Mireille Boutin.

Prerequisite

This tutorial assumes familiarity with the following--

- The axioms of probability

- Definition of conditional probability

Bayesian Decision Making

Lecture notes

1. Introduction

Problem: These notes are based on the ECE662 course at Purdue University. Their goal is to offer a brief introduction to Bayesian inference, its main characteristics and Bayes' theorem, and finally Bayes' rule as used in decision making.

The calculus of probability rests on three simple rules, on which all operations in probability theory and statistics are based:

1. A probability lies between 0 and 1, where 0 means impossible and 1 means certain:

$ 0 \leq P(x) \leq 1 $

Table 1: Student information

| | Total | No. of males | No. of females |
|---|---|---|---|
| No. of CS majors | 0.3 x 200 = 60 | 0.8 x 60 = 48 | 0.2 x 60 = 12 |
| No. of non-majors | 0.7 x 200 = 140 | 0.4 x 140 = 56 | 0.6 x 140 = 84 |
| Total | 200 | 104 | 96 |

The elements of the table excluding the legends (or captions) can be considered as a 3x3 matrix. Let (1,1) represent the first cell of the matrix. The content of (1,1) is computed first, followed by content of (1,2) and then (1,3). The same is then done with the second and third rows of the matrix.
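As a rough illustration of the fill order described above, the following Python sketch rebuilds the counts of Table 1 row by row. The function name `build_table` and the use of `fractions.Fraction` (to keep the arithmetic exact) are choices made here for illustration and are not part of the original notes.

```python
from fractions import Fraction

TOTAL = 200                          # total number of students (Table 1)
P_CS = Fraction(3, 10)               # fraction of students who are CS majors
P_M_GIVEN_CS = Fraction(8, 10)       # fraction of CS majors who are male
P_M_GIVEN_NOT_CS = Fraction(4, 10)   # fraction of non-majors who are male


def build_table(total, p_cs, p_m_cs, p_m_not_cs):
    """Return the 3x3 matrix of counts, one [total, males, females] list per row."""
    rows = []
    for p_row, p_male in ((p_cs, p_m_cs), (1 - p_cs, p_m_not_cs)):
        row_total = p_row * total             # first cell of the row
        males = p_male * row_total            # second cell of the row
        females = (1 - p_male) * row_total    # third cell of the row
        rows.append([int(row_total), int(males), int(females)])
    # The last row holds the column sums of the two rows above.
    rows.append([sum(col) for col in zip(*rows)])
    return rows


table = build_table(TOTAL, P_CS, P_M_GIVEN_CS, P_M_GIVEN_NOT_CS)
for label, row in zip(("CS majors", "non-majors", "Total"), table):
    print(label, row)
```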

The probability tree in Figure 1 is drawn by treating the events as sequential. The number of branches in the probability tree depends on the number of events (i.e., how much you know about the problem). The numbers on the branches denote conditional probabilities. Consider the root node to be at level 0, so that the leaf nodes are at level 2. Two events can happen at the root node: $ CS $ or $ \overline{CS} $. Since 30% of the students are CS majors, we get $ \textbf{P}(CS) = 0.3 $ and $ \textbf{P}(\overline{CS}) = 1 - 0.3 = 0.7 $ (P denotes probability). At level 1, two events can happen at each node: either $ M $ or $ \overline{M} $. From the problem definition we know that 80% of the CS majors are male, so $ \textbf{P}(M\vert CS) = 0.8 $ and $ \textbf{P}(\overline{M}\vert CS) = 1 - 0.8 = 0.2 $. Similarly, $ \textbf{P}(M\vert \overline{CS}) = 0.4 $ and $ \textbf{P}(\overline{M}\vert \overline{CS}) = 0.6 $. It is important to note that the events which can occur at a node are both mutually exclusive and collectively exhaustive. The probability at a leaf node is the probability that all the events along the path from the root node to that leaf occur. Note that $ \textbf{P}(CS\cap M) $ and $ \textbf{P}(CS\cdot M) $ are equivalent notations.

1. From the table,

$ \textbf{P}(CS) = \frac{\text{No. of CS majors}}{\text{Total no. of Students}} = \frac{60}{200} = 0.3 $

This is simply the fraction of all students who are CS majors.

2. From the table,

$ \textbf{P}(CS\cap M) = \frac{\text{No. of CS majors who are also males}}{\text{Total no. of Students}} = \frac{48}{200} = 0.24 $

Using the probability tree we can interpret $ (CS\cap M) $ as the occurrence of event $ CS $ followed by the occurrence of event $ M $. Therefore,

$ \textbf{P}(CS\cap M) = \textbf{P}(CS)\times\textbf{P}(M\vert CS) = 0.3\times0.8 = 0.24 \text{ (multiplication rule)} $

3. From the table,

$ \textbf{P}(M) = \frac{\text{Total no. of males}}{\text{Total no. of Students}} = \frac{104}{200} = 0.52 $

From the probability tree it is clear that the event $ M $ can occur in 2 ways. Therefore we get,

$ \textbf{P}(M) = \textbf{P}(M\vert CS)\times \textbf{P}(CS) + \textbf{P}(M\vert \overline{CS})\times \textbf{P}(\overline{CS}) = 0.8\times 0.3 + 0.4\times 0.7 = 0.52\text{ (total probability theorem)} $

4. From the table,

$ \textbf{P}(CS\vert M) = \frac{\text{No. of males who are CS majors}}{\text{Total no. of males}} = \frac{48}{104} = 0.4615 $

Now let us compute the same using the probability tree. If you carefully observe the tree, it is evident that the computation is not direct. So let us start from the definition of conditional probability, i.e.,

$ \textbf{P}(CS\vert M) = \frac{\textbf{P}(CS\cap M)}{\textbf{P}(M)} $

Expanding the numerator using the multiplication rule,

$ \textbf{P}(CS\vert M) = \frac{\textbf{P}(M\vert CS)\times \textbf{P}(CS)}{\textbf{P}(M)} $

Using the total probability theorem in the denominator,

$ \textbf{P}(CS\vert M) = \frac{\textbf{P}(M\vert CS)\times \textbf{P}(CS)}{\textbf{P}(M\vert CS)\times \textbf{P}(CS) + \textbf{P}(M\vert \overline{CS})\times \textbf{P}(\overline{CS})}\text{ Eq. (1)} $

$ \implies\textbf{P}(CS\vert M) = \frac{0.8\times 0.3}{0.8\times 0.3 + 0.4\times 0.7} = 0.4615 $
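The four computations above can be checked numerically. The sketch below walks the probability tree using only the three given numbers (0.3, 0.8 and 0.4); the variable names are illustrative and not taken from the notes.

```python
import math

# Given quantities, read off the branches of the probability tree.
p_cs = 0.3               # P(CS)
p_m_given_cs = 0.8       # P(M | CS)
p_m_given_not_cs = 0.4   # P(M | not CS)

# Complement at the root node.
p_not_cs = 1 - p_cs

# Part 2: multiplication rule along the path root -> CS -> M.
p_cs_and_m = p_cs * p_m_given_cs

# Part 3: total probability theorem, summing the two paths that end in M.
p_m = p_m_given_cs * p_cs + p_m_given_not_cs * p_not_cs

# Part 4: Eq. (1), the joint probability divided by the evidence.
p_cs_given_m = p_cs_and_m / p_m

print(round(p_cs_and_m, 4))    # 0.24
print(round(p_m, 4))           # 0.52
print(round(p_cs_given_m, 4))  # 0.4615

# The tree-based result agrees with the table-based ratio 48/104.
assert math.isclose(p_cs_given_m, 48 / 104)
```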

Observation:

From part 4 and part 1 we observe that $ \textbf{P}(CS\vert M)>\textbf{P}(CS) $, i.e., $ 0.4615 > 0.3 $. What does this mean? How did the probability that a randomly selected student is a CS major change when you were informed that the student is male? Why did it increase?

Explanation:

In part 1 of the problem we only knew the percentage of CS majors in the course, so we computed the probability using just that information. In computing this probability, the sample space was the set of all students in the course.

In part 4 of the problem we were informed that event $ M $ has occurred, i.e., we got partial information. What did we do with this information? We used it to revise our prior belief, in this case $ \textbf{P}(CS) $. $ \textbf{P}(CS) $ is called the prior because it is what we knew about the outcome before being informed about the occurrence of event $ M $. We revised the prior by shrinking the sample space from all students to only the male students in the course. The increase from the prior (0.3) to the revised probability (0.4615) is justified by the fact that males are more common among CS majors (80%) than among students overall (52%).

Inference:

So, what do we learn from this example?

- We should revise our beliefs when we get new information. Doing this helps us predict the outcome more accurately.

- In this example we computed probabilities using two different methods: constructing a table and constructing a probability tree. In practice one could use either method to solve a problem.

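Eq. (1) is called Bayes' theorem and can be generalized as,

$ \textbf{P}(A_{i}\vert B) = \frac{\textbf{P}(B\vert A_{i})\times \textbf{P}(A_{i})}{\sum_{j=1}^n\textbf{P}(B\vert A_{j})\times \textbf{P}(A_{j})}\text{ Eq. (2)} $

where $ n $ is the number of events (the cardinality of the set $ \{A_{i}\} $) that partition the sample space, and $ i = 1, 2, ..., n $. Note that the events $ A_{i} $ must be mutually exclusive and exhaustive, as shown in Figure 2, where the green region corresponds to event $ B $.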

Bayes' theorem can be understood better by visualizing the events as sequential, as depicted in the probability tree. When additional information is obtained about a subsequent event, it is used to revise the probability of the initial event. The revised probability is called the posterior. In other words, we initially have a cause-effect model where we want to predict whether event $ B $ will occur or not, given that event $ A_{i} $ has occurred.

$ A_{i} \xrightarrow[\textbf{P}(B\vert A_{i})]{cause-effect} B $

We then move to the inference model, where we are told that event $ B $ has occurred and our goal is to infer whether event $ A_{i} $ has occurred or not [3].

$ A_{i} \xleftarrow[\textbf{P}(A_{i}\vert B)]{inference} B $

In summary, Bayes' Theorem [4] provides us a simple technique to turn information about the probability of different effects (outcomes) from each possible cause, into information about the probable cause given the effect (outcome).
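Eq. (2) translates almost directly into code. The following is a minimal sketch, assuming the priors and likelihoods of a mutually exclusive and exhaustive set of events are supplied as plain lists in the same order; `posterior` is a hypothetical helper name introduced here.

```python
import math


def posterior(priors, likelihoods):
    """Return [P(A_i | B)] from priors [P(A_i)] and likelihoods [P(B | A_i)], per Eq. (2)."""
    if len(priors) != len(likelihoods):
        raise ValueError("priors and likelihoods must have the same length")
    # Numerators of Eq. (2): P(B | A_i) * P(A_i) for each event A_i.
    joint = [lik * pri for lik, pri in zip(likelihoods, priors)]
    # Denominator of Eq. (2): total probability of B.
    evidence = sum(joint)
    if evidence == 0:
        raise ValueError("P(B) is zero, so the posterior is undefined")
    return [j / evidence for j in joint]


# Student example: A_1 = CS, A_2 = not CS, B = M.
post = posterior(priors=[0.3, 0.7], likelihoods=[0.8, 0.4])
print([round(p, 4) for p in post])  # [0.4615, 0.5385]
assert math.isclose(sum(post), 1.0)
```

Because the denominator is shared by all events, the posteriors always sum to 1 when the events form a partition of the sample space.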

Bayes' Classifier

Bayes' Classifier uses Bayes' theorem (in the form of Bayes' rule) to classify objects into different categories. This technique is widely used in the area of pattern recognition. Let us describe the setting for a classification problem and then briefly outline the procedure.

Problem Setting: Consider a collection of $ N $ objects, each with a $ d $-dimensional feature vector $ X $. Let $ X_{k} $ be the feature vector of the $ k^{th} $ object. A feature vector can be thought of as a $ d $-tuple describing the object. The task is to classify each object into one of the $ C $ categories (classes).

Solution Approach:

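Given an object $ k $ with feature vector $ X_{k} $, choose a class $ w_{i} $ such that,

$ \textbf{P}(w_{i}\vert X_{k}) \geq \textbf{P}(w_{j}\vert X_{k}), \forall j=1,2,..,C \text{ Eq. (3)} $

Using Bayes' theorem, this can be rewritten as,

$ \frac{\textbf{P}(X_{k}\vert w_{i})\times\textbf{P}(w_{i})}{\sum_{l=1}^C\textbf{P}(X_{k}\vert w_{l})\times\textbf{P}(w_{l})} \geq \frac{\textbf{P}(X_{k}\vert w_{j})\times\textbf{P}(w_{j})}{\sum_{l=1}^C\textbf{P}(X_{k}\vert w_{l})\times\textbf{P}(w_{l})} $

Since the denominators are the same, we get

$ \textbf{P}(X_{k}\vert w_{i})\times\textbf{P}(w_{i}) \geq \textbf{P}(X_{k}\vert w_{j})\times\textbf{P}(w_{j})\text{ Eq. (4)} $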

Eq. (4) is called Bayes' Rule, where $ \textbf{P}(X_{k}\vert w_{i}) $ and $ \textbf{P}(w_{i}) $ are called the likelihood and prior respectively [5]. Bayes' rule, Eq. (4), is used in the classification problem instead of Eq. (3) because in most real situations it is easier to estimate the likelihood and the prior than to estimate the posterior directly.
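The decision in Eq. (4) can be sketched for a single discrete feature as follows, assuming the priors and likelihoods are already known and stored in dictionaries. The function name `bayes_rule_classify`, the class labels and the reuse of the student example as data are illustrative assumptions, not part of the lecture material.

```python
def bayes_rule_classify(x, priors, likelihoods):
    """Pick the class w maximizing P(x | w) * P(w), as in Eq. (4).

    priors:      dict mapping class label -> P(w)
    likelihoods: dict mapping class label -> dict mapping feature value -> P(x | w)
    Ties are resolved in favor of the class listed first in `priors`.
    """
    return max(priors, key=lambda w: likelihoods[w][x] * priors[w])


# Hypothetical data taken from the student example: the feature is the
# student's gender, and the classes are "CS" and "not_CS".
priors = {"CS": 0.3, "not_CS": 0.7}
likelihoods = {
    "CS": {"M": 0.8, "F": 0.2},
    "not_CS": {"M": 0.4, "F": 0.6},
}

print(bayes_rule_classify("M", priors, likelihoods))  # not_CS
print(bayes_rule_classify("F", priors, likelihoods))  # not_CS
```

With these numbers a male student is still assigned to the non-major class, because 0.4 x 0.7 = 0.28 exceeds 0.8 x 0.3 = 0.24, even though observing $ M $ raised the probability of $ CS $ from 0.3 to 0.4615. The shared denominator never needs to be computed to make the decision.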

References

1. D. R. Bellhouse, "The Reverend Thomas Bayes FRS: a Biography to Celebrate the Tercentenary of his Birth," Statistical Science 19, 2004.

2. Mario F. Triola, "Bayes' Theorem".

3. Dimitri P. Bertsekas, John N. Tsitsiklis, "Introduction to Probability," Second Edition, Athena Scientific, Belmont, Massachusetts, USA, 2008.

4. D. S. Sivia, "Data Analysis--A Bayesian Tutorial," Oxford University Press, 1998.

5. Mireille Boutin, "ECE662: Statistical Pattern Recognition and Decision Making Processes," Purdue University, Spring 2014.

Questions and comments

If you have any questions, comments, etc. please post them on this page.