| (8 intermediate revisions by one other user not shown) | |||

| Line 1: | Line 1: | ||

[[Category: ECE]] | [[Category: ECE]] | ||

| − | [[Category: | + | [[Category: ECE301]] |

| + | [[Category:signals and systems]] | ||

[[Category: Fall]] | [[Category: Fall]] | ||

[[Category: 2007]] | [[Category: 2007]] | ||

| Line 14: | Line 15: | ||

==[[ECE 301 Fall 2007 mboutin LTI Systems|LTI Systems]]== | ==[[ECE 301 Fall 2007 mboutin LTI Systems|LTI Systems]]== | ||

{{:ECE 301 Fall 2007 mboutin LTI Systems}} | {{:ECE 301 Fall 2007 mboutin LTI Systems}} | ||

| − | |||

| − | |||

| − | |||

==[[ECE 301 Fall 2007 mboutin Fourier Series|Fourier Series]]== | ==[[ECE 301 Fall 2007 mboutin Fourier Series|Fourier Series]]== | ||

{{:ECE 301 Fall 2007 mboutin Fourier Series}} | {{:ECE 301 Fall 2007 mboutin Fourier Series}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Duality|Duality]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Duality}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Fourier Transform Table|Fourier Transform Table]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Fourier Transform Table}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Convergence of Fourier Transforms|Convergence of Fourier Transforms]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Convergence of Fourier Transforms}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Sifting Property|Sifting Property]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Sifting Property}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Difference Between Fourier and Laplace|Difference Between Fourier and Laplace]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Difference Between Fourier and Laplace}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Common Questions on FS/FT Answered By Mimi|Common Questions on FS/FT Answered by Mimi]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Common Questions on FS/FT Answered By Mimi}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Guide to Partial Fraction Expansion|Guide to Partial Fraction Expansion]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Guide to Partial Fraction Expansion}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Joseph Fourier|Mr. Fourier]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Joseph Fourier}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Geometric Series Note|Geometric Series Note]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Geometric Series Note}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Meaning of Fourier Transform|Meaning of Fourier Transform]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Meaning of Fourier Transform}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Multidimension Fourier Transform|Multidimension Fourier Transform]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Multidimension Fourier Transform}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Even/Odd Fourier Coefficients|Even/Odd Fourier Coefficients]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Even/Odd Fourier Coefficients}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Sampling Theorem|Sampling Theorem]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Sampling Theorem}} | ||

| + | |||

| + | ==[[ECE 301 Fall 2007 mboutin Sampling Theorem Def|Sampling Theorem Def.]]== | ||

| + | {{:ECE 301 Fall 2007 mboutin Sampling Theorem Def}} | ||

Latest revision as of 15:56, 23 April 2013

Contents

- 1 Periodic Signals

- 2 Useful Definitions for ECE301: signals and systems

- 3 Automated System Property Verification (for ECE301: "signals and systems")

- 4 Overview

- 5 Basics: Systems as "functions that operate on functions"

- 6 Testing properties

- 7 Framework for computing the CT Convolution of two unit step exponentials

- 7.1 Definition of Sampling Theorem

- 7.2 Fourier Series

- 7.3 Coefficient LTI Transfer

- 7.4 Types of Filters

- 7.5 Types of Filters

- 7.6 Duality

- 7.7 Fourier Transform Table

- 7.8 Convergence of Fourier Transforms

- 7.9 Sifting Property

- 7.10 Difference Between Fourier and Laplace

- 7.11 Common Questions on FS/FT Answered by Mimi

- 7.12 Guide to Partial Fraction Expansion

- 8 A guide to Partial Fraction Expansion

- 9 The Sampling Theorem

Periodic Signals

Useful Definitions for ECE301: signals and systems

Periodic CT Signal:

- A CT signal $ x(t)\ $ is called periodic if there exists $ T>0\ $ period such that $ x(t+T)=x(t)\ $, for all values of t. The fundamental period is the smallest period of all periods of a signal (denoted by $ T_0\ $).

In Mathspeak:

- $ x(t) periodic \iff \exists T>0 \ni x(t+T)=x(t), \forall t \in \mathbb{R} $

Periodic DT Signal:

- A DT signal $ x[n]\ $ is called periodic if there exists $ N>0\ $ period such that $ x[n+N]=x[n]\ $, for all values of n. the fundamental period is the smallest period of all periods of a signal (denoted by $ N_0\ $).

In Mathspeak:

- $ x[n] periodic \iff \exists N>0 \ni x[n+N]=x[n], \forall n \in \mathbb{N} $

Comment:

- The difference between CT and DT:

Note that the period N must be an integer in DT, but that the period T in CT can be any positive real number.

-Mimi (Wed, 26 Sep 2007 16:29:43)

Systems in General

Automated Property Verification

Automated System Property Verification (for ECE301: "signals and systems")

Overview

For those who are CS geeks like William, the question of whether a function to check whether a system has a given property can be written is very pertinent. I have been looking into symbolic math solvers to try to make them do this.

So far, my most promising lead is the AXIOM solver [1], which might have a LISP interface to its symbolic math engine. This would be ideal.

My notes so far are as follows:

Basics: Systems as "functions that operate on functions"

A system can be represented as a function system(x), where x is some function x(t), that returns another function of the form y(t).

In Common LISP, an example of x might be:

(setq x (lambda (tt) (+ tt 5)))

This corresponds to x(t) = t + 5. t is a reserved constant in LISP, so I use tt. A setq here probably isn't the "right way" to do things, but LISP is not my native language.

At any rate, a simple system to play with would be a time shift. You would represent this as follows (note the extra argument to, representing the amount of time to shift by):

(defun timeshift (x to) (lambda (tt) (funcall x (- tt to))))

You can then represent x(t) -> [Shift by to]? -> y(t) as (in more broken LISP):

(setq y (timeshift x 'to))

At this point, you could in theory make a call

(funcall y tt)

to get y(tt) = x(tt - to) = tt + 5, but LISP doesn't do symbolic math natively. Thus, some work needs to be done to get AXIOM or some other engine to do the heavy lifting.

An example using clisp, with commentary:

(setq x (lambda (tt) (+ tt 5))) ; x(tt) = tt + 5 (defun timeshift (x to) (lambda (tt) (funcall x (- tt to)))) ; Define the time-shift function

- Apply the timeshift to x with to=15.

- This is akin to y(t) = x(t - 15) = t - 15 + 5 = t - 10

(setq y (timeshift x 15))

- Finally, compute a value of y(t)

- y(3) = 3 - 10 = -7

(funcall y 3)

Again, the lack of native symbolic math operations in LISP is extremely limiting, so a symbolic engine needs to be integrated somehow.

Testing properties

As you can see, using LISP to model systems is potentially a powerful method. The "functions operating on functions" way of thinking can be extended even further to the "system" functions (such as timeshift above) to prove things about systems.

Lacking a good symbolic solver, I cannot test the following code yet, but we might write a function "linear" that determines whether the given system function is linear or not.

(defun islinear (sysfunc)

(let ((y1 (funcall sysfunc 'x1)) ; Define y1 = sysfunc(x1), y2 = sysfunc(x2)

(y2 (funcall sysfunc 'x2)))

(eq

(+ (* 'a (funcall y1 'tt)) ; a*y1(t) + b*y2(t) = ...

(* 'b (funcall y2 'tt)))

(funcall

(funcall sysfunc

; ... = sysfunc(a*x1(t) + b*x2(t))(t) ?

(lambda (tt) (+ (* 'a (funcall 'x1 tt))

(* 'b (funcall 'x2 tt))))

) 'tt)

)))

Properties of Systems

There are five general properties of systems that are introduced in this homework. These include systems with and without memory, time invariant systems, linear systems, causal systems and stable systems. This post will detail how to check if a system exhibits these general properties.

1. Systems with and without memory:

- Def: A system is said to be memoryless if its output for each value of the independent variable at a given time is dependent only on the input at that same time.

- Proving: This is very simple and can be done by visual inspection alone. If there is any kind of phase shift, the systems depends on values other than the input at that current time and is NOT memoryless. Also, if the system is described by an accumulator or summer again it depends of values other than the input at the current time and is NOT memoryless. Note that in a system comprised of the linear combination of sub-systems if any one of the sub-systems is memoryless than the entire system is memoryless.

2. Time Invariant Systems:

- Def: A system is time invariant if a time shift in the input signal results in an identical time shift in the output signal.

- Proving: In equation form, the system y(t) = x(t) is time invariant if y(t-to) = x(t-to). Plug in "t-to" for all "t's" in the system. Simplify. If the end result is just a time delay, then the system is time invariant. The easy way--if there are any "t's" outside the function x(t) [i.e. t*x(t)]? the system must NOT be time invariant.

3. Linear Systems:

- Def: A system is linear if any output can be derived from the sum of the products of an output and constant and another output and constant. In equation form for y(t) = x(t) with outputs y1, y2, and y3: $ y_3(t) = a\cdot y_1(t) + b\cdot y_2(t) $

- Proving: Use the equation above to prove. Example 1.17 in the text shows a nice overview. Basically, consider two arbitrary inputs and their respective outputs. A third input is considered to be a linear combination of the first two inputs. Write the output and substitute the third input for the linear combination. Separate the a and b variables. If you can arrange the equation so that the output of the third input is the linear combination of the first two outputs, then the system is linear.

4. Causal Systems:

- Def: A system is causal if the output at any time depends only on the values of the input at the present time and in the past.

- Proving: Consider each component of the system separately. If there is no time delay, the systems depends only on the present time and is causal. If there is a time delay, determine whether it is in past or future time. If it is past time, then the system is causal. When the systems is rotated over the y-axis [i.e. x(-t)]? then if is possible for some values of t that the system is in past time and for others in future. Determine these values. The system is causal for the values of past time as well as for the value of present time and else is NOT causal.

5. Stable Systems:

- Def: A system is stable if a bounded input function yields a bounded output function.

- Proving: Consider y(t) = x(t). If the input function is bounded then $ |x(t)| < \epsilon $. Consider the end behavior of all combinations of minimum and maximum values for x(t). If it is bounded, then y(t) IS stable. (Look at Example 1.13 in the text for further instruction).

... --brian.a.baumgartner.1, Wed, 05 Sep 2007 11:20:49

I was mistaken in article 4. Just because a system does not have a time delay does not PROVE that the system is causal. I wrote this without considering time scaling. I daresay that if a system does not have a time delay OR time scaling then it is causal; however I suggest doing the math to back this up. To find the values of when the system is causal and when it is not consider the system y(t) = x(at+b). Next set up the following inequality:

at+b <= t

The system IS causal as long as this inequality holds true. It is NOT causal for at+b > t.

Simplified View of Cascaded Systems

We talked in class about how, in a cascaded system, the "time coordinates" change and that you have to keep track of them as they propagate down the system through other transforms if you are trying to find the final output of the systems. This explanation seems somewhat confusing, so I tried looking at it in a different manor. It seems like we are trying to find the equation for the output by starting at the input, like a signal would, and writing all the transforms or "things" that happen to the signal using different variables, then we go back and substitute so it all works out. I did the reverse and started with the output and "built up" the total effect (output equation) from what happened "most recently" (that is to say the present is at the output and the input is the past), so you don't have to worry about keeping track of different substitution variables (Mimi used squares and squiggles) or the "time coordinates".

A very simple method is as such:

- Start from the output, take the last transform and put it in parenthesis. It's in a nice package now, it's done, don't touch it.

- Take that "package" and drop it right into the next "most recent" (one to the left) transform (substitute it for t). Put that in parenthesis, it's your new package.

- Keep going until you run out of transforms.

- If so inclined, simplify.

Example:

- Sys 1: y1(t)= x(2t) Sys 2: y2(t)= x(t-3)

- Input -> Sys 1 -> Sys 2 -> Output

- Start from the output, take the "most recent" transform (Sys 2) and put it in parenthesis, so: (t-3)

- Next, take the next most recent transform (Sys 1), and drop your (t-3) in it (substitute your "package" of (t-3) for t): 2(t-3)

- Simplify: 2t-6

- Done! Don't forget it is a transform of a function, not a function itself so you need to state so, as such: z(t) = x(2t-6)

So, put very simply, start at the output and substitute, in iterations, towards the input. That's all you really need to know, I just thought if I was verbose and used analogies I might score bonus points. Keep in mind the all the transforms deal with the independent variable, in this case time. Also, in the spirit of the kiwi, I could be completely wrong about everything. :) So seriously, someone check my work.

Determining the effects of Transforms of the Independent Variable (Time) in the form x(at + b) --michael.a.mitchell.2, Sun, 02 Sep 2007 12:20:52

If you are trying to find the effect of a transform in the form of x(at + b), you should:

- Delay or advance x(t) by the value of b. (Advance if b>0, delay if b<0)

- Then scale/reverse time by the value of a. (Compress if |a|>1, Stretch if |a|< 1, Reverse time if a<0)

LTI Systems

Properties of Convolution and LTI Systems

Linear Time Invariant (LTI) systems have properties that arise from the properties of convolution.

Property 1: Convolution is Commutative

- $ x_1(t)*x_2(t) = x_2(t)*x_1(t)\ $

System Example: Convolving the input to a system with its impulse response is the same as convolving the impulse response with the input.

Property 2: Convolution is Distributive

- $ \displaystyle x_1(t)* \left ( x_2(t)+x_3(t) \right ) = x_1(t)*x_2(t)+x_1(t)*x_3(t) $

System Example: Convolving a single input with two impulse responses then adding the output is the same as convolving the input with the sum of the impulse responses.

Property 2: Convolution is Associative

- $ x_1(t)*x_2(t)*x_3(t) = x_2(t)*x_3(t)*x_1(t)\ $

System Example: Convolving an input with an impulse response and convolving that with the impulse response of another system is the same as convolving the two impulse responses and then the input to the system.

Convolution Simplification

Convolution of Unit Step Function:

- To take a convolution, first determine whether the system is CT or DT and use the correct formula. Next it's time to simplify. Originally the bounds are set to negative and positive infinity. The unit step function will determine the new set of bounds. Consider the following unit step function as an example: $ u(2t-1)\ $. This function will be a zero as long as $ (2t-1) $ is less than 0. Solve for t and apply the new bounds. Next its time for the real work!

Convolution of Delta Function:

- Consider $ \delta (ax+b) $. Simplify this convolution by solving for when the delta function is set to one. (This is when the $ (ax+b)\ $ is equal to zero). That is the only value of the integration or sum, so replace t accordingly and solve.

Most General Convolutions (CT)

Framework for computing the CT Convolution of two unit step exponentials

Let's take the convolution of the two most general unit-step exponentials in CT.

This solution can be very helpful in checking your work for convolutions of this form. Just plug in your numbers for the capital letters.

(I know this is kinda long, but it is very detailed to show the process of how to get to the general simplified solution.)

$ x_1(t)=Ae^{Bt+C}u(Dt+E) \qquad x_2(t)=Fe^{Gt+H}u(It+J) $

$ \begin{align} x_1(t)*x_2(t) &= \int_{-\infty}^{\infty}x_1(\tau)x_2(t-\tau)d\tau \\ &=\int_{-\infty}^{\infty}Ae^{B\tau+C}u(D\tau+E)Fe^{G(t-\tau)+H}u(I(t-\tau)+J)d\tau \\ &=AF\int_{-\infty}^{\infty}e^{B\tau+C+G(t-\tau)+H}u(D\tau+E)u(It-I\tau+J)d\tau; \;(u(D\tau+E)=0\;,for\;D\tau+E<0\;\rightarrow\;\tau<\frac{-E}{D}) \\ &=AF\int_{\frac{-E}{D}}^{\infty}e^{\tau(B-G)+Gt+C+H}u(It-I\tau+J)d\tau; \;(u(It-I\tau+J)=0\;,for\;It-I\tau+J<0\;\rightarrow\;\tau>t+\frac{J}{I}) \\ &=AF\int_{\frac{-E}{D}}^{t+\frac{J}{I}}e^{\tau(B-G)+Gt+C+H}d\tau\cdot u(t+\frac{J}{I}+\frac{E}{D}) \\ &=AFe^{Gt+C+H}\int_{\frac{-E}{D}}^{t+\frac{J}{I}}e^{\tau(B-G)}d\tau\cdot u(t+\frac{J}{I}+\frac{E}{D}) \\ &=AFe^{Gt+C+H}\frac{1}{B-G}\left[e^{\tau(B-G)}\right]_{\frac{-E}{D}}^{t+\frac{J}{I}}\cdot u(t+\frac{J}{I}+\frac{E}{D}) \\ &=AFe^{Gt+C+H}\frac{1}{B-G}(e^{(t+\frac{J}{I})\cdot(B-G)}-e^{\frac{-E}{D}\cdot(B-G)})\cdot u(t+\frac{J}{I}+\frac{E}{D}) \\ &=\frac{AF}{B-G}(e^{Gt+CH+(t+\frac{J}{I})\cdot(B-G)}-e^{Gt+C+H-\frac{E}{D}(B-G)})\cdot u(t+\frac{J}{I}+\frac{E}{D}) \\ &=\frac{AF}{B-G}(e^{Bt+C+H+\frac{J}{I}(B-G)}-e^{Gt+C+H+\frac{E}{D}(G-B)})\cdot u(t+\frac{J}{I}+\frac{E}{D}) \end{align} $

Example: Problem 2 on Fall 06 Midterm 1:

$ Let:\;x_1(t)=x(t)=e^{-2t}u(t) \qquad x_2(t)=h(t)=u(t) $

$ Thus:\;A=1,\;B=-2,\;C=0,\;D=1,\;E=0,\;F=1,\;G=0,\;H=0,\;I=1,\;J=0 $

$ \begin{align} x(t)*h(t)&=x_1(t)*x_2(t) \\ &=\frac{1\cdot1}{-2-0}(e^{-2t+0+0+\frac{0}{1}(-2-0)}-e^{0t+0+0+\frac{0}{1}(0--2)})\cdot u(t+\frac{0}{1}+\frac{0}{1}) \\ &=\frac{-1}{2}(e^{-2t}-1)\cdot u(t) \\ &=\frac{1}{2}(1-e^{-2t})\cdot u(t) \end{align} $

Definition of Sampling Theorem

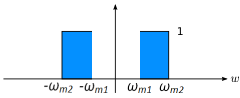

A band-limited signal can be recovered by sampling if the sampling frequency $ \omega_s $ is greater than $ 2\omega_m $, where $ \omega_m $ is the cut-off frequency. $ T = \frac{2\pi}{\omega_s} $

Received $ \frac{7}{10} $ because didn't specify cut-off frequency of what and should have used "recovered from sampling" instead of "recovered by sampling."

Fourier Series

Coefficient LTI Transfer

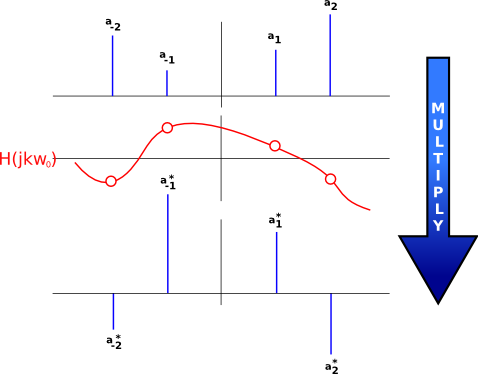

When transferring coefficients of a fourier series through an LTI system, each value of $ a_k\ $ is multiplied by $ H(\jmath \omega)\ $ in the system output. Therefore...

- $ \displaystyle a_k \rightarrow a_k \cdot H(\jmath \omega) $

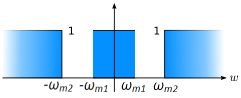

The image below illustrates the process of taking the value of the frequency response function at each frequency of the coefficients and then multiplying by that value to yield the transformed coefficient values.

Note that when $ H(\jmath \omega) $ is negative, the sign of the value of the coefficient is flipped.

Types of Filters

Types of Filters

Low Pass

Low Pass

High Pass

High Pass

Duality

I found something interesting when you use duality on the same transform pair over and over... Note: I can not find a way to display a proper fourier symbol, so I went with the "\displaystyle {\bf F}" as seen below.

Later note: I found the fraktur typeface looks kind of like a script F, which is "\mathfrak {F}", instead of above. --Mike Walker

- $ (1)\; \mathfrak {F} (e^{-at}u(t))=\frac{1}{a+j\omega} $

By Duality of (1):

- $ (2)\; \mathfrak {F} (\frac{1}{a+jt})=2\pi e^{a\omega}u(\omega) $

By Duality of (2) (and interestingly Time Reversal of (1)):

- $ (3)\; \mathfrak {F} (2\pi e^{at}u(-t))=\frac{2\pi}{a-j\omega} $

By Linearity of (3) the tex:2\pi divides out of both sides:

- $ (4)\; \mathfrak {F} (e^{at}u(-t))=\frac{1}{a-j\omega} $

By Duality of (4) (and again interestingly Time Reversal of (2)):

- $ (5)\; \mathfrak {F} (\frac{1}{a-jt})=2\pi e^{-a\omega}u(-\omega) $

By Duality of (5) we see that we get back to (1).

If this is done in a more general since, it becomes clear that it is only necessary to take the dual of a Fourier Transform Pair once. After taking the dual once, one might as well use time reversal. Taking the dual four times will always result in the original pair again after the extra $ 2\pi \ $'s are divided out.

Fourier Transform Table

| Time Domain | Fourier Domain |

|---|---|

| $ x(t)=\frac{1}{2 \pi} \int_{-\infty}^{\infty} X(j \omega)e^{j \omega t}d \omega $ | $ X(j \omega)=\int_{-\infty}^\infty x(t) e^{-j \omega t}d t $ |

| $ 1\ $ | $ 2 \pi \delta (\omega)\ $ |

| $ -0.5+u(t)\ $ | $ \frac{1}{j \omega}\ $ |

| $ \delta (t)\ $ | $ 1\ $ |

| $ \delta (t-c)\ $ | $ e^{-j \omega c}\ $ |

| $ u(t)\ $ | $ \pi \delta(\omega)+\frac{1}{j \omega} $ |

| $ e^{-bt}u(t)\ $ | $ \frac{1}{j \omega + b} $ |

| $ cos \omega_0 t\ $ | $ \pi [\delta ( \omega + \omega_0 ) + \delta ( \omega - \omega_0 ) ]\ $ |

| $ cos ( \omega_0 t + \theta )\ $ | $ \pi [ e^{-j \theta} \delta ( \omega + \omega_0 ) + e^{j \theta} \delta ( \omega - \omega_0 )]\ $ |

| $ sin \omega_0 t\ $ | $ j \pi [ \delta ( \omega + \omega_0 ) - \delta ( \omega - \omega_0 ) ]\ $ |

| $ sin ( \omega_0 t + \theta )\ $ | $ j \pi [ e^{-j \theta} \delta ( \omega + \omega_0 ) - e^{j \theta} \delta ( \omega - \omega_0 ) ]\ $ |

| $ rect \left ( \frac{t}{\tau} \right ) $ | $ \tau sinc \frac{\tau \omega}{2 \pi} $ |

| $ \tau sinc \frac{\tau t}{2 \pi} $ | $ 2 \pi p_\tau\ ( \omega ) $ |

| $ \left ( 1-\frac{2 |t|}{\tau} \right ) p_\tau (t) $ | $ \frac{\tau}{2} sinc^2 \frac{\tau \omega}{4 \pi} $ |

| $ \frac{\tau}{2} sinc^2 \left ( \frac{\tau t}{4 \pi} \right ) $ | $ 2 \pi \left ( 1-\frac{2|\omega|}{\tau} \right ) p_\tau (\omega) $ |

Notes:

- $ sinc(x) = \frac {sin(x)}{x} $

- $ p_\tau (t)\ $ is the rectangular pulse function of width $ \tau\ $

Source courtesy Wikibooks.org

Convergence of Fourier Transforms

Consider $ X(j\omega)\ $ evaluated according to Equation 4.9:

- $ X(j\omega) = \int_{-\infty}^\infty x(t)e^{-j \omega t} dt $

and let $ x(t)\ $ denote the signal obtained by using $ X(j\omega)\ $ in the right hand side of Equation 4.8:

- $ x(t) = (1/(2\pi)) \int_{-\infty}^\infty X(j\omega)e^{j \omega t} d\omega $

If $ x(t)\ $ has finite energy, i.e., if it is square integrable so that Equation 4.11 holds:

- $ \int_{-\infty}^\infty |x(t)|^2 dt < \infty $

then it is guaranteed that $ X(j\omega)\ $ is finite, i.e, Equation 4.9 converges.

Let $ e(t)\ $ denote the error between $ \hat{x}(t)\ $ and $ x(t)\ $, i.e. $ e(t)=\hat{x}(t) - x(t)\ $, then Equation 4.12 follows:

- $ \int_{-\infty}^\infty |e(t)|^2 dt = 0 $

Thus if $ x(t)\ $ has finite energy, then although $ x(t)\ $ and $ \hat{x}(t)\ $ may differ significantly at individual values of $ t\ $, there is no energy in their difference.

comments:

this is not clear --mireille.boutin.1, Fri, 12 Oct 2007 16:23:04

why does Equation 4.12 follow???? Can somebody explain?

Sifting Property

So, what is this sifting property Mimi keeps mentioning?

- In case you were wondering, and you probably were not, the sifting property Mimi referenced in class was mentioned (only briefly in my class) in ECE 202.

- Formally, it is a property obeyed by the Dirac delta function as such:

- $ \int_{-\infty}^{\infty}f(\tau)\delta(t-\tau)d\tau=f(t) $

Student 1

- Ok, that's all well and good, but why?

Fifth year senior

- What this does is it allows us to pick, or 'sift' out, hence the name, a particular value of the function in the integral at an exact instant in time.

Student 1

- Doesn't the function do that by itself outside of the integral anyways?

Fifth year senior

- Good, question, I'm glad I paid you to ask it. One of its uses is in helping develop and understand the idea of convolution.

Student 1:

- ...

Fifth year senior

- Ok, so we know that a LTI system can be completely described by it's unit impulse response, but why is that? The sifting property shows us mathematically that any function, say a C.T. or D.T. signal, can be described as the sum of an infinite (or finite for most D.T. cases) number of shifted and scaled impulses. Knowing this, is makes sense that if we know what the system will do to a single impulse, and we know that the signal can be broken up into single impulses, we can then apply the 'effect' of the system to each individual impulse of the signal, sum them, and find the resulting output.

Student 1

- So all we need to know to find the output of a LTI system is its input and its response to an impulse function?

Fifth year senior

- Sounds simple, doesn't it. I hope you like algebra...

Difference Between Fourier and Laplace

I'm sure this is a question that many other people have, so here's the answer.

I asked Mimi this some time ago, and essentially, the Fourier transform is a special case of the Laplace transform. If you recall, the Laplace transform uses the variable 's', which equals a + jb (for a,b real), and thus has both a real and imaginary part. The key difference is that the Fourier transform has no real part, it is purely imaginary, so the 'a' would be zero.

As for the mathematical definitions of each, the limits on the Fourier transform go from negative infinity to positive infinity, while for the (one-sided) Laplace transform, they go from zero to positive infinity.

Laplace (One-Sided)

- $ \mathfrak {L}(s)=\int_{0}^{\infty} f(t)e^{-st} dt $

Fourier

- $ \mathfrak {F}(\omega)=\int_{-\infty}^{\infty} f(t)e^{-j{\omega}t} dt $

Thanks --heather.r.barrett.1, Fri, 26 Oct 2007 17:01:45

I was wondering about that, too. Now I can sleep at night again. =P

- The easiest way to think about this is that the Fourier transform is the Laplace transform evaluated at $ s = j \omega\ $. Any signal that has a Fourier transform has a Laplace transform, but not conversely. When evaluating the Region of Convergence (ROC) of the Laplace transform, the signal has a Fourier transform if:

- CT: The ROC includes the imaginary axis, x = 0, in the complex plane.

- DT: The ROC includes the unit circle, z = 1, in the complex plane.

Common Questions on FS/FT Answered by Mimi

I just had a few question about FS and FT and whatnot.

1. Is the Fourier Transform for Aperiodic Signals Only?

- No. They are for periodic signals also. The formula for transforming a periodic signal is (I believe) the first one on the table.

2. Are Fourier Series for Periodic Signals Only?

- YES.

3. We are not supposed to use the Fourier Transform of complex exponentials. Does this mean that we shouldn't take the Fourier Transform of periodic signals?

- Correction: you are not suppose to integrate to compute the Fourier Transform of complex exponentials. Yes you do: but you either use a table, or you guess the answer and go backward.

4. I think I'm confusing the Fourier Series and Fourier Transform in general. Whats the difference?

- (Answering Questions 1 and 2 might help me understand this)

- Fourier Series is for periodic signals. It represents signals as a summation of complex exponentials.

- Fourier Transform is for all signal. It represents signals as an integral of complex exponentials.

Guide to Partial Fraction Expansion

A guide to Partial Fraction Expansion

This page is meant as a comprehensive review of partial fraction expansion. Partial fraction expansion allows us to fit functions to the known ones given by the known Fourier Transform pairs table.

First, the denominator must be of a higher degree than the numerator. If this is not the case, then perform long division to make it such. Note: for the remainder of this guide it is assumed that the denominator is of a higher degree than the numerator. There are four cases that arise which one must consider:

Case 1 : Denominator is a product of distinct linear factors.

- $ \frac{(polynomial)}{(a_1x+b_1)(a_2x+b_2)\cdots(a_kx+b_k)}=\frac{A_1}{a_1x+b_1}+\frac{A_2}{a_2x+b_2}+\cdots+\frac{A_k}{a_kx+b_k} $

Case 2 : Denominator is a product of linear factors, some of which are repeated.

- $ \frac{(polynomial)}{(a_1x+b_1)^r}=\frac{A_1}{a_1x+b_1}+\frac{A_2}{(a_1x+b_1)^2}+\cdots+\frac{A_r}{(a_1x+b_1)^r} $

Case 3 : Denominator contains irreducible quadratic factors, none of which is repeated.

- $ \frac{(polynomial)}{ax^2+bx+c}=\frac{Ax+B}{ax^2+bx+c} $

Case 4 : Denominator contains a repeated irreducible quadratic factor.

- $ \frac{(polynomial)}{(ax^2+bx+c)^r}=\frac{A_1x+B_1}{ax^2+bx+c}+\frac{A_2x+B_2}{(ax^2+bx+c)^2}+\cdots+\frac{A_rx+B_r}{(ax^2+bx+c)^r} $

Example encompassing all of the above cases:

- $ \frac {(polynomial degree < 10)}{x(x-1)(x^2+x+1)(x^2+1)^3} = \frac {A}{x} + \frac {B}{x^2+x+1} + \frac {Cx+D}{x^2+1} + \frac {Ex+F}{(x^2+1)^2} + \frac {Gx+H}{(x^2+1)^2} + \frac {Ix+J}{(x^2+1)^3} $

General method to find out what the Capital Letters equal:

The most general method is to multiply both sides by the left hand side's denominator. This clears all fractions. Then, expand and collect terms on the right hand side. Equate the right and left hand sides' coefficients. This leads to a system of linear equations which can be solved for obtaining the Capital Letters. Tricks to obtaining the Capital Letters quickly: Capital Letters whose denominator is the highest power of its kind can be found directly as follows:

First, multiply both sides by its denominator.

Second, find the value of x which would make the denominator equal 0.

Third, evaluate the equation for that value of x. This leaves you with the Capital Letter on the right and its value on the left. Example of finding Capital Letters:

- $ \frac{4x}{(x-1)^2(x+1)}=\frac{A}{x-1}+\frac{B}{(x-1)^2}+\frac{C}{x+1} $

The above trick can be used to find B and C but not A.

- $ B=\frac{4x}{x+1}\;(evaluated\;at\;x=1)=\frac{4}{2}=2 $

- $ C=\frac{4x}{(x-1)^2}\;(evaluated\;at\;x=-1)=\frac{-4}{4}=-1 $

A is then found using the general method given above.

- $ \begin{align} 4x&=A(x-1)(x+1)+B(x+1)+C(x-1)^2 \\ &=A(x-1)(x+1)+2(x+1)-(x-1)^2 \\ &=(A-1)x^2+4x+(-A+1) \end{align} $

- $ A-1=0 \rightarrow A=1 $

Note: The four cases for finding the form of the partial fraction expansion as well as the general method of finding the capital letters were adapted from section 7.4 in Calculus Early Transcendentals, 5e. (Our Calc I, II, & III book.) The tricks to obtaining the capital letters quickly are from learning to do the Laplace Transform in ECE 202.

comments:

... --tom.l.moffitt.1, Thu, 18 Oct 2007 22:43:05 -0400 reply Thank you. I've been looking for this for a while.

[[ECE 301 Fall 2007 mboutin Joseph Fourier|Mr. Fourier]

Sources:

1. Wikipedia: [2] 2. scienceworld.wolfram.com

Jean Baptiste Joseph Fourier (1768 - 1830)

French mathematician who discovered that any periodic motion can be written as a superposition of sinusoidal and cosinusoidal vibrations.Fourier transform is named in honor of him. He developed a mathematical theory of heat in Théorie Analytique de la Chaleur (Analytic Theory of Heat), (1822), discussing it in terms of differential equations.

Fourier was a friend and advisor of Napoleon. Fourier believed that his health would be improved by wrapping himself up in blankets, and in this state he tripped down the stairs in his house and killed himself. The paper of Galois which he had taken home to read shortly before his death was never recovered.

Fourier is credited with the discovery in 1824 that gases in the atmosphere might increase the surface temperature of the Earth. This was the effect that would later be called the greenhouse effect. He established the concept of planetary energy balance - that planets obtain energy from a number of sources that cause temperature increase. Planets also lose energy by infrared radiation (that Fourier called "chaleur obscure" or "dark heat") with the rate increasing with temperature. A balance is reached between heat gain and heat loss; the atmosphere shifts the balance toward the higher temperatures by slowing the heat loss. Although Fourier understood that rate of infrared radiation increases with temperature, the Stefan-Boltzmann law which gives the exact form of this dependency (a fourth-power law) was discovered fifty years later.

Fourier recognized that Earth primarily gets energy from Solar radiation, to which the atmosphere is transparent, and that geothermal heat doesn't contribute much to the energy balance. However, he mistakenly believed that there is a significant contribution of radiation from interplanetary space.

Fourier referred to an experiment by M. de Saussure, who exposed a black box to sunlight. When a thin sheet of glass is put on top of the box, the temperature inside of the box increases. Infrared radiation was discovered by William Herschel twenty five years later.

comments:

PLAGIARISM --mireille.boutin.1, Tue, 16 Oct 2007 22:54:03 Copying a webpage from somewhere else with small modifications without mentioning the source constitutes plagiarism. This will not be tolerated on the kiwi.

Geometric Series Note

The Geometric Series formulas below still hold for $ \alpha\ $'s containing complex exponentials.

For k from 0 to n, where $ \alpha \ne 1 $:

- $ \sum_{k=0}^{n} \alpha^k = \frac{1-\alpha^{n+1}}{1-\alpha} $

- (else, = n + 1)

For k from 0 to $ \infty\ $, where $ \alpha < 1\ $:

- $ \sum_{k=0}^\infty \alpha^k = \frac{1}{1-\alpha} $

- (else it diverges)

Example: We want to evaluate the following:

- $ \sum_{k=0}^\infty (\frac{1}{2})^k e^{-j \omega k}= \sum_{k=0}^\infty (\frac{1}{2}e^{-j\omega})^k = \frac{1}{1-\frac{1}{2}e^{-j\omega}} $

In this case, $ \alpha=\frac{1}{2}e^{-j\omega} $ in the above Geometric Series formula.

Meaning of Fourier Transform

Oppenheim-Willsky

- "In Chapter 3, we developed a representation of periodic singals as linear combinations of complex exponentials.. Whereas for periodic signals the complex exponential building blocks are harmonically related, for aperiodic singals they are infinitesimally close in frequency, and the resulting spectrum of coefficients in this representation is called the Fourier Transform.... In particular, Fourier reasoned that an aperiodic signal can be viewed as a periodic singal with an infinite period." An example of choosing the decision (this is a link)!!##!!

Wikipedia [3]

- "Loosely speaking, the Fourier transform decomposes a function into a continuous spectrum of its frequency components, and the inverse transform synthesizes a function from its spectrum of frequency components. A useful analogy is the relationship between a set of notes in musical notation (the frequency components) and the sound of the musical chord represented by these notes (the function/signal itself).

- Using physical terminology, the Fourier transform of a signal x(t) can be thought of as a representation of a signal in the "frequency domain"; i.e. how much each frequency contributes to the signal. This is similar to the basic idea of the various other Fourier transforms including the Fourier series of a periodic function."

My Definition

- When thinking about the Fourier Transform, I like to think about the analogy of a musical chord given by Wikipedia. A chord of music can be represented as our function x(t). Taking the Fourier transform of x(t) breaks the chord down into the frequency components. The inverse of the Fourier Transform then "sums" the frequency components to form the function x(t) or the musical chord. The difference between the Fourier Series and Fourier Transform is that a Fourier Series can only be applied to periodic functions which can be broken into a finite number of frequency components. The Fourier Transform applies to aperiodic functions and breaks the function into as infinite number of infinitesimally close frequency components using the integral.

The Human Perspective

- The cool thing is that humans (and other animals) do Fourier Transforms all the time, without having to deal with the underlying mathematics. When you hear sounds, you hear tones (which is just the "frequency" abstracted out of the to-and-fro motions of your ear-drum). When you see, you see colors, which are frequency components in the light spectrum. How our sensory organs work as "analog computers" is itself a fascinating topic to pursue.

[1] Wikipedia - Fourier Transform

Multidimension Fourier Transform

The Fourier Transform can be extended to multidimensions of space. This extension is quite simple to do, as all it involves is integrating with respect to each dimension for CT signals, and performing a summation for each dimension in a DT signal.

For example, the single dimension Fourier Transform only integrates with respect to x:

- $ F(\omega) = \int_{-\infty}^{\infty} f(x)e^{-2\pi j\omega x} dx $

If our two-dimensional function depends on x and y ( f(x,y)), then the two dimensional Fourier Transform can be represented as:

- $ F(u,v) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f(x,y)e^{-2\pi j(ux + vy)}\,dx,dy $

The variables u and v represent the omega for each direction respectively.

For DT Signals, the jump to multiple dimensions is basically the same. With one dimension, our function only depended on n, (x[n]). The Fourier Transform was then a summation with respect to n and was thus represented as:

- $ F[\omega] = \frac{1}{N}\sum_{x=0}^{N-1} f[x]e^{-2\pi j\omega x/N} $

If the two-dimensional function depends on variable n and m. (x[n,m]) , the Fourier Transform then becomes a double summation:

- $ F[u,v] = \frac{1}{M}\sum_{y=0}^{M-1}\left( \frac{1}{N}\sum_{x=0}^{N-1} f[x,y]e^{-2\pi ju x/N} \right)e^{-2\pi jvy/M} $

Again, The variables u and v represent the omega for each direction respectively, meaning there is a seperate omega for each variable in the original function.

Sources:

- http://www.cg.tuwien.ac.at/~theussl/DA/node21.html

- http://fourier.eng.hmc.edu/e101/lectures/Image_Processing/node6.html

Even/Odd Fourier Coefficients

Let $ x[n]\ $ be a real periodic sequence with fundamental period $ N_0\ $ and Fourier coefficients $ c_k = a_k+jb_k\ $, where $ a_k\ $ and $ b_k\ $ are both real.

Show that $ a_{-k} = {a}_{k}\ $ and $ b_{-k}=-b_k\ $.

If $ x[n]\ $ is real we have (equation for Fourier coefficients):

- $ c_{-k} = \frac{1}{N_0} \sum_{n=0}^{N_0-1} x[n]{e}^{jk \omega_0 n} $

and further:

- $ = \left ( \frac{1}{N_0} \sum_{n=0}^{N_0-1} x[n]e^{-jk \omega_0 n} \right ) ^{*} = c^{*}_k $

Therefore:

- $ c_{-k} = a_{-k} + jb_{-k} =(a_k + b_k)^{*} = a_k - jb_k\ $

So now we can see that:

- $ a_{-k} = a_k\ $ and $ b_{-k} = -b_k\ $

Sampling Theorem

The Sampling Theorem

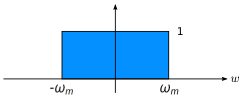

The sampling theorem tells us that we can perfectly reconstruct a signal if the following two conditions are observed:

- The signal has a finite bandwidth B. (meaning the signal is band limited)

- The signal has been sampled at a rate that is greater than 2*B (called the Nyquist rate), in other words, more than twice per period of the highest frequency component.

Lets look at each of these requirements more closely:

1. The signal is band limited.

- A band limited signal has a finite highest frequency component such that no other content exists in the signal at a higher frequency. If you take the Fourier transform of a signal and can find a point at which the signal is equal to zero at the point and all points after, it is band limited with a bandwidth equal to the frequency corresponding to that point.

- Below is a Fourier transform of a signal, you can see that is is bandlimited because it has a point equal to zero and stays at zero for all frequencies higher than it. This point is labeled B on the graph and is the signals Bandwidth.

2. You must sample at a rate greater than the Nyquist rate**.

- I believe there may have been some confusion in class, I think the substitute said that you may sample at equal to or greater than the Nyquist rate. This is wrong, you must sample at a rate strictly greater than the Nyquist rate otherwise aliasing occurs.

- To see why, look at the figure below, which is sampled at exactly twice per period, or the Nyquist rate for the signal:

- As you can see, in this case, we have shown three different possible sinusoids that fit the sampled data, and it is ambiguous as to which of them was the signal that was sampled. Also, I do not have a pretty graph for this, but image if you shifted the samples forward by 90 degrees, you would be sampling every time the green waveform crosses zero, and it would look like the waveform was a constant function of 0.

Below are some programs that you can play around with to observe what happens when either criterion is broken.

To get a 'feel' for why you must exceed the Nyquist rate, I really recommend you play around with some applets and really understand visually how and why aliasing occurs in under-sampled signals.

This is a simple program that lets you play around with a signal, sample it at different intervals, and see what the reconstructed wave looks like using a zero order hold plot. Try to find the Nyquist rate and sample both below and above it to see what happens. Also, try to find a sampling interval that produces a nice looking alias. (easiest using a simple sine wave)

Alternately, if you do not want to unzip and run a .exe on your machine, here is a more complicated version that runs in your browser as a java applet, it is at the bottom of the page.

Another decent applet to play with:

Sampling Theorem Def.

Before delving into the true comprehensive definition of the sampling theorem, I will first discuss an INAPPROPRIATE definition of the sampling theorem.

For example, if one were to have wrote, "The sampling theorem specifies that to properly recreate a signal (without aliasing) you must sample at a frequency above the Nyquist rate. (Nyquist rate= $ 2 \omega_m\ $)" on the third midterm exam they would have merited only 5 points out of a possible ten. Since the proper definition should be easily understood be the common layman, one MUST specify EXACTLY what $ \omega_m\ $ is. It is also very important to note that the minute difference between should and must cost this person an entire point on their exam. In fact, for some unique signals, it is possible (though not probable) to actually be able to reconstruct a signal when sampling at frequencies less than the Nyquist rate. Finally, this definition is not correct because it does not specify from what the signal is being recreated. If the signal is for example not band-limited, it cannot be reconstructed at all.

Wow, this individual must be pretty thick-headed to have studied this material for so long and only achieve a failing grade (50%) on the most straight forward question on the exam. If he had only assumed that the audience he was writing the theorem for was completely uneducated (rather than the our esteemed professor) he may have been able to do a little better on this question.

If he was thinking clearly earlier, he may have written something like this:

- The sampling theorem specifies that if the original signal is continuous (which means that it is valid at all real values of time) and band-limited (which means that the Fourier transform of the signal is zero for frequencies above the absolute value of any real frequency) that if you sample it at a frequency greater than the Nyquist rate (which is equal to two times the absolute value of the frequency at which the Fourier transform of the signal is zero for values greater than said frequency) you are guaranteed to be able to successful reconstruct the signal without aliasing (which is what happens when you mess up!!). However, it is important (in the opinion of some) to note that when sampling at frequencies less than the Nyquist rate in rare cases you are able to properly reconstruct the signal.

Additional Definition --andrew.c.daniel.1, Thu, 06 Dec 2007 23:02:19

Sampling Theorem: The sampling theorem states that a band-limited signal is able to be completely recovered from it's samples as long as the frequency it is sampled at exceeds the Nyquist rate, $ 2 \omega_m\ $, where $ \omega_m\ $ is the frequency where the Fourier transform of the signal equals zero for all frequencies greater than $ \omega_m\ $.

Additional Definition --corey.e.zahner.1, Fri, 07 Dec 2007 13:36:43

Sampling Theorem: Given a signal $ x(t)\ $, it can be uniquely reconstructed from its transform $ X(j \omega)\ $, if $ \omega_s > 2 \omega_m\ $, where $ \omega_s\ $ is the sampling frequency and $ |\omega_m|\ $ is the point above which $ X(\omega) = 0\ $

This definition is worth 0/10 points and a frowny face. The word transform should be changed to sampling to get any points

... --matthew.a.harger.1, Sat, 08 Dec 2007 19:43:45

Don't Know what I'm doing, so my def goes here.

Sampling Theorem: The sampling theorem states that given a signal $ x(t)\ $, the signal is sampled with shifted deltas by some period T. [$ x(t)\ $ multiplied by $ \sum_{-\infty}^{\infty} \delta (t-nT) $. However, the sampling frequency must be at least twice the maximum signal frequency or aliasing occurs and it can't be guaranteed the original signal can be recovered.

4/10 -- Grading comments as follows:

- >> "No. It states that a signal can be recovered from its samples provided two conditions are met."

- >> Should have added "Band Limited" to definition >> Must be "Greater Than" twice the maximum signal frequency >> Aliasing "May" occur