Note: Most tree growing methods favor greatest impurity reduction near the root node.

Ex.

To assign category to a leaf node.

Easy!

If sample data is pure

-> assign this class to leaf.

else

-> assign the most frequent class.

Note: Problem of building decision tree is "ill-conditioned"

i.e. small variance in the training data can yield large variations in decision rules obtained.

Ex. p.405(D&H)

A small move of one sample data can change the decision rules a lot.

Reference about clustering

"Data clustering, a review," A.K. Jain, M.N. Murty, P.J. Flynn[1]

"Algorithms for clustering data," A.K. Jain, R.C. Dibes[2]

"Support vector clustering," Ben-Hur, Horn, Siegelmann, Vapnik [3]

"Dynamic cluster formation using level set methods," Yip, Ding, Chan[4]

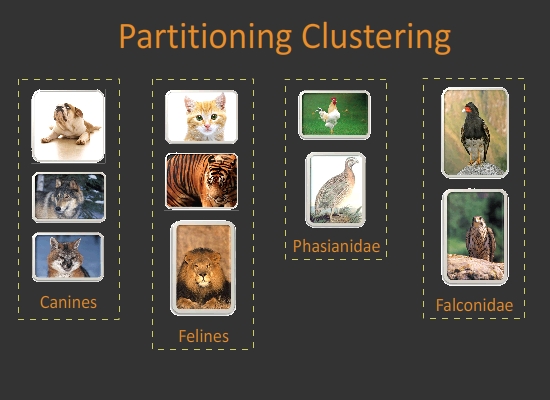

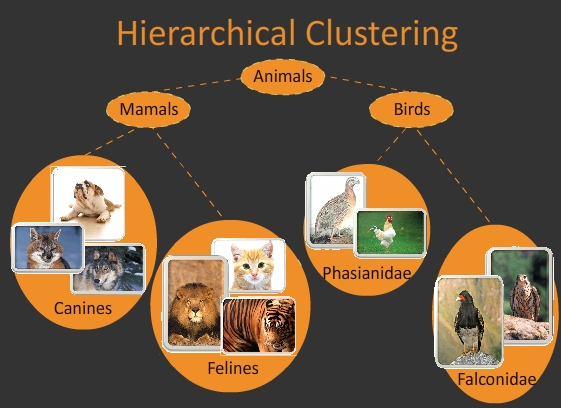

What is clustering?

The task of finding "natural " groupings in a data set.

Synonymons="unsupervised learning"

Clustering as a useful technique for searching in databases

Clustering can be used to construct an index for a large dataset to be searched quickly.

- Definition: An index is a data structure that enables sub-linear time look up.

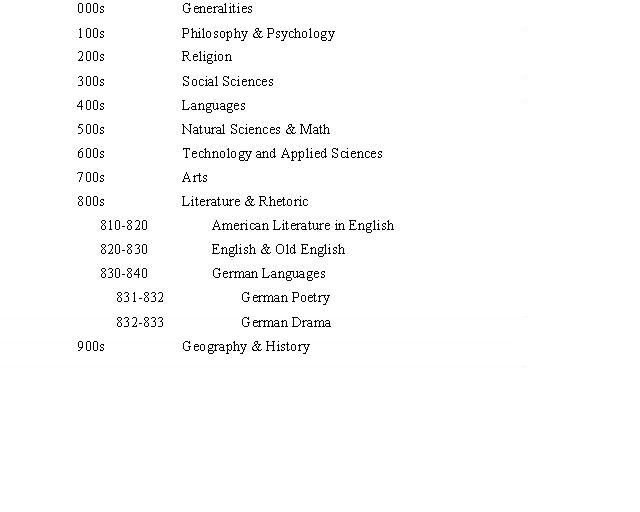

- Example: Dewey system to index books in a library

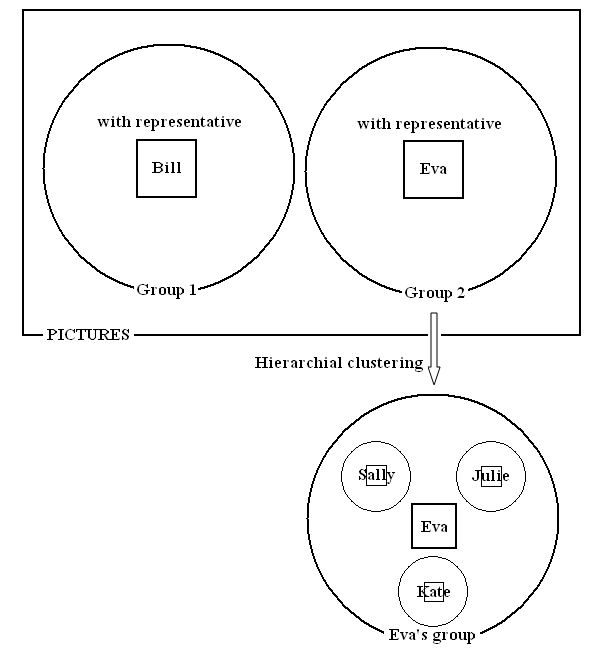

- Example of Index: Face Recognition

- need face images with label

- must cluster to obtain sub-linear search time

- Search will be faster because of $ \bigtriangleup $ inequality.

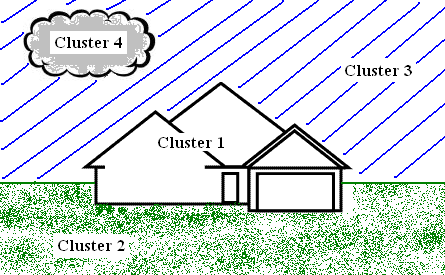

- Example: Image segmentation is a clustering problem

- dataset = pixels in image - each cluster is an object in image

Input to a clustering algorithm is either - distances between each pairs of objects in dataset - feature vectors for each object in dataset