K-Nearest Neighbors Density Estimation

A slecture by CIT student Raj Praveen Selvaraj

Partly based on the ECE662 Spring 2014 lecture material of Prof. Mireille Boutin.

Introduction

This slecture discusses the K-Nearest Neighbors (k-NN) approach to estimating the density of a given distribution. The k-NN approach is very popular in signal and image processing for clustering and classification of patterns. It is a non-parametric density estimation technique that lets the region volume be a function of the training data. We first discuss the basic principle behind the k-NN approach to estimating the density at a point x, and then move on to building a classifier using the k-NN density estimate.

Basic Principle

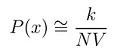

The general formulation for density estimation states that, for N observations $ x_1, x_2, x_3, \ldots, x_N $, the density at a point x can be approximated by the following function,

$ \rho(x) \simeq \frac{k}{NV} $

where V is the volume of some neighborhood (say A) around x and k denotes the number of observations contained within that neighborhood.
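As a rough illustration of this approximation (the count k divided by N times the volume V), the sketch below works in one dimension. It assumes the neighborhood A is the interval [x - h, x + h], so that V = 2h. The function name `fixed_region_density`, the half-width `h`, and the sample values are hypothetical and chosen only for illustration; they are not part of the lecture material.

```python
def fixed_region_density(samples, x, h):
    """Estimate the density at x as k / (N * V) for a fixed 1-D neighborhood.

    Assumption: the neighborhood A is the interval [x - h, x + h],
    so its volume (length) is V = 2 * h.
    """
    n = len(samples)
    volume = 2.0 * h
    # k = number of observations that fall inside the neighborhood
    k = sum(1 for s in samples if abs(s - x) <= h)
    return k / (n * volume)


# Hypothetical observations, chosen only for illustration
samples = [0.1, 0.4, 0.5, 0.9, 1.3, 2.0, 2.2, 3.5]
estimate = fixed_region_density(samples, x=0.5, h=0.5)
```

Here the volume is fixed in advance and k is whatever count the data produce; the k-NN approach reverses these roles by fixing k and letting the data determine V.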

The basic idea of k-NN is to extend the neighborhood until the k nearest observations are included. If we take the neighborhood around x to be a sphere, then for the given N observations we pick an integer,

$ k \ge 2 $
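The idea of growing a sphere around x until it contains the k nearest observations can be sketched as follows. This is a minimal sketch rather than a definitive implementation: it assumes Euclidean distance, uses the standard volume formula for a d-dimensional sphere, and plugs the resulting volume into the estimate k/(NV). The function name `knn_density` and the sample points are hypothetical.

```python
import math


def knn_density(samples, x, k):
    """k-NN density estimate at x, computed as k / (N * V_k).

    V_k is the volume of the smallest sphere centered at x that
    contains the k nearest observations (Euclidean distance assumed).
    """
    n = len(samples)
    d = len(x)
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= N")
    # Distances from x to every observation, sorted in ascending order
    distances = sorted(math.dist(x, s) for s in samples)
    radius = distances[k - 1]  # distance to the k-th nearest neighbor
    if radius == 0.0:
        raise ValueError("k-th neighbor coincides with x; volume is zero")
    # Volume of a d-dimensional sphere with the given radius
    volume = math.pi ** (d / 2) / math.gamma(d / 2 + 1) * radius ** d
    return k / (n * volume)


# Hypothetical 2-D observations, chosen only for illustration
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 0.0), (0.0, 4.0)]
estimate = knn_density(points, x=(0.0, 0.0), k=3)
```

In this example the third nearest observation lies at distance 2 from x, so the sphere (a disc in two dimensions) has area 4π and the estimate is 3/(5 · 4π). Note that the sphere shrinks where observations are dense and grows where they are sparse, which is how the region volume becomes a function of the training data.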

Questions and comments

If you have any questions, comments, etc. please post them on this page.