An Implementation of Sobel Edge Detection

by Sean Sodha

Introduction

Edge Detection uses matrix math to locate the areas of an image where the intensity changes sharply. Once all of the large differences in intensity have been found, we have located all of the edges in the picture. Sobel Edge Detection is a widely used form of image processing. Along with Canny and Prewitt, Sobel is one of the most popular edge detection algorithms in use today.

The Math Behind the Algorithm

When using Sobel Edge Detection, the image is first processed in the X and Y directions separately, and the two results are then combined into a new image that represents the sum of the X and Y edges. However, the two directional images can also be examined on their own. That will be covered later in this document.

When using a Sobel Edge Detector, it is best to first convert the image from RGB to grayscale. From there, we use what is called kernel convolution. A kernel is a 3 x 3 matrix of weighted entries (the weights may differ or be symmetric). It represents the filter that we will apply for edge detection.

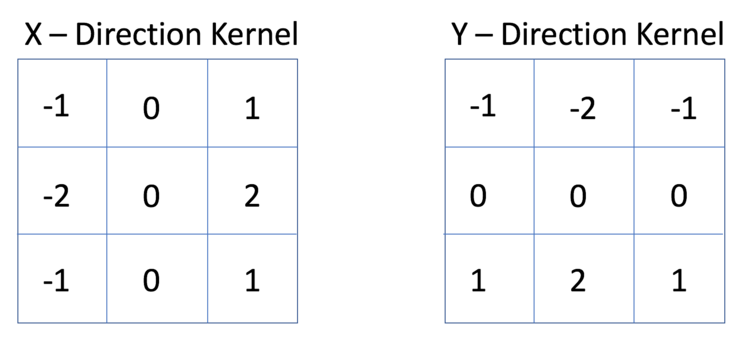

For example, when we want to scan across the X direction of an image, we use the following X Direction Kernel to look for large changes in the gradient. Similarly, when we want to scan across the Y direction of an image, we use the following Y Direction Kernel to look for large gradients.

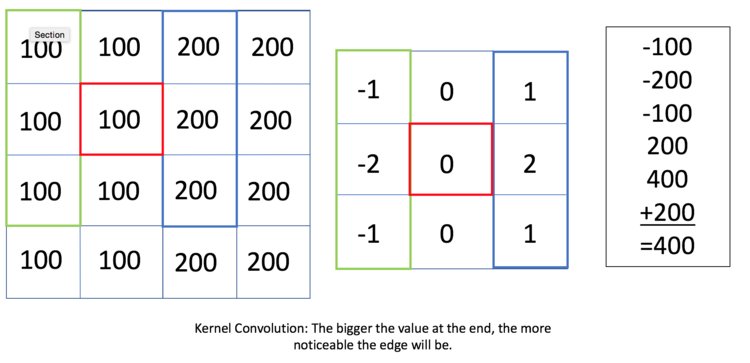
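As a rough illustration of what applying a kernel means, the sketch below writes out the standard Sobel kernels and computes the weighted sum for a single pixel. The sign convention of the kernels in the figure may differ, and the names `SOBEL_X`, `SOBEL_Y`, and `apply_kernel` are hypothetical, chosen only for this example.

```python
# Standard 3 x 3 Sobel kernels (sign conventions vary between references).
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]
SOBEL_Y = [
    [-1, -2, -1],
    [0, 0, 0],
    [1, 2, 1],
]


def apply_kernel(image, kernel, row, col):
    """Weighted sum of the 3 x 3 neighborhood centered on (row, col).

    `image` is a list of rows of grayscale intensities. The kernel is
    applied without flipping, which for Sobel only affects the sign.
    """
    total = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            total += kernel[dr + 1][dc + 1] * image[row + dr][col + dc]
    return total


# A flat region has no intensity change, so both responses are zero.
flat = [[50, 50, 50] for _ in range(3)]
print(apply_kernel(flat, SOBEL_X, 1, 1))  # 0
print(apply_kernel(flat, SOBEL_Y, 1, 1))  # 0
```

Because the weights in each kernel sum to zero, any region of constant intensity produces no response; only a change across the neighborhood does.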

Using Kernel Convolution, we can see in the example image below that there is an edge between the columns of 100 and 200 values.

This Kernel Convolution is an example of using the X Direction Kernel. If the image were scanned from left to right, then with the filter centered at (2,2) in the image above, the result would be 400, which indicates a fairly prominent edge at that point. If a user wanted to exaggerate the edge, the user would need to change the filter values of -2 and 2 to a higher magnitude, perhaps -5 and 5. This would make the gradient of the edge larger and therefore more noticeable.

Once the image is processed in the X direction, we can then process it in the Y direction. The magnitudes of the X and Y responses are then added together to produce a final image showing all edges in the image. This will be discussed in the next section.
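The arithmetic behind the value of 400 can be checked with a few lines of Python. The grid below is a hypothetical stand-in for the example figure (a column of 100s next to a column of 200s), and `response_at` is a helper name invented for this sketch. Indices here are zero-based.

```python
def response_at(image, kernel, row, col):
    """Sum of kernel weights times the 3 x 3 neighborhood around (row, col)."""
    return sum(
        kernel[dr + 1][dc + 1] * image[row + dr][col + dc]
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
    )


sobel_x = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
# Same kernel with the middle-row weights raised from 2 to 5.
exaggerated_x = [[-1, 0, 1], [-5, 0, 5], [-1, 0, 1]]

# Assumed test image: intensities jump from 100 to 200 between columns.
grid = [[100, 100, 200, 200] for _ in range(4)]

# Left column contributes -(100 + 200 + 100), right column +(200 + 400 + 200).
print(response_at(grid, sobel_x, 1, 1))        # 400
print(response_at(grid, exaggerated_x, 1, 1))  # 700
```

Raising the middle weights to -5 and 5 lifts the response at the same pixel from 400 to 700, which is the exaggeration effect described above.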
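A minimal sketch of the combination step, assuming the X and Y responses are combined as |Gx| + |Gy| and that border pixels are simply left at zero. Neither choice is stated in this document; another common option is the Euclidean form sqrt(Gx^2 + Gy^2). The function name `sobel_edges` and the clamp to 255 are assumptions made for this example.

```python
def sobel_edges(gray):
    """Return an edge map the same size as `gray`; border pixels stay 0."""
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = gy = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    pixel = gray[r + dr][c + dc]
                    gx += kx[dr + 1][dc + 1] * pixel
                    gy += ky[dr + 1][dc + 1] * pixel
            # Add the two magnitudes; clamp to 255 for 8-bit display
            # (the clamp is an assumption, not part of the original text).
            edges[r][c] = min(255, abs(gx) + abs(gy))
    return edges


# Intensities step from 10 to 40 between the second and third columns.
step = [[10, 10, 40, 40, 40] for _ in range(5)]
print(sobel_edges(step)[1])  # [0, 120, 120, 0, 0]
```

The two non-zero entries sit on either side of the step, which is where the 3 x 3 window straddles the change in intensity.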

Edge Detection Example

Now that we have gone through the mathematics of the edge detection algorithm, it is time to put it to use on a real image.

Below is the original image that was used in this project:

The first step in using Sobel Edge Detection is to convert the image to grayscale. While it is possible to use the algorithm on a standard RGB image, it is easier to implement in grayscale. Below is the grayscale image.

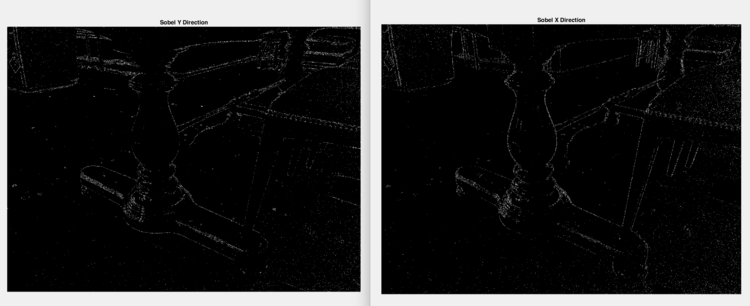

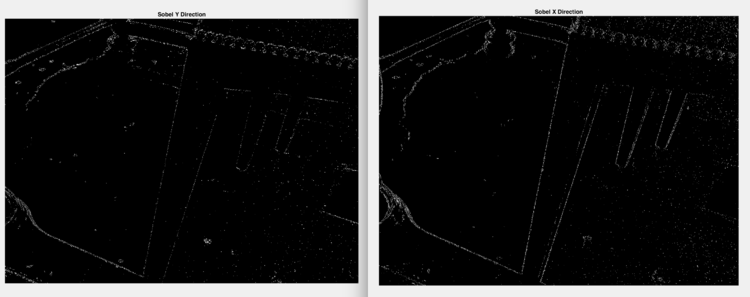
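The grayscale conversion described above can be sketched as a weighted sum of the red, green, and blue channels. This document does not say which conversion was used for this project, so the commonly used luminance weights 0.299, 0.587, and 0.114 are an assumption here, as is the function name `to_grayscale`.

```python
def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) tuples to rows of 0-255 gray levels."""
    return [
        # Weighted sum of the channels; the weights are an assumed choice.
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]


rgb = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 0, 255)],
]
print(to_grayscale(rgb))  # [[255, 0], [76, 29]]
```

With a single intensity per pixel, one 3 x 3 kernel pass per direction is enough, which is why grayscale is the easier starting point.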

First, I want to show the differences between Sobel Edge Detection in the X direction and in the Y direction individually.

As we can see, the images are fairly similar, simply because many of the edges in the image are at an angle. However, the Sobel Y Direction image does not catch much of the leg of the chair on the right. This is because the Y direction scans from top to bottom and only detects edges that run from left to right, that is, horizontal edges. The Sobel X Direction image, on the other hand, does detect the chair leg, because the image is processed from left to right using a different filter, which catches the left and right edges of the leg. The images below show this distinction.
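The chair leg behavior can be reproduced on a toy image. The sketch below uses a hypothetical bright vertical bar on a dark background as a stand-in for the leg and compares the X and Y responses along the middle row. The kernels are the standard Sobel kernels, and `response` is a helper name invented for this example.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def response(image, kernel, row, col):
    """Sum of kernel weights times the 3 x 3 neighborhood around (row, col)."""
    return sum(
        kernel[dr + 1][dc + 1] * image[row + dr][col + dc]
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
    )


# A one-pixel-wide bright vertical bar, standing in for the chair leg.
vertical_bar = [[0, 0, 255, 0, 0] for _ in range(5)]

x_row = [response(vertical_bar, SOBEL_X, 2, c) for c in (1, 2, 3)]
y_row = [response(vertical_bar, SOBEL_Y, 2, c) for c in (1, 2, 3)]
print(x_row)  # [1020, 0, -1020]
print(y_row)  # [0, 0, 0]
```

The X kernel responds strongly on the left and right sides of the bar, while the Y kernel sees identical rows above and below every pixel and returns zero throughout.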