What is a Markov chain/process? What can you do with it? And why are lots of people talking about them?

Outlines of the Project

A) What is a Markov Chain

-Stochastic process

-Definition of Markov Chain

-Concrete examples and several properties

B) What can we do with it?

-Transition Probabilities and Transition Matrix

-Computation with Transition Matrix

-Matrix limits and Markov Chains

C) Why Useful

-Applications in Real Life with examples and computation

What is a Markov chain?

To introduce the answer this question, we should first realize the definition of stochastic process, because a Markov Chain is a special type of a stochastic process.

Definition of a stochastic process:

“A sequence of random variables X1, X2… is called a stochastic process with discrete time parameter” [1]

Explanation: We may think X1, X2… as samples of an experiment. In stochastic process, they represents states of process. Clearly, X1 is called the initial state, and Xn represents the state at time n. In the introductory probability course, we are familiar with the models when X1…Xn are independently identical distributed (iid) random variables. However, note states do not necessarily have to be independently identical distributed. I think this note is especially important to eliminate confusion for first time learners in the Markov Chain.

Definition of a Markov chain

A stochastic process is a Markov Chain if, for each time n, the conditional distributions of all Xn+j given X1…Xn depend only on Xn instead of X1…Xn-1. In majority or basic cases, we consider j=1. That is, the probability distribution of random variable Xn is determined by the value from the (n-1)th state (Xn-1). This means the distribution of future states depend only on the present state, and has nothing to do with the past states.

*Note: In a Markov Chain, the value of present state only determines the “probability distribution of the future state”, it does not determine exactly the value of the future states.

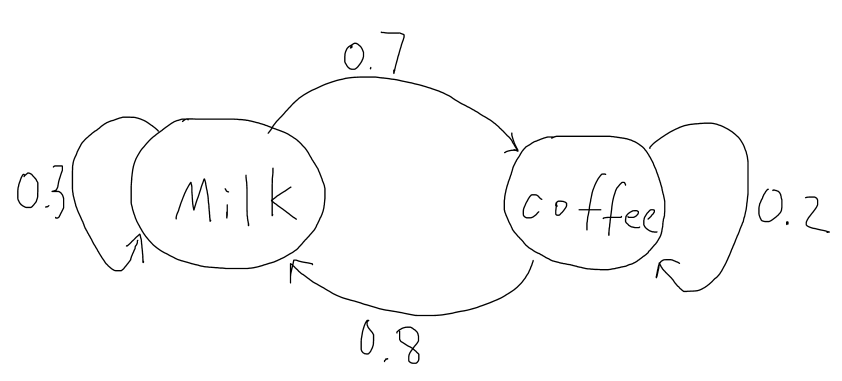

A concrete example

Let’s consider a person has two choices of drinking for his breakfast every morning, milk or coffee. We let Xi=1 if the person chooses to drink milk on the ith morning. Let Xi=2 if the person chooses to drink coffee on the ith morning. In this case, the sequence of cases (random variables) X1, X2… is a stochastic process with two possible states at each time. To make it be a Markov chain, consider people are usually preferring switching rather than repeating, and he prefers a little bit more on milk than coffee. Thus assume probability of that person choosing milk today given he chose milk yesterday is 0.3. The probability that he chooses coffee given he chose coffee yesterday is 0.2. Then this sequence of states becomes a Markov Chain.

$ P(X_{n+1}=1|X_n=1)=0.3 $, $ P(X_{n+1}=2|X_n=1)=1-0.3=0.7 $

$ P(X_{n+1}=1|X_n=2)=1-0.2=0.8 $, $ P(X_{n+1}=2|X_n=2)=0.2 $