| Line 5: | Line 5: | ||

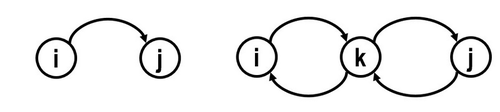

Before moving on and discovering more behaviors of Markov chains, we need to classify the properties of states first. In the previous section, we use <math>P_{ij}</math> to represent the transition probability of state changing from <math>i</math> to <math>j</math>; now, we use <math>P_{ij}^{n}</math> to denote the transition probability of state changing from <math>i</math> to <math>j</math> after <math>n</math> step. If <math>P_{ij}^{n} \geq 0</math> for all <math>n \geq 1</math>, we say state <math>j</math> is accessible from state <math>i</math>, which also can be written as <math>i \rightarrow j</math>. If <math>i \rightarrow j</math> and at the same time <math>j \rightarrow i</math>, then we say that state <math>i</math> and state <math>j</math> communicate, which can be denoted as <math>i \leftrightarrow j</math>. On the other hand, if <math>P_{ij}^{n} = 0</math> or <math>P_{ji}^{n} = 0</math>, or if both of them come into existence, we claim that state <math>i</math> and state <math>j</math> do not communicate. The diagrams below illustrate these two cases. | Before moving on and discovering more behaviors of Markov chains, we need to classify the properties of states first. In the previous section, we use <math>P_{ij}</math> to represent the transition probability of state changing from <math>i</math> to <math>j</math>; now, we use <math>P_{ij}^{n}</math> to denote the transition probability of state changing from <math>i</math> to <math>j</math> after <math>n</math> step. If <math>P_{ij}^{n} \geq 0</math> for all <math>n \geq 1</math>, we say state <math>j</math> is accessible from state <math>i</math>, which also can be written as <math>i \rightarrow j</math>. If <math>i \rightarrow j</math> and at the same time <math>j \rightarrow i</math>, then we say that state <math>i</math> and state <math>j</math> communicate, which can be denoted as <math>i \leftrightarrow j</math>. On the other hand, if <math>P_{ij}^{n} = 0</math> or <math>P_{ji}^{n} = 0</math>, or if both of them come into existence, we claim that state <math>i</math> and state <math>j</math> do not communicate. The diagrams below illustrate these two cases. | ||

| − | <center>[[File:Markovcommunicate.png|500px|thumbnail]]</center> | + | <center>[[File:Markovcommunicate.png|500px|thumbnail|Diagram courtesy of Barwe and Chen Yin]]</center> |

<center>(''i'' and ''j'' communicate) (''i'', ''j'', and ''k'' do not communicate)</center> | <center>(''i'' and ''j'' communicate) (''i'', ''j'', and ''k'' do not communicate)</center> | ||

| Line 13: | Line 13: | ||

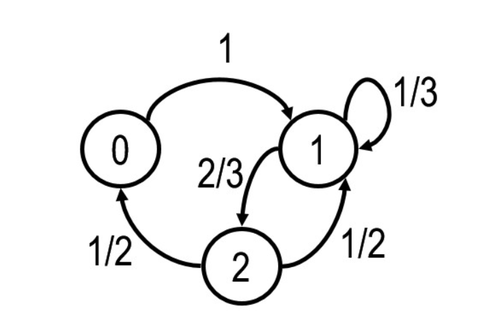

An example of the Irreducible Markov chains: | An example of the Irreducible Markov chains: | ||

| − | <center>[[File:Irreduciblemarkov.png|500px|thumbnail]]</center> | + | <center>[[File:Irreduciblemarkov.png|500px|thumbnail|Diagram courtesy of Barwe and Chen Yin]]</center> |

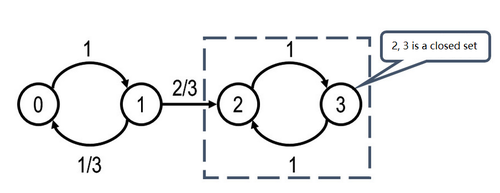

An example of reducible Markov chains: | An example of reducible Markov chains: | ||

| − | <center>[[File:reduciblemarkov1.png|500px|thumbnail]]</center> | + | <center>[[File:reduciblemarkov1.png|500px|thumbnail|Diagram courtesy of Barwe and Chen Yin]]</center> |

From the above diagram, we can see that after transiting from state 1 to state 2, the state 2 and 3 become a closed set; namely, they are never going to return to state 1; therefore, we can say it is a reducible Markov chain. | From the above diagram, we can see that after transiting from state 1 to state 2, the state 2 and 3 become a closed set; namely, they are never going to return to state 1; therefore, we can say it is a reducible Markov chain. | ||

| Line 23: | Line 23: | ||

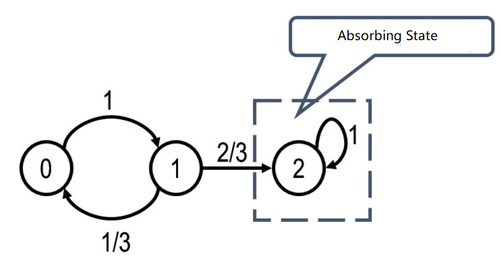

Another example of reducible Markov chain: | Another example of reducible Markov chain: | ||

| − | <center>[[File:reduciblemarkov2.png|500px|thumbnail]]</center> | + | <center>[[File:reduciblemarkov2.png|500px|thumbnail|Diagram courtesy of Barwe and Chen Yin]]</center> |

Similarly, after transiting from state 1 to 2, state 2 will never return to state 1. | Similarly, after transiting from state 1 to 2, state 2 will never return to state 1. | ||

Latest revision as of 01:57, 6 December 2020

Communication and Reducibility

Before moving on and discovering more behaviors of Markov chains, we need to classify the properties of states first. In the previous section, we use $ P_{ij} $ to represent the transition probability of state changing from $ i $ to $ j $; now, we use $ P_{ij}^{n} $ to denote the transition probability of state changing from $ i $ to $ j $ after $ n $ step. If $ P_{ij}^{n} \geq 0 $ for all $ n \geq 1 $, we say state $ j $ is accessible from state $ i $, which also can be written as $ i \rightarrow j $. If $ i \rightarrow j $ and at the same time $ j \rightarrow i $, then we say that state $ i $ and state $ j $ communicate, which can be denoted as $ i \leftrightarrow j $. On the other hand, if $ P_{ij}^{n} = 0 $ or $ P_{ji}^{n} = 0 $, or if both of them come into existence, we claim that state $ i $ and state $ j $ do not communicate. The diagrams below illustrate these two cases.

If all states of a Markov chain belong to one communication class, then that Markov chain is considered irreducible. If there are two or more communication classes, we call that Markov chain reducible.

An example of the Irreducible Markov chains:

An example of reducible Markov chains:

From the above diagram, we can see that after transiting from state 1 to state 2, the state 2 and 3 become a closed set; namely, they are never going to return to state 1; therefore, we can say it is a reducible Markov chain.

Another example of reducible Markov chain:

Similarly, after transiting from state 1 to 2, state 2 will never return to state 1.