Revision as of 17:45, 2 December 2018

Bernoulli Trials and Binomial Distribution

Thus far, we have observed how $ e $ relates to compound interest and explored its properties involving Euler's Formula and the imaginary number $ i $. In this section, we will look at a potentially mysterious appearance of $ e $ in probability and attempt to explain why it occurs. Before we do this, however, let us begin with a few definitions.

Bernoulli Trial

Earlier in the text, we briefly learned of Jacob Bernoulli and how he related $ e $ to compound interest using the limit definition $ e=\lim_{n \to \infty}\left(1+\frac1n\right)^n $. We will now observe more of Bernoulli's work in the form of the Bernoulli Trial.

A Bernoulli Trial is a discrete experiment with two possible outcomes, described as "success" or "failure" of some event $ E $. In each trial, the event $ E $ has a constant probability $ p $ of occurring. Therefore, with one trial, the probability of the event occurring once is $ P(1)=p $, and the probability of the event occurring zero times is $ P(0)=1-p $.
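As an informal illustration of this definition, the sketch below simulates many Bernoulli Trials and checks that the fraction of successes settles near $ p $. The function name `bernoulli_trial`, the value `p = 0.3`, and the fixed seed are assumptions chosen only for this example.

```python
import random

def bernoulli_trial(p, rng):
    """Return 1 (success) with probability p, otherwise 0 (failure)."""
    return 1 if rng.random() < p else 0

rng = random.Random(0)  # fixed seed so the run is repeatable (illustrative choice)
p = 0.3                 # hypothetical success probability
trials = [bernoulli_trial(p, rng) for _ in range(100_000)]

# The observed fraction of successes should be close to p.
success_fraction = sum(trials) / len(trials)
print(success_fraction)
```

With this many trials the printed fraction should typically agree with $ p $ to about two decimal places, which matches $ P(1)=p $ and $ P(0)=1-p $.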

Binomial Distribution

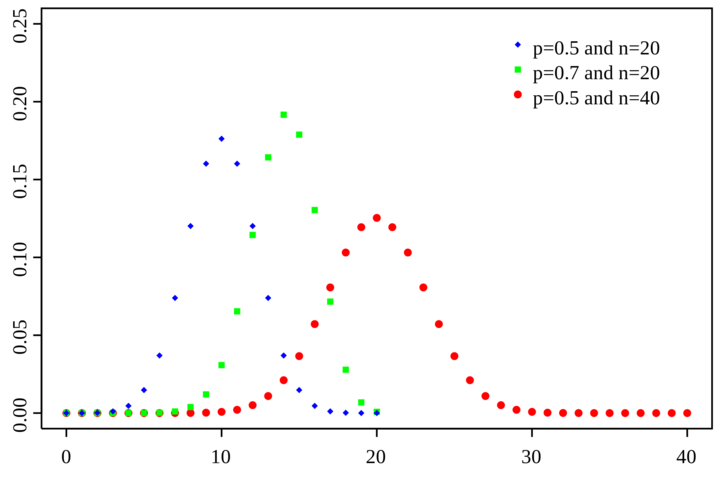

A Binomial Distribution describes the number of successes obtained when a Bernoulli Trial is repeated some number of times, $ n $, each time with the same probability $ p $. As such, the binomial distribution depends on both $ n $ and $ p $. Example distributions for several values of $ n $ and $ p $ are shown in the accompanying image[1].

As seen in this image, the overall range of values depends solely on $ n $ because, with $ n $ trials, the event can only occur $ 0 $ to $ n $ times. The peak of the distribution, however, occurs at or near $ np $, which is the mean number of successes. The spread also depends on $ n $: the variance is $ np(1-p) $, so for a fixed $ p $, smaller ranges result in less variance and larger ranges result in greater variance.
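These observations can be checked numerically. The following sketch repeats an experiment of $ n $ Bernoulli Trials many times and compares the sample mean and variance of the success counts with the standard values $ np $ and $ np(1-p) $ for a binomial distribution. The helper names `binomial_sample` and `summarize`, the number of runs, and the seed are assumptions made for this illustration.

```python
import random

def binomial_sample(n, p, rng):
    """Count the successes in n independent Bernoulli Trials."""
    return sum(1 for _ in range(n) if rng.random() < p)

def summarize(n, p, runs=20_000, seed=1):
    """Return the sample mean and variance of the success count over many runs."""
    rng = random.Random(seed)
    counts = [binomial_sample(n, p, rng) for _ in range(runs)]
    mean = sum(counts) / runs
    variance = sum((c - mean) ** 2 for c in counts) / runs
    return mean, variance

p = 0.5
for n in (10, 40):
    mean, variance = summarize(n, p)
    # Compare the simulated values with n*p and n*p*(1-p).
    print(n, mean, variance, "expected:", n * p, n * p * (1 - p))
```

For a fixed $ p $, the larger value of $ n $ should show both a larger mean and a larger variance, in line with the image.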

Finally, let us consider the probability that the event occurs some number of times, $ i $. We have already stated that, for each individual trial, the probability of the event occurring is $ p $. Over $ n $ trials, the event must occur $ i $ times, each with probability $ p $, and must not occur the remaining $ n-i $ times, each with probability $ 1-p $. Because the trials are independent, any one particular sequence of $ i $ successes and $ n-i $ failures has probability $ p^i(1-p)^{n-i} $. These $ i $ successes can be arranged among the $ n $ trials in $ {n \choose i} $ different ways. Therefore, the probability of the event occurring $ i $ times can be found using the following formula.

$$ P(i)=p^i(1-p)^{n-i}{n \choose i}=p^i(1-p)^{n-i}\frac{n!}{i!(n-i)!} $$
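The formula translates directly into code. The sketch below is a minimal illustration, assuming Python 3.8 or later for `math.comb`; the function name `binomial_pmf` and the values $ n=10 $, $ p=0.5 $ are hypothetical choices for this example.

```python
from math import comb

def binomial_pmf(i, n, p):
    """P(i) = p**i * (1 - p)**(n - i) * C(n, i), the formula above."""
    return p**i * (1 - p)**(n - i) * comb(n, i)

n, p = 10, 0.5  # illustrative values
pmf = [binomial_pmf(i, n, p) for i in range(n + 1)]

print(pmf[3])    # 120 / 1024 = 0.1171875
print(sum(pmf))  # probabilities over i = 0..n sum to 1 (up to rounding)
```

For instance, with $ n=10 $ and $ p=0.5 $ there are $ {10 \choose 3}=120 $ ways to place three successes, each arrangement having probability $ (0.5)^{10}=1/1024 $, so $ P(3)=120/1024 $.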