Revision as of 14:14, 24 March 2014

What is a Markov chain/process? What can you do with it? And why are lots of people talking about them?

Outlines of the Project

A) What is a Markov Chain

-Stochastic process

-Definition of Markov Chain

-Concrete examples and several properties

B) What can we do with it?

-Transition Probabilities and Transition Matrix

-Computation with Transition Matrix

-Matrix limits and Markov Chains

C) Why is it useful?

-Applications in Real Life with examples and computation

1. What is a Markov chain?

To answer this question, we should first understand the definition of a stochastic process, because a Markov chain is a special type of stochastic process. Definition of a stochastic process:

“A sequence of random variables X1, X2… is called a stochastic process with discrete time parameter” [1]

Explanation: We may think of X1, X2, … as samples of an experiment. In a stochastic process, they represent the states of the process: X1 is called the initial state, and Xn represents the state at time n. In an introductory probability course, we are familiar with models in which X1, …, Xn are independent and identically distributed (iid) random variables. However, the states of a stochastic process do not have to be independent or identically distributed. I think this point is especially important for eliminating confusion among first-time learners of Markov chains.

Definition of a Markov chain

A stochastic process is a Markov chain if, for each time n, the conditional distributions of all Xn+j given X1, …, Xn depend only on Xn and not on X1, …, Xn-1. In most basic cases, we consider j = 1; that is, the probability distribution of the random variable Xn is determined by the value of the (n-1)th state, Xn-1. This means that the distribution of future states depends only on the present state: once the present state is known, the past states carry no additional information.
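For the basic case j = 1, the definition can be written as a single equation. This symbolic form is a standard restatement added here for reference, not taken from the original source; $ i_1, \ldots, i_{n-1}, i, j $ stand for arbitrary possible states:

```latex
P(X_{n+1} = j \mid X_1 = i_1, \ldots, X_{n-1} = i_{n-1}, X_n = i)
  = P(X_{n+1} = j \mid X_n = i)
```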

*Note: In a Markov chain, the value of the present state determines only the “probability distribution of the future state”; it does not determine the exact value of the future state.

A concrete example

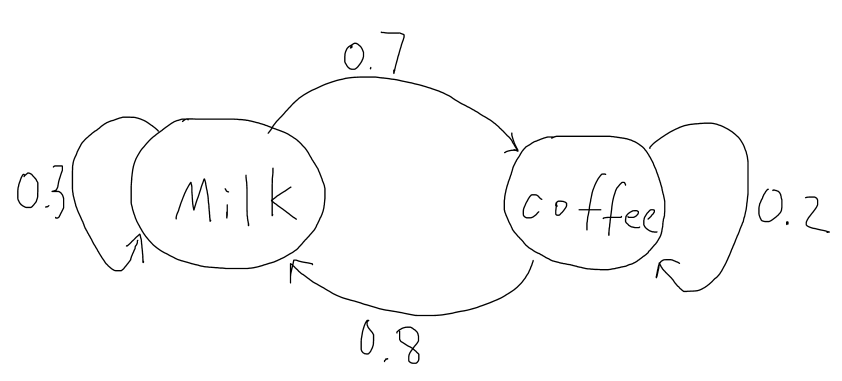

Consider a person who has two choices of drink for breakfast every morning: milk or coffee. Let Xi = 1 if the person drinks milk on the ith morning, and Xi = 2 if he drinks coffee on the ith morning. The sequence of random variables X1, X2, … is then a stochastic process with two possible states at each time. To make it a Markov chain, suppose that each morning's choice depends only on what he drank the previous morning, that he usually prefers switching to repeating, and that he slightly prefers milk to coffee. Specifically, assume that the probability he chooses milk today given that he chose milk yesterday is 0.3, and the probability he chooses coffee today given that he chose coffee yesterday is 0.2. Then this sequence of states is a Markov chain.

$ P(X_{n+1}=1|X_n=1)=0.3, \quad P(X_{n+1}=2|X_n=1)=1-0.3=0.7 $

$ P(X_{n+1}=1|X_n=2)=1-0.2=0.8, \quad P(X_{n+1}=2|X_n=2)=0.2 $
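To illustrate the note above, here is a minimal Python sketch (not part of the original project) that simulates the breakfast chain. The names `TRANSITION` and `simulate_breakfasts` are hypothetical. Each morning's drink is drawn at random using only the previous morning's row of probabilities, so the present state fixes the distribution of the next state but not its value.

```python
import random

# Breakfast example: state 1 = milk, state 2 = coffee.
# TRANSITION[i][j] = P(X_{n+1} = j | X_n = i).
TRANSITION = {
    1: {1: 0.3, 2: 0.7},
    2: {1: 0.8, 2: 0.2},
}


def simulate_breakfasts(first_state, days, seed=None):
    """Return a list of `days` states, starting with `first_state`.

    Each next state is drawn using only the current state's row of
    TRANSITION, which is the Markov property in action.
    """
    rng = random.Random(seed)
    states = [first_state]
    for _ in range(days - 1):
        row = TRANSITION[states[-1]]
        # Pick the next state with the probabilities in the current row.
        next_state = rng.choices(list(row), weights=list(row.values()), k=1)[0]
        states.append(next_state)
    return states


if __name__ == "__main__":
    print(simulate_breakfasts(first_state=1, days=10, seed=42))
```

Running it twice with different seeds gives different sequences from the same starting state, which is exactly the point of the note: the chain is random even though its transition probabilities are fixed.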

What can we do with it?

Transition probabilities and Transition Matrix

We define $ P_{ij} $ to be the probability that the future state is j given the present state is i:

$ P(X_{n+1}=j|X_n=i)=P_{ij} $

These are called transition distributions. If a Markov chain’s transition distributions do not depend on n (as in the example above), we say that the Markov chain has stationary transition distributions.

A Markov chain with stationary transition distributions can be represented by a transition matrix. For instance, the transition matrix for the example above is:

$ P=\begin{pmatrix} 0.3 & 0.7 \\ 0.8 & 0.2 \end{pmatrix} $

More generally, for a Markov chain with k states: $ P=\begin{bmatrix} P_{11} & \cdots & P_{1k} \\ \vdots & \ddots & \vdots \\ P_{k1} & \cdots & P_{kk} \end{bmatrix} $

General rule for the distribution of the nth state, where $ x_n $ is the row vector whose jth entry is $ P(X_n=j) $: $ x_n=x_{n-1}P $
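The rule $ x_n=x_{n-1}P $ can be applied step by step. Below is a minimal Python sketch for any k-state chain, with the matrix stored as a list of rows; the function names are hypothetical, and exact fractions are used only to avoid floating-point round-off in the breakfast example.

```python
from fractions import Fraction


def next_distribution(x, P):
    """One step of x_n = x_{n-1} P for a row vector x and a k x k matrix P."""
    k = len(P)
    # Entry j of xP is the sum over i of P(X_{n-1} = i) * P(X_n = j | X_{n-1} = i).
    return [sum(x[i] * P[i][j] for i in range(k)) for j in range(k)]


def distribution_after(x, P, steps):
    """Apply next_distribution `steps` times, starting from the distribution x."""
    for _ in range(steps):
        x = next_distribution(x, P)
    return x


# Breakfast example: milk drinker at time n, three steps ahead.
P = [[Fraction(3, 10), Fraction(7, 10)],
     [Fraction(8, 10), Fraction(2, 10)]]

print(distribution_after([1, 0], P, 3))  # [Fraction(19, 40), Fraction(21, 40)]
```

Here 19/40 = 0.475 and 21/40 = 0.525, so iterating the rule one day at a time reaches the same answer as multiplying by $ P^3 $ directly.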

Important Notes:

$ P_{ij} $ in the above matrix represents $ P(X_{n+1}=j|X_n=i) $, so the entries in each row sum to 1 (this follows the same notation as my probability textbook and most professional articles I found in the electronic library). However, in some textbooks (for example, my linear algebra textbook) and some resources on the Internet, $ P_{ij} $ denotes $ P(X_{n+1}=i|X_n=j) $, so the entries in each column sum to 1. To avoid confusion, we follow the first convention in this note.
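A small Python sketch of the two conventions, using the breakfast matrix with exact fractions (the names `P_row`, `P_col`, `step_row` and `step_col` are hypothetical). Switching conventions amounts to taking the transpose: in the row convention the distribution is a row vector x and the update is xP, while in the column convention it is a column vector v and the update is Pv.

```python
from fractions import Fraction

# Row convention (used in this note): P_row[i][j] = P(X_{n+1} = j | X_n = i).
P_row = [[Fraction(3, 10), Fraction(7, 10)],
         [Fraction(8, 10), Fraction(2, 10)]]

# Column convention: P_col[i][j] = P(X_{n+1} = i | X_n = j), i.e. the transpose.
P_col = [list(column) for column in zip(*P_row)]


def step_row(x, P):
    """Row convention: the next distribution is the row vector x P."""
    k = len(P)
    return [sum(x[i] * P[i][j] for i in range(k)) for j in range(k)]


def step_col(v, P):
    """Column convention: the next distribution is the column vector P v."""
    k = len(P)
    return [sum(P[i][j] * v[j] for j in range(k)) for i in range(k)]


# Rows sum to 1 in the row convention; columns sum to 1 in the column convention.
assert all(sum(row) == 1 for row in P_row)
assert all(sum(column) == 1 for column in zip(*P_col))

# Both conventions give the same next-day distribution for a milk drinker.
assert step_row([1, 0], P_row) == step_col([1, 0], P_col)
```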

The transition matrix makes computation in a Markov chain easier. In the breakfast example above (which has stationary transition probabilities), if we are given that the person drank milk at time n, $ X_n=1 $, we can determine the probability distribution of $ X_{n+3} $. The initial state distribution can be written as the row vector $ x_n=\begin{pmatrix}1 & 0\end{pmatrix} $:

$ x_{n+3}=x_{n+2}P=(x_{n+1}P)P=((x_nP)P)P=x_nP^3=\begin{pmatrix}1 & 0\end{pmatrix} \begin{pmatrix} 0.3 & 0.7 \\ 0.8 & 0.2 \end{pmatrix}^3 $

$ x_{n+3}=\begin{pmatrix}0.475 & 0.525\end{pmatrix} $
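This arithmetic can be checked with a short Python sketch (hypothetical helper names, exact fractions) that first forms the whole matrix $ P^3 $ by repeated multiplication and then multiplies the row vector $ x_n $ by it. Because $ x_n=\begin{pmatrix}1 & 0\end{pmatrix} $, the result is simply the first row of $ P^3 $; the second row gives the corresponding distribution for someone who drank coffee at time n.

```python
from fractions import Fraction


def matmul(A, B):
    """Product of two square matrices stored as lists of rows."""
    k = len(A)
    return [[sum(A[i][m] * B[m][j] for m in range(k)) for j in range(k)]
            for i in range(k)]


def matrix_power(P, n):
    """P^n by repeated multiplication (n >= 0)."""
    k = len(P)
    result = [[Fraction(int(i == j)) for j in range(k)] for i in range(k)]  # identity
    for _ in range(n):
        result = matmul(result, P)
    return result


P = [[Fraction(3, 10), Fraction(7, 10)],
     [Fraction(8, 10), Fraction(2, 10)]]

P3 = matrix_power(P, 3)
x_n = [Fraction(1), Fraction(0)]  # drank milk at time n
x_n3 = [sum(x_n[i] * P3[i][j] for i in range(2)) for j in range(2)]

print([float(p) for p in x_n3])  # [0.475, 0.525]
```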

This means that, given the person drank milk today, three days later he drinks milk with probability 0.475 and coffee with probability 0.525.

- Matrix Limits and Markov Chains

From the linear algebra courses (MA351 and MA353 at Purdue University), we know that a diagonalizable matrix A can be written in the form $ A=QDQ^{-1} $.

Here $ D=\begin{bmatrix} r_{1} & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & r_k \end{bmatrix} $, where $ r_1, \ldots, r_k $ are the eigenvalues of the k×k matrix A, and the columns of Q are the corresponding eigenvectors.

$ A^n=QDQ^{-1}QDQ^{-1}\cdots QDQ^{-1}=QD^nQ^{-1} $, because each inner product $ Q^{-1}Q $ is the identity matrix. Therefore $ \lim_{n \to \infty}A^n=\lim_{n \to \infty}(QD^nQ^{-1}) $.
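A minimal Python sketch checking the identity $ A^n=QD^nQ^{-1} $ on the breakfast matrix. The eigenvalues $ 1 $ and $ -1/2 $ and the eigenvectors $ (1, 1) $ and $ (7, -8) $ were worked out by hand for this sketch and are not given in the original note; the code confirms them by checking $ QDQ^{-1}=P $. All function names are hypothetical.

```python
from fractions import Fraction as F


def matmul(A, B):
    """Product of two square matrices stored as lists of rows."""
    k = len(A)
    return [[sum(A[i][m] * B[m][j] for m in range(k)) for j in range(k)]
            for i in range(k)]


def inverse_2x2(M):
    """Inverse of a 2 x 2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


P = [[F(3, 10), F(7, 10)],
     [F(8, 10), F(2, 10)]]

# Hand-computed eigen-decomposition (an assumption checked below).
eigenvalues = [F(1), F(-1, 2)]
Q = [[F(1), F(7)],
     [F(1), F(-8)]]  # columns are the eigenvectors (1, 1) and (7, -8)
Q_inv = inverse_2x2(Q)


def diag_power(values, n):
    """D^n for D = diag(values): raise each diagonal entry to the nth power."""
    k = len(values)
    return [[values[i] ** n if i == j else F(0) for j in range(k)] for i in range(k)]


def power_by_diagonalization(n):
    """P^n computed as Q D^n Q^{-1}."""
    return matmul(matmul(Q, diag_power(eigenvalues, n)), Q_inv)


def power_by_repeated_multiplication(n):
    result = [[F(1), F(0)], [F(0), F(1)]]
    for _ in range(n):
        result = matmul(result, P)
    return result


# Sanity check: Q D Q^{-1} reproduces P.
assert power_by_diagonalization(1) == P
# Both ways of computing P^n agree.
for n in range(10):
    assert power_by_diagonalization(n) == power_by_repeated_multiplication(n)
```

The diagonal route only needs the scalar powers $ 1^n $ and $ (-1/2)^n $, which is why it scales well to large n.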

Since D is a diagonal matrix, its powers are easy to compute: $ D^n $ is the diagonal matrix whose entries are the eigenvalues raised to the nth power. In the breakfast example above, this lets us compute the limit of $ P^n $.
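Here is a worked version of that computation. The eigen-decomposition is worked out for this illustration and is not given in the original note. The characteristic polynomial of the breakfast matrix is $ \det(P-rI)=(0.3-r)(0.2-r)-0.56=r^2-0.5r-0.5=(r-1)(r+0.5) $, so $ r_1=1 $ and $ r_2=-0.5 $, with eigenvectors $ (1, 1)^T $ and $ (7, -8)^T $:

```latex
P = QDQ^{-1}, \quad
Q = \begin{pmatrix} 1 & 7 \\ 1 & -8 \end{pmatrix}, \quad
D = \begin{pmatrix} 1 & 0 \\ 0 & -\tfrac{1}{2} \end{pmatrix}, \quad
Q^{-1} = \frac{1}{15}\begin{pmatrix} 8 & 7 \\ 1 & -1 \end{pmatrix}

\lim_{n \to \infty} D^n = \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix}
\quad \text{since } \left(-\tfrac{1}{2}\right)^n \to 0

\lim_{n \to \infty} P^n
  = Q \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix} Q^{-1}
  = \begin{pmatrix} \tfrac{8}{15} & \tfrac{7}{15} \\ \tfrac{8}{15} & \tfrac{7}{15} \end{pmatrix}
  \approx \begin{pmatrix} 0.533 & 0.467 \\ 0.533 & 0.467 \end{pmatrix}
```

Both rows of the limit equal $ (8/15, 7/15) $, so for large n the probability that he drinks milk approaches $ 8/15 \approx 0.533 $ regardless of what he drank at the start.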