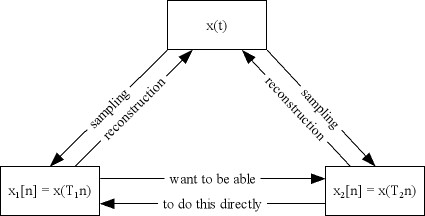

Revision as of 18:08, 22 September 2009

Sample Rate Conversion

Assume a continuous-time signal $ x(t) $ whose samples are $ x_1[n] = x(T_1 n) $.

We wish to convert a signal sampled with period $ T_1 $ into one sampled with period $ T_2 $ without having to reconstruct the original $ x(t) $ and then resample it at the new rate.

There are two cases here.

1. $ T_2 $ is a multiple of $ T_1 $

- conversion can be accomplished by down-sampling

2. $ T_2 $ is a divisor of $ T_1 $

- conversion can be accomplished by up-sampling followed by a low-pass filter (LPF)

Case 1 - $ T_2 $ is a multiple of $ T_1 $

We want to implement the following system:

$ x_1[n] \longrightarrow D = \frac{T_2}{T_1} \bigg\Downarrow \longrightarrow x_2[n] $

We know that

$ x_2[n] = x_1[Dn] $

where $ D = \frac{T_2}{T_1} $

since $ x_1[Dn] = x(T_1 D n) = x(T_2 n) $, which is exactly $ x(t) $ sampled with period $ T_2 $.
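The relation $ x_2[n] = x_1[Dn] $ can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the test signal `x`, the period `T1`, and the factor `D` are illustrative choices that do not come from the text.

```python
import numpy as np

def x(t):
    # Hypothetical continuous-time signal, chosen only for illustration.
    return np.cos(2 * np.pi * 3.0 * t)

T1 = 0.01      # original sampling period (assumed value)
D = 4          # integer down-sampling factor (assumed value)
T2 = D * T1    # new sampling period, an integer multiple of T1

n1 = np.arange(200)
x1 = x(T1 * n1)          # x_1[n] = x(T_1 n)

x2 = x1[::D]             # down-sampling: x_2[n] = x_1[D n]

n2 = np.arange(len(x2))
x2_direct = x(T2 * n2)   # x(T_2 n), sampled directly with period T_2

# Largest difference between the two routes (only rounding error is expected).
max_err = float(np.max(np.abs(x2 - x2_direct)))
```

Keeping every `D`-th sample gives the same sequence as sampling $ x(t) $ with period $ T_2 $, so `max_err` should be at the level of floating-point rounding.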

In the Fourier domain, this becomes

$ F(x_2[n]) = F(x_1[Dn]) $

$ X_2(\omega) = \sum_{n=-\infty}^{\infty} x_1[Dn] e^{-j \omega n} $

Let $ m = Dn $, so that $ m $ takes only values that are integer multiples of $ D $:

$ X_2(\omega) = \sum_{\substack{m=-\infty \\ m \text{ multiple of } D}}^{\infty} x_1[m] e^{-j \omega \frac{m}{D}} $

Now, we can introduce a function $ s_D[m] $ such that

$ s_D[m] = \begin{cases} 1, & \mbox{if }m\mbox{ multiple of }D\\ 0, & \mbox{else } \end{cases} $

Because $ s_D[m] $ is periodic with period $ D $, it can be written as the Fourier series

$ s_D[m] = \frac{1}{D} \sum_{k = 0}^{D-1} (e^{j \frac{2 \pi}{D} m})^k $
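The series representation of the indicator $ s_D[m] $ can be sanity-checked numerically. This is a small sketch assuming NumPy; the helper names `s_D_direct` and `s_D_series`, the value `D = 4`, and the range of `m` are hypothetical choices made for illustration.

```python
import numpy as np

def s_D_direct(m, D):
    # Definition: 1 where m is a multiple of D, 0 elsewhere.
    return np.where(m % D == 0, 1.0, 0.0)

def s_D_series(m, D):
    # (1/D) * sum_{k=0}^{D-1} exp(j * 2*pi * k * m / D), evaluated for every m.
    k = np.arange(D)
    terms = np.exp(1j * 2 * np.pi * np.outer(m, k) / D)  # shape (len(m), D)
    return terms.sum(axis=1) / D

D = 4
m = np.arange(-12, 13)   # includes negative indices

direct = s_D_direct(m, D)
series = s_D_series(m, D)

# Both should agree up to rounding, and the series should be (numerically) real.
agree = bool(np.allclose(series.real, direct) and np.allclose(series.imag, 0.0))
```

When $ m $ is a multiple of $ D $ every term equals 1 and the average is 1; otherwise the $ D $ terms are equally spaced on the unit circle and sum to zero.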

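Multiplying $ x_1[m] $ by $ s_D[m] $ removes every term where $ m $ is not a multiple of $ D $, so the sum can run over all $ m $:

$ X_2(\omega) = \sum_{m = -\infty}^{\infty} s_D[m] x_1[m] e^{-j \omega \frac{m}{D}} $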

$ X_2(\omega) = \sum_{m = -\infty}^{\infty} \frac{1}{D}\sum_{k = 0}^{D-1} e^{j k \frac{2 \pi}{D} m} x_1[m] e^{-j \omega \frac{m}{D}} $

$ X_2(\omega) = \frac{1}{D} \sum_{k=0}^{D-1} \sum_{m=-\infty}^{\infty} x_1[m] e^{-j m (\frac{\omega - 2 \pi k}{D})} $

The inner sum is the DTFT of $ x_1[m] $ evaluated at $ \frac{\omega - 2 \pi k}{D} $:

$ \sum_{m=-\infty}^{\infty} x_1[m] e^{-j m (\frac{\omega - 2 \pi k}{D})} = X_1(\frac{\omega - 2 \pi k}{D}) $

Therefore,

$ X_2(\omega) = \frac{1}{D} \sum_{k=0}^{D-1} X_1(\frac{\omega - 2 \pi k}{D}) $
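The final identity can be verified numerically for a finite-length sequence (taken to be zero outside its support). The following is a rough sketch assuming NumPy; the helper `dtft`, the random test sequence, and the choice `D = 3` are illustrative assumptions and not part of the derivation.

```python
import numpy as np

def dtft(x, omega):
    # DTFT of a finite sequence x[0..N-1], evaluated at each frequency in omega.
    n = np.arange(len(x))
    return np.exp(-1j * np.outer(omega, n)) @ x

rng = np.random.default_rng(0)
D = 3                            # down-sampling factor (assumed value)
x1 = rng.standard_normal(30)     # arbitrary test sequence x_1[n]
x2 = x1[::D]                     # x_2[n] = x_1[D n]

omega = np.linspace(-np.pi, np.pi, 101)

# Left-hand side: DTFT of the down-sampled sequence.
lhs = dtft(x2, omega)

# Right-hand side: (1/D) * sum_k X_1((omega - 2*pi*k) / D).
rhs = sum(dtft(x1, (omega - 2 * np.pi * k) / D) for k in range(D)) / D

max_err = float(np.max(np.abs(lhs - rhs)))
```

The two sides should match to within rounding error. The right-hand side shows that the spectrum of $ x_2[n] $ is a sum of $ D $ stretched and shifted copies of $ X_1(\omega) $ scaled by $ \frac{1}{D} $.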