[[File:AudioCompression 438Fall2016 flowdiagram.png|799px|framed|center|Flow diagram of audio data.]]


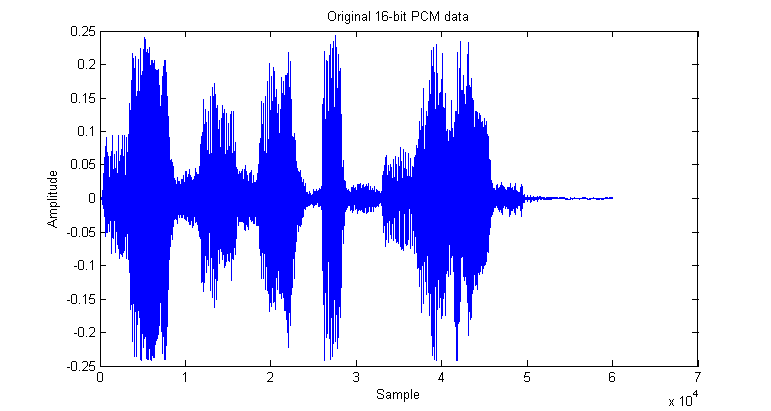

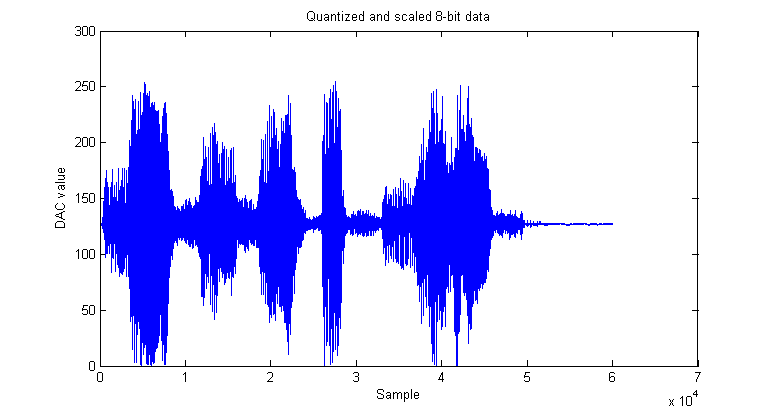


[[File:AudioCompression 438Fall2016 unquantized.png|771px|framed|center|Input waveform.]]

[[File:AudioCompression 438Fall2016 quantized.png|771px|framed|center|Quantized waveform.]]

Revision as of 21:34, 27 November 2016

Introduction and Background

From the earliest wax cylinders made by Thomas Edison [1] to be played on phonographs, to the .mp3 file format used ubiquitously today, storing audio has always been an important aspect of the music industry. Even though we are a far cry from having only cassette tapes or vinyl records to store audio, people have always striven to store more songs on smaller and smaller devices. This was no different for my Senior Design project – a remote-controlled BB-8 robot that needed to play “realistic astromech droid sounds” [2] with limited processing power and limited storage capacity. In our case, the goal was to create an audio compression technique that would:

- Compress audio files down to fit within the flash memory of the microcontroller [3]

- Be simple enough to decode that it does not interfere with the essential operation of BB-8

- Still sound “okay”, i.e., have no large perceptible distortions

Armed with this task, I decided to do what any engineer would do: look for an algorithm that already does what I want. Lossless audio compression methods such as FLAC were dropped almost immediately, as their compressed files were still much too large (not compressed enough) [4]. While MP3 seemed like the go-to choice, writing an MP3 decoder would likely be more work than the rest of the project itself. Therefore, I decided to use some concepts from ECE438, such as quantization and possibly LPC, to create a simple yet efficient compression system. Encoding complexity is a large part of the cost of many audio compression schemes; however, since we will only be playing back static, pre-encoded data, we don’t have to worry about the encoding complexity, only the decoding complexity.

Step 0: Defining Inputs and Outputs

The flow of data is straightforward: take a 16-bit mono PCM .wav file sampled at 48 kHz, encode it into some minimized form of data, then decode this data into an array of 8-bit unsigned integers that fits into the microcontroller’s memory and can be pushed to the DAC. What is stored on the microcontroller is therefore the minimized data for various sounds, so that we can store multiple sound effects (instead of being limited to one 2-second sound effect that fills up all of the flash storage).
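To give a sense of scale, here is a minimal sketch of the raw (uncompressed) size of a mono PCM clip. The function name `raw_pcm_bytes` is hypothetical, and the sketch makes no assumption about the actual flash capacity of the microcontroller, which is not stated here. It only evaluates sample rate × bytes per sample × duration for the 48 kHz rate given above.

```python
def raw_pcm_bytes(sample_rate_hz: int, bits_per_sample: int, seconds: float) -> int:
    """Size in bytes of uncompressed mono PCM audio (hypothetical helper)."""
    return int(sample_rate_hz * (bits_per_sample // 8) * seconds)

SAMPLE_RATE_HZ = 48_000  # sample rate of the input .wav file, from the text

# A 2-second effect, first as 16-bit source samples, then as 8-bit DAC samples.
size_16bit = raw_pcm_bytes(SAMPLE_RATE_HZ, 16, 2.0)
size_8bit = raw_pcm_bytes(SAMPLE_RATE_HZ, 8, 2.0)
print(size_16bit, size_8bit)  # 192000 96000
```

Even after dropping to 8 bits per sample, every second of audio costs 48,000 bytes, which is why the stored form needs to be smaller than the decoded array.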

Step 1: Conversion to DAC values

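The first step in this process is to quantize the original 16-bit data down to 8 bits. Since the DAC on our microcontroller only provides uniformly spaced output voltages, a Max (Lloyd-Max) quantizer, whose output levels are non-uniformly spaced, isn't applicable to this solution. Therefore, we simply quantize uniformly using the following formula: $ Y = \left\lfloor \frac{X-\min(X)}{\max(X)-\min(X)}\cdot 255 \right\rceil $, where Y is the quantized signal, X is the original signal, and $ \lfloor \cdot \rceil $ denotes rounding to the nearest integer. Subtracting the minimum and dividing by the full range maps X onto [0, 1] before scaling to the 8-bit range 0 to 255. Below is a plot of our sample input file and its 8-bit quantized version.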
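The uniform quantization step can be sketched in a few lines of plain Python. This is an illustrative sketch rather than the project's actual encoder: the function name `quantize_to_8bit` and the sample values are made up, and it assumes the signal is scaled by its full range, max(X) − min(X), so that the output spans exactly 0 to 255.

```python
def quantize_to_8bit(samples):
    """Uniformly map a sequence of integer samples onto 0..255 (hypothetical helper)."""
    lo, hi = min(samples), max(samples)
    span = hi - lo  # assumption: scale by the full range max(X) - min(X)
    if span == 0:
        # A constant signal has no range to scale; this sketch maps it to 0.
        return [0] * len(samples)
    # int(v + 0.5) rounds a non-negative value to the nearest integer.
    return [int((x - lo) / span * 255 + 0.5) for x in samples]

# Made-up 16-bit sample values covering the full signed range.
pcm16 = [-32768, -16384, 0, 16384, 32767]
dac8 = quantize_to_8bit(pcm16)
print(dac8)  # [0, 64, 128, 191, 255]
```

Because the scaling uses the minimum and maximum of the clip itself, a quiet recording is stretched to use all 256 DAC levels, at the cost of losing its original absolute level.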