Revision as of 06:28, 12 April 2010

About the batch perceptron

Since linear discriminant material will not be in any ECE662 homework this semester, I thought that writing this contribution would be good practice for understanding how the perceptron classifier works in batch mode.

Assume that we have two classes and, for each class, a training set $T = \{\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_{10}\}$ of 10 samples in 2D. We want to use the training samples to estimate the parameters of the hyperplane that separates the samples of the two classes, as shown below:

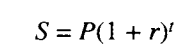

We want to find a hyperplane $H = \{\mathbf{x} : \langle \mathbf{W}, \mathbf{x} \rangle + b = 0\}$ that separates the training vectors of the two classes. This problem can be stated as solving the following set of linear inequalities with respect to the weight vector $\mathbf{W}$ and the bias $b$:

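$\langle \mathbf{W}, \mathbf{x} \rangle + b \geq 0$ for $\mathbf{x}$ in class 1

$\langle \mathbf{W}, \mathbf{x} \rangle + b < 0$ for $\mathbf{x}$ in class 2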
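As a minimal sketch of this decision rule (in Python, not the author's MATLAB code), the function below evaluates the linear discriminant and returns the class label. It uses the W and b values reported later on this page; the two test points are hypothetical and were chosen only so that they fall on opposite sides of the line.

```python
def classify(W, b, x):
    """Return 1 if <W, x> + b >= 0 (class 1), otherwise 2 (class 2)."""
    g = sum(w_i * x_i for w_i, x_i in zip(W, x)) + b  # linear discriminant <W, x> + b
    return 1 if g >= 0 else 2


# Weight vector and bias reported for the experiment on this page
W = [23.15, -25.95]
b = -36.0

# Hypothetical test points
print(classify(W, b, (5.0, 0.0)))  # g is about 79.75, so class 1
print(classify(W, b, (0.0, 0.0)))  # g = -36, so class 2
```

Points exactly on the hyperplane ($g = 0$) are assigned to class 1, matching the $\geq$ in the first inequality.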

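If a solution to the above inequalities exists, the training data are called linearly separable, and we can apply the batch perceptron algorithm to find $\mathbf{W}$ and $b$. To do so, we first augment each training vector with a constant component 1, then normalize the samples of the second class by multiplying them by -1. If we write the augmented weight vector as $\mathbf{a} = [\mathbf{W}; b]$ and a transformed sample as $\mathbf{y}$, both inequalities reduce to requiring that every transformed sample lie on the positive side of the hyperplane $\mathbf{a}^T\mathbf{y} = 0$.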
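The augmentation and normalization step can be sketched as follows. This sketch assumes that samples are stored as Python tuples (x1, x2) and that the constant 1 is appended as the last component, so that $\mathbf{a} = [\mathbf{W}; b]$. The function name `augment_and_normalize` and the toy data are hypothetical.

```python
def augment_and_normalize(class1, class2):
    """Map 2D samples to 3D so that one weight vector must give a^T y > 0.

    Each x = (x1, x2) becomes y = (x1, x2, 1); class-2 vectors are then
    multiplied by -1. With a = [W1, W2, b], a^T y equals W.x + b for
    class-1 samples and -(W.x + b) for class-2 samples.
    """
    augmented1 = [[x1, x2, 1.0] for x1, x2 in class1]
    augmented2 = [[-x1, -x2, -1.0] for x1, x2 in class2]  # augmented, then negated
    return augmented1 + augmented2


# Hypothetical toy data (not the data used in the original experiment)
class1 = [(2.0, 2.0), (3.0, 1.0)]
class2 = [(-1.0, -1.0), (-1.0, -2.0)]
samples = augment_and_normalize(class1, class2)
print(samples)
# [[2.0, 2.0, 1.0], [3.0, 1.0, 1.0], [1.0, 1.0, -1.0], [1.0, 2.0, -1.0]]
```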

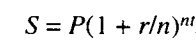

Notice that in the original feature space we are seeking a hyperplane (in this case a line in 2D) that separates the patterns of the two classes. After augmentation and normalization, we are instead seeking a hyperplane through the origin (a plane in 3D) that puts all normalized patterns on the same positive side.

To illustrate the idea, I wrote MATLAB code that trains a binary linear classifier with the perceptron algorithm (DHS, Section 5.5.1). I generated synthetic 2D training data for two classes and applied the batch perceptron algorithm to estimate the separating hyperplane H.
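The batch update from DHS Section 5.5.1 can be sketched in Python as shown below. This is not the author's MATLAB code. The initial weight vector is assumed here to be zero. In each iteration, the sketch collects every sample that the current $\mathbf{a}$ misclassifies (that is, every sample with $\mathbf{a}^T\mathbf{y} \leq 0$). It then adds the sum of those samples, scaled by the learning rate. It stops once the norm of that correction falls below the threshold. The default values mirror the settings reported on this page: learning rate 0.5, threshold 0.001, and at most 100 iterations. The function name and the toy data are hypothetical.

```python
def batch_perceptron(samples, eta=0.5, theta=0.001, max_iter=100):
    """Batch perceptron (DHS Sec. 5.5.1) on augmented, normalized samples.

    samples: list of equal-length lists y, already augmented with 1 and with
    class-2 samples multiplied by -1. Returns (a, k): the weight vector and
    the number of iterations performed.
    """
    dim = len(samples[0])
    a = [0.0] * dim  # assumption: start from the zero vector
    for k in range(1, max_iter + 1):
        # Y_k: samples the current a misclassifies (a^T y <= 0)
        misclassified = [y for y in samples
                         if sum(a_j * y_j for a_j, y_j in zip(a, y)) <= 0]
        # Batch correction: eta times the sum of all misclassified samples
        update = [eta * sum(y[j] for y in misclassified) for j in range(dim)]
        a = [a_j + u_j for a_j, u_j in zip(a, update)]
        # Stop once the correction is smaller than theta
        if sum(u_j * u_j for u_j in update) ** 0.5 < theta:
            return a, k
    # Not converged within max_iter (e.g. the data are not linearly separable)
    return a, max_iter


# Hypothetical toy data, already augmented (last component) and normalized
samples = [
    [2.0, 2.0, 1.0], [3.0, 1.0, 1.0], [2.5, 3.0, 1.0],      # class 1
    [1.0, 1.0, -1.0], [2.0, 0.0, -1.0], [0.0, 2.0, -1.0],   # class 2, negated
]
a, k = batch_perceptron(samples)
W, b = a[:-1], a[-1]
print(W, b, k)  # [5.25, 4.5] 0.0 2
```

The `max_iter` cap matters because, when the data are not linearly separable, the correction never vanishes and the loop would otherwise run forever.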

The figure below shows the samples in the original feature space, together with the hyperplane (the blue line) that separates the samples of the two classes. The resulting weight vector was W = [23.15, -25.95] and the bias was b = -36. The algorithm ran for 32 iterations with the following settings:

Maximum number of iterations = 100

Stopping threshold = 0.001

Learning rate = 0.5
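To draw the separating line in the original 2D space, the learned W and b can be turned into an explicit equation for x2 as a function of x1, provided that W[1] is nonzero. The following is a minimal Python sketch using the reported values; the function name `boundary_x2` is hypothetical.

```python
def boundary_x2(W, b, x1):
    """Return x2 on the line W[0]*x1 + W[1]*x2 + b = 0 (requires W[1] != 0)."""
    if W[1] == 0:
        raise ValueError("vertical boundary: x1 = -b / W[0]")
    return -(W[0] * x1 + b) / W[1]


# Values reported for the experiment on this page
W = [23.15, -25.95]
b = -36.0

# Two points are enough to draw the line
for x1 in (0.0, 2.0):
    print(x1, boundary_x2(W, b, x1))
```

For the reported values, the line crosses x1 = 0 at x2 = 36 / (-25.95), which is roughly -1.387.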

The corresponding hyperplane (a plane in 3D) in the transformed feature space is shown in the figure below. Notice that here all the training samples lie on the positive side of the hyperplane:
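The property illustrated in this figure can also be checked numerically. A weight vector $\mathbf{a}$ puts every training sample on the positive side when $\mathbf{a}^T\mathbf{y} > 0$ holds for each augmented, normalized sample $\mathbf{y}$. The following is a small Python sketch; the samples and weight vectors are hypothetical.

```python
def all_on_positive_side(a, samples):
    """Return True if a^T y > 0 for every augmented, normalized sample y."""
    return all(sum(a_i * y_i for a_i, y_i in zip(a, y)) > 0 for y in samples)


# Hypothetical samples: class 1 augmented with 1, class 2 augmented and negated
samples = [[2.0, 2.0, 1.0], [3.0, 1.0, 1.0], [1.0, 1.0, -1.0], [1.0, 2.0, -1.0]]

print(all_on_positive_side([1.0, 1.0, 0.0], samples))  # True
print(all_on_positive_side([1.0, 1.0, 3.0], samples))  # False: third sample gives -1
```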