= Limits of Functions =

by: [[User:Yehm|Michael Yeh]], proud Member of [[Math squad|the Math Squad]].

<pre> keyword: tutorial, limit, function, sequence </pre>

----

== Introduction ==

Provided here is a brief introduction to the concept of "limit," which features prominently in calculus. To help motivate the definition, we first consider continuity at a point. Unless otherwise mentioned, all functions here will have domain and range <math>\mathbb{R}</math>, the real numbers. Words such as "all," "every," "each," "some," and "there is/are" are quite important here; read carefully!

----

== Continuity at a point ==

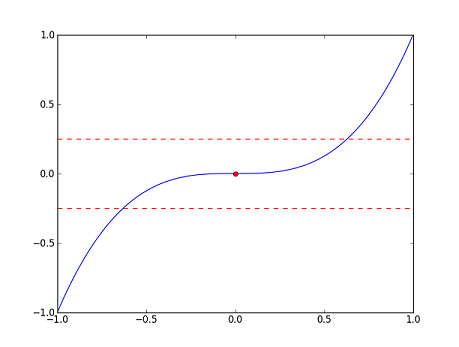

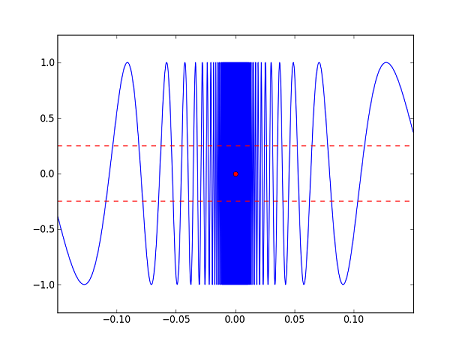

Let's consider the following three functions along with their graphs (in blue). The red dots in each correspond to <math>x=0</math>, e.g. for <math>f_1</math>, the red dot is the point <math>(0,f_1(0))=(0,0)</math>. Ignore the red dashed lines for now; we will explain them later.

:<math>\displaystyle f_1(x)=x^3</math>

[[Image:limits_of_functions_f.png]]

:<math>f_2(x)=\begin{cases}-x^2-\frac{1}{2} &\text{if}~x<0\\ x^2+\frac{1}{2} &\text{if}~x\geq 0\end{cases}</math>

[[Image:limits_of_functions_g.png]]

:<math>f_3(x)=\begin{cases} \sin\left(\frac{1}{x}\right) &\text{if}~x\neq 0\\ 0 &\text{if}~x=0\end{cases}</math>

[[Image:limits_of_functions_h.png]]

We can see from the graphs that <math>f_1</math> is "continuous" at <math>0</math>, and that <math>f_2</math> and <math>f_3</math> are "discontinuous" at <math>0</math>. But what exactly do we mean? Intuitively, <math>f_1</math> seems to be continuous at <math>0</math> because <math>f_1(x)</math> is close to <math>f_1(0)</math> whenever <math>x</math> is close to <math>0</math>. On the other hand, <math>f_2</math> appears to be discontinuous at <math>0</math> because there are points <math>x</math> which are close to <math>0</math> but such that <math>f_2(x)</math> is far away from <math>f_2(0)</math>. The same observation applies to <math>f_3</math>.

Let's make these observations more precise. First, we will try to estimate <math>f_1(0)</math> with error at most <math>0.25</math>, say. In the graph of <math>f_1</math>, we have marked off a band of width <math>0.5</math> about <math>f_1(0)</math>. So, any point in the band will provide a good approximation here. As a first try, we might think that if <math>x</math> is close enough to <math>0</math>, then <math>f_1(x)</math> will be a good estimate of <math>f_1(0)</math>. Indeed, we see from the graph that for ''any'' <math>x</math> in the interval <math>(-\sqrt[3]{0.25},\sqrt[3]{0.25})</math>, <math>f_1(x)</math> lies in the band (or if we wish to be more pedantic, we would say that <math>(x,f_1(x))</math> lies in the band). So, "close enough to <math>0</math>" here means in the interval <math>(-\sqrt[3]{0.25},\sqrt[3]{0.25})</math>; note that ''any'' point which is close enough to <math>0</math> provides a good approximation of <math>f_1(0)</math>.

There is nothing special about our error bound <math>0.25</math>. Choose a positive number <math>\varepsilon</math>, and suppose we would like to estimate <math>f_1(0)</math> with error at most <math>\varepsilon</math>. Then, as above, we can find some interval <math>\displaystyle(-\delta,\delta)</math> about <math>0</math> (if you like to be concrete, any <math>\displaystyle\delta</math> such that <math>0<\delta<\sqrt[3]{\varepsilon}</math> will do) so that for ''any'' <math>x</math> in <math>\displaystyle(-\delta,\delta)</math>, <math>f_1(x)</math> will be a good estimate for <math>f_1(0)</math>, i.e. <math>f_1(x)</math> will be no more than <math>\varepsilon</math> away from <math>f_1(0)</math>.
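The choice of <math>\displaystyle\delta</math> above can be spot-checked numerically. The following is only a minimal sketch: the helper names <code>f1</code>, <code>delta_for</code> and <code>check</code> are our own, the value <math>\delta=\frac{1}{2}\sqrt[3]{\varepsilon}</math> is just one admissible choice, and sampling finitely many points illustrates the claim but does not prove it.

```python
def f1(x):
    return x ** 3

def delta_for(eps):
    # Any delta with 0 < delta < eps**(1/3) works; we (arbitrarily) take half the cube root.
    return 0.5 * eps ** (1.0 / 3.0)

def check(eps, samples=1000):
    """Return True if |f1(x) - f1(0)| < eps at evenly spaced sample points of (-delta, delta)."""
    delta = delta_for(eps)
    for i in range(1, samples):
        x = -delta + 2 * delta * i / samples  # strictly inside (-delta, delta)
        if abs(f1(x) - f1(0)) >= eps:
            return False
    return True

for eps in (0.25, 0.01, 1e-6):
    print(eps, delta_for(eps), check(eps))
```

Each row should report <code>True</code>, since <math>|x|<\frac{1}{2}\sqrt[3]{\varepsilon}</math> gives <math>|x^3|<\frac{\varepsilon}{8}<\varepsilon</math>.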

Can we do the same for <math>f_2</math>? That is, if <math>x</math> is close enough to <math>0</math>, then will <math>f_2(x)</math> be a good estimate of <math>f_2(0)</math>? Well, we see from the graph that <math>f_2(0.25)</math> provides a good approximation to <math>f_2(0)</math>. But if <math>0.25</math> is close enough to <math>0</math>, then certainly <math>-0.25</math> should be too; however, the graph shows that <math>f_2(-0.25)</math> is not a good estimate of <math>f_2(0)</math>. In fact, for any <math>x>0</math>, <math>f_2(-x)</math> will never be a good approximation for <math>f_2(0)</math>, even though <math>x</math> and <math>-x</math> are the same distance from <math>0</math>.

In contrast to <math>f_1</math>, we see that for any interval <math>\displaystyle(-\delta,\delta)</math> about <math>0</math>, we can find an <math>x</math> in <math>\displaystyle(-\delta,\delta)</math> such that <math>f_2(x)</math> is more than <math>0.25</math> away from <math>f_2(0)</math>.

The same is true for <math>f_3</math>. Whenever we find an <math>x</math> such that <math>f_3(x)</math> lies in the band, we can always find a point <math>y</math> such that 1) <math>y</math> is just as close or closer to <math>0</math> and 2) <math>f_3(y)</math> lies outside the band. So, it is not true that if <math>x</math> is close enough to <math>0</math>, then <math>f_3(x)</math> will be a good estimate for <math>f_3(0)</math>.

Let's summarize what we have found. For <math>f_1</math>, we saw that for each <math>\varepsilon>0</math>, we can find an interval <math>\displaystyle(-\delta,\delta)</math> about <math>0</math> (<math>\displaystyle\delta</math> depends on <math>\varepsilon</math>) so that for every <math>x</math> in <math>\displaystyle(-\delta,\delta)</math>, <math>|f_1(x)-f_1(0)|<\varepsilon</math>. However, <math>f_2</math> does not satisfy this property. More specifically, there is an <math>\varepsilon>0</math>, namely <math>\varepsilon=0.25</math>, so that for any interval <math>\displaystyle(-\delta,\delta)</math> about <math>0</math>, we can find an <math>x</math> in <math>\displaystyle(-\delta,\delta)</math> such that <math>|f_2(x)-f_2(0)|\geq\varepsilon</math>. The same is true of <math>f_3</math>.
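To see the failure for <math>f_2</math> and <math>f_3</math> concretely, here is a small sketch that, for a given <math>\displaystyle\delta</math>, produces a "bad" point inside <math>\displaystyle(-\delta,\delta)</math>. The function names and the particular bad points are our own choices for illustration: for <math>f_2</math> we take the midpoint <math>-\delta/2</math>, and for <math>f_3</math> we use a point of the form <math>1/(\pi/2+2\pi n)</math>, where the sine equals <math>1</math>.

```python
import math

def f2(x):
    return -x**2 - 0.5 if x < 0 else x**2 + 0.5

def f3(x):
    return math.sin(1.0 / x) if x != 0 else 0.0

def bad_point_f2(delta):
    # Any x in (-delta, 0) works; we take the midpoint.
    return -delta / 2

def bad_point_f3(delta):
    # x_n = 1 / (pi/2 + 2*pi*n) satisfies sin(1/x_n) = 1.
    # Choosing n > 1 / (2*pi*delta) guarantees 0 < x_n < delta.
    n = math.floor(1.0 / (2 * math.pi * delta)) + 1
    return 1.0 / (math.pi / 2 + 2 * math.pi * n)

eps = 0.25
for delta in (1.0, 0.1, 1e-3):
    x2, x3 = bad_point_f2(delta), bad_point_f3(delta)
    print(delta, abs(f2(x2) - f2(0)) >= eps, abs(f3(x3) - f3(0)) >= eps)
```

However small <math>\displaystyle\delta</math> is taken, both comparisons should come out <code>True</code>, which is exactly the negation of the property that <math>f_1</math> satisfies.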

Now we state the formal definition of continuity at a point. Compare this carefully with the previous paragraph.

'''DEFINITION 1.''' Let <math>f</math> be a function from <math>\displaystyle A</math> to <math>\mathbb{R}</math>, where <math>A\subset\mathbb{R}</math>. Then <math>f</math> is continuous at a point <math>c\in A</math> if for every <math>\varepsilon>0</math>, there is a <math>\displaystyle\delta>0</math> such that <math>|f(x)-f(c)|<\varepsilon</math> for any <math>x\in A</math> that satisfies <math>\displaystyle|x-c|<\delta</math>. <math>f</math> is said to be continuous if it is continuous at every point of <math>A</math>.

In our language above, <math>\varepsilon</math> is the error bound, and <math>\displaystyle\delta</math> is our measure of "close enough (to <math>c</math>)." Note that continuity is defined only for points in a function's domain. So, the function <math>k(x)=1/x</math> is technically continuous: it is continuous at every point of its domain, and <math>0</math> is not in the domain of <math>k</math>. If, however, we defined <math>k(0)=0</math>, then <math>k</math> would no longer be continuous.

----

== The Limit of a Function at a Point ==

Now, let's consider the two functions <math>g_1</math> and <math>g_2</math> below. Note that <math>g_1</math> is left undefined at <math>0</math>.

:<math>g_1(x)=\begin{cases}-x^2-x &\text{if}~x<0\\ x&\text{if}~x>0\end{cases}</math>

[[Image:limits_of_functions_f2.png]]

:<math>g_2(x)=\begin{cases}0 &\text{if}~x\neq 0\\ \frac{1}{2}&\text{if}~x=0\end{cases}</math>

[[Image:limits_of_functions_g2.png]]

Recall the function <math>f_1</math> from the previous section. We found that it was continuous at <math>0</math> because <math>f_1(x)</math> is close to <math>f_1(0)</math> if <math>x</math> is close enough to <math>0</math>. We can do something similar with <math>g_1</math> and <math>g_2</math> here. From the graph, we can see that <math>g_1(x)</math> is close to <math>0</math> whenever <math>x</math> is close enough, but not equal, to <math>0</math>. Similarly, we see that <math>g_2(x)</math> is close to <math>0</math> whenever <math>x</math> is close enough, but not equal, to <math>0</math>. The "not equal" part is important for both <math>g_1</math> and <math>g_2</math> because <math>g_1</math> is undefined at <math>x=0</math> while <math>g_2</math> has a discontinuity there. The idea is similar to that of continuity, but we ignore whatever happens at <math>x=0</math>. We are concerned more with how <math>g_1</math> and <math>g_2</math> behave around <math>0</math> rather than at <math>0</math>. This leads to the following definition.

'''DEFINITION 2.''' Let <math>f</math> be a function defined for all real numbers, with possibly finitely many exceptions, and with range <math>\mathbb{R}</math>. Let <math>c</math> be any real number. We say that the limit of <math>f</math> at <math>c</math> is <math>b</math>, or that the limit of <math>f(x)</math> as <math>x</math> approaches <math>c</math> is <math>b</math>, and write <math>\lim_{x\to c}f(x)=b</math> if for every <math>\varepsilon>0</math>, there is a <math>\displaystyle\delta>0</math> such that <math>|f(x)-b|<\varepsilon</math> whenever <math>\displaystyle 0<|x-c|<\delta</math>.

This is the same as the definition for continuity, except we ignore what happens at <math>c</math>. We can see this in two places in the above definition. The first is the use of <math>b</math> instead of <math>f(c)</math>, and the second is the condition <math>\displaystyle 0<|x-c|<\delta</math>, which says that <math>x</math> is close enough, but not equal, to <math>c</math>. The restriction on the domain of <math>f</math> in the above definition is not really necessary; if <math>f</math> has domain <math>A\subset\mathbb{R}</math>, we can define <math>\lim_{x\to c}f(x)</math> for any <math>c</math> which is a "limit point" of <math>\displaystyle A</math>. You can consult Rudin's ''Principles of Mathematical Analysis,'' for example, if you're interested. But, in most calculus courses, one usually encounters the situation described in the definition.

If you've ever seen ''Mean Girls,'' you know that the limit does not always exist. For example, if <math>f_2</math> is the function in the previous section, then <math>\lim_{x\to 0}f_2(x)</math> does not exist. We will show this rigorously in the exercises, but it is clear from the graph of <math>f_2</math>.

Having defined what a limit is, we make a few remarks about how continuity and limit are related. As a first observation, we can now restate the definition of continuity at a point more succinctly in terms of limits: <math>f</math> is continuous at <math>c</math> if and only if <math>\displaystyle\lim_{x\to c}f(x)=f(c)</math>. Now, let's take a look at the functions <math>g_1</math> and <math>g_2</math> defined earlier. <math>g_1</math> is continuous at every point of its domain; however, it is undefined at <math>0</math>. But since <math>\lim_{x\to 0}g_1(x)</math> exists and equals <math>0</math>, we can make <math>g_1</math> continuous on the entire real line by defining <math>g_1(0)=0</math>. We note that even though <math>\lim_{x\to 0}g_2(x)</math> exists, <math>g_2</math> is discontinuous at <math>0</math>; this is because <math>\lim_{x\to 0}g_2(x)=0\neq \frac{1}{2}=g_2(0)</math>. This shows that the existence of <math>\displaystyle\lim_{x\to c}f(x)</math> does not imply continuity at <math>c</math>, even though, as we just mentioned, continuity of <math>f</math> at <math>c</math> implies the existence of <math>\displaystyle\lim_{x\to c}f(x)</math>.
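The behavior of <math>g_1</math> and <math>g_2</math> near <math>0</math> can also be tabulated. This is only an illustrative sketch with our own function names; raising an error at <math>0</math> is our way of modeling the fact that <math>g_1</math> is undefined there. One can check by hand that <math>|g_1(x)|\leq|x|</math> for <math>0<|x|<1</math>, so <math>\delta=\min\{\varepsilon,1\}</math> works in Definition 2.

```python
def g1(x):
    if x == 0:
        raise ValueError("g1 is undefined at 0")
    return -x**2 - x if x < 0 else x

def g2(x):
    return 0.5 if x == 0 else 0.0

# Values on both sides of 0 shrink toward 0 as h does.
for h in (0.1, 0.01, 0.001):
    print(h, g1(-h), g1(h), g2(-h), g2(h))

# The value of g2 at 0 itself plays no role in the limit.
print(g2(0))
```

The table only samples a few points, so it suggests the limits rather than proving them; the proof is the <math>\varepsilon</math>-<math>\displaystyle\delta</math> argument.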

----

== Limits at Infinity ==

Let <math>f</math> be as in Definition 2. So far, we have only discussed <math>\displaystyle\lim_{x\to c}f(x)</math> when <math>c</math> is a real number. It is not difficult to modify Definition 2 for when <math>c=\pm\infty</math>. In Definition 2, we considered <math>x</math> to be "close enough" when <math>x</math> is in the interval <math>\displaystyle(c-\delta,c+\delta)</math> and <math>x\neq c</math>. For <math>\infty</math>, the analogue would be that <math>x</math> belongs to an interval of the form <math>\displaystyle(M,\infty)</math>, where <math>M</math> is a real number, and similarly for <math>-\infty</math>. So, we say that the limit of <math>f</math> at <math>\infty</math> (respectively, <math>-\infty</math>) is <math>b</math> and write <math>\displaystyle\lim_{x\to\infty}f(x)=b</math> (respectively, <math>\displaystyle\lim_{x\to-\infty}f(x)=b</math>) if for every <math>\varepsilon>0</math>, there is a real number <math>M</math> so that <math>|f(x)-b|<\varepsilon</math> whenever <math>x>M</math> (respectively, <math>x<M</math>).
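As an illustration of how <math>M</math> depends on <math>\varepsilon</math>, here is a sketch using the rational function from Exercise D(iv), whose limit at <math>+\infty</math> is <math>\frac{1}{4}</math>. The helper <code>M_for</code> and the bound behind it are our own: for <math>x\geq 1</math> one has <math>\left|f(x)-\frac{1}{4}\right|=\frac{|-40x^2+18|}{16x^3-8}\leq\frac{40x^2}{8x^3}=\frac{5}{x}</math>, so <math>M=\max\{1,5/\varepsilon\}</math> is one choice that works (it is not the smallest possible).

```python
def f(x):
    return (x**3 - 10 * x**2 + 4) / (4 * x**3 - 2)

def M_for(eps):
    # For x >= 1, |f(x) - 1/4| <= 5/x, so x > max(1, 5/eps) forces |f(x) - 1/4| < eps.
    return max(1.0, 5.0 / eps)

for eps in (0.1, 0.01, 0.001):
    M = M_for(eps)
    samples = [M + 1, 2 * M, 10 * M, 1000 * M]  # a few points beyond M
    print(eps, M, all(abs(f(x) - 0.25) < eps for x in samples))
```

As with any sampling, this checks only finitely many <math>x>M</math>; the inequality above is what covers all of them.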

----

== Exercises ==

The triangle inequality will be handy for a few of these.

A) Show that any constant function is continuous.

[[sln_Limits_of_functions_Exercise_A|Solution]]

B) Suppose <math>\lim_{x\to c}f(x)=a</math> and <math>\lim_{x\to c}g(x)=b</math>. Show that

:i) <math>\lim_{x\to c}(f+g)(x)=a+b</math>,

:ii) <math>\lim_{x\to c}(f-g)(x)=a-b</math>, and

:iii) <math>\lim_{x\to c}(fg)(x)=ab</math>.

Furthermore, if <math>b\neq0</math>, show that

:iv) <math>\lim_{x\to c}\left(\frac{f}{g}\right)(x)=\frac{a}{b}</math>.

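As a consequence of A) and B), together with the fact that the identity function <math>x\mapsto x</math> is continuous (take <math>\displaystyle\delta=\varepsilon</math>), any polynomial function is continuous.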

C) Show that <math>\lim_{x\to 0}f_2(x)</math> does not exist.

D) Compute the following limits (if they exist):

:i) <math>\lim_{x\to0}7</math>

:ii) <math>\lim_{x\to2}\left(5x^2-3\right)</math>

:iii) <math>\lim_{x\to+\infty}\frac{2x^2-3x^2-1}{x^3+5}</math>

:iv) <math>\lim_{x\to+\infty}\frac{x^3-10x^2+4}{4x^3-2}</math>

:v) <math>\lim_{x\to-2}\frac{5x^2-3}{x+2}</math>

E) (Derivatives) Let <math>f</math> be a function from <math>\mathbb{R}</math> to <math>\mathbb{R}</math> and <math>c\in\mathbb{R}</math>. If <math>\lim_{h\to 0}\frac{f(c+h)-f(c)}{h}</math> exists, we say that <math>f</math> is differentiable at <math>c</math>. The above limit is then called the "derivative of <math>f</math> at <math>c</math>" and is denoted by <math>\displaystyle f'(c)</math>. Intuitively, <math>f'(c)</math> is the instantaneous rate of change of <math>f</math> at the point <math>c</math>; it is also the slope of the line tangent to the graph of <math>f</math> at the point <math>(c,f(c))</math>.

:i) Let <math>\displaystyle f(x)=x^2</math>. Show that <math>f</math> is differentiable everywhere, and compute <math>\displaystyle f'</math>.

:ii) Let <math>\displaystyle g(x)=|x|</math>. Show that <math>g</math> is not differentiable at <math>0</math>.

== Solutions to Exercises ==

A) Let <math>f(x)=k</math> be a constant function with value <math>k</math> and let <math>c</math> be a real number. Now, let <math>\varepsilon>0</math> be given and set <math>\displaystyle\delta=1</math>. Then for ''any'' <math>x</math> in the interval <math>\displaystyle(c-\delta,c+\delta)</math>, <math>|f(x)-f(c)|=|k-k|=0<\varepsilon</math>.

B) Let <math>\varepsilon>0</math> be given. Then we can find a <math>\displaystyle\delta_1>0</math> so that <math>|f(x)-a|<\varepsilon</math> whenever <math>\displaystyle0<|x-c|<\delta_1</math>, and a <math>\displaystyle\delta_2>0</math> so that <math>|g(x)-b|<\varepsilon</math> whenever <math>\displaystyle0<|x-c|<\delta_2</math>. Set <math>\displaystyle\delta=\min\{\delta_1,\delta_2\}</math>. Then, for any <math>x</math> such that <math>\displaystyle0<|x-c|<\delta</math>,

:i)

:<math>|(f+g)(x)-(a+b)|=|(f(x)-a)+(g(x)-b)|\leq|f(x)-a|+|g(x)-b|<\varepsilon+\varepsilon=2\varepsilon</math>.

It doesn't really matter that we have <math>2\varepsilon</math> on the right side instead of <math>\varepsilon</math> because <math>2\varepsilon</math> can be made as small as we like. That is, we can think of <math>2\varepsilon</math> as our error bound, instead of <math>\varepsilon</math>.

:ii)

:<math>|(f-g)(x)-(a-b)|=|(f(x)-a)+(b-g(x))|\leq|f(x)-a|+|b-g(x)|<2\varepsilon</math>.

:iii) Note that by the Triangle Inequality, <math>|f(x)|-|a|\leq|f(x)-a|<\varepsilon</math> so that <math>|f(x)|<|a|+\varepsilon</math>. Then,

:<math>\begin{align}|(fg)(x)-ab|&=|f(x)g(x)-f(x)b+f(x)b-ab|\\
&\leq|f(x)g(x)-f(x)b|+|f(x)b-ab|\\
&=|f(x)||g(x)-b|+|f(x)-a||b|\\
&\leq(|a|+\varepsilon)|g(x)-b|+|f(x)-a||b|\\
&<(|a|+\varepsilon)\varepsilon+\varepsilon|b|\\
&=(|a|+|b|+\varepsilon)\varepsilon\end{align}</math>

Again, we are fine since <math>(|a|+|b|+\varepsilon)\varepsilon</math> can be made as small as we like.

:iv) By part (iii), it will suffice to show that <math>\lim_{x\to c}\left(\frac{1}{g}\right)(x)=\frac{1}{b}</math>. Let <math>\varepsilon>0</math> and set <math>\eta=\frac{1}{2}\min\{\varepsilon,|b|\}</math>. Note that <math>\displaystyle\eta>0</math> since <math>b\neq 0</math>. Therefore, we can find a <math>\displaystyle\delta>0</math> so that <math>\displaystyle|g(x)-b|<\eta</math> whenever <math>\displaystyle 0<|x-c|<\delta</math>. So, if <math>\displaystyle 0<|x-c|<\delta</math>, then <math>|g(x)-b|<\varepsilon</math> since <math>\eta\leq\frac{1}{2}\varepsilon<\varepsilon</math>. Also, by the triangle inequality we have <math>|b|-|g(x)|\leq|b-g(x)|<\eta</math>. But also, <math>\eta\leq\frac{1}{2}|b|</math>, so we get <math>|g(x)|>|b|-\eta\geq|b|-\frac{1}{2}|b|=\frac{1}{2}|b|</math>. Putting these together, we find that for any <math>x</math> such that <math>\displaystyle 0<|x-c|<\delta</math>,

:<math>\bigg|\left(\frac{1}{g}\right)(x)-\frac{1}{b}\bigg|=\frac{|b-g(x)|}{|g(x)||b|}<\frac{\varepsilon}{\left(\frac{1}{2}|b|\right)|b|}=2\frac{\varepsilon}{|b|^2}</math>,

which can be made as small as we like.

C) Suppose that the limit did exist, say <math>\lim_{x\to 0}f_2(x)=b</math>. Then setting <math>\varepsilon=\frac{1}{4}</math>, there is a <math>\displaystyle\delta>0</math> so that <math>|f_2(x)-b|<\varepsilon</math> whenever <math>\displaystyle 0<|x-0|<\delta</math>. In particular, <math>|f_2(-\frac{1}{2}\delta)-b|<\varepsilon</math> and <math>|f_2(\frac{1}{2}\delta)-b|<\varepsilon</math>.

Since <math>-\frac{1}{2}\delta<0</math>, we have <math>f_2(-\frac{1}{2}\delta)=-(-\frac{1}{2}\delta)^2-\frac{1}{2}=-(\frac{1}{2}\delta)^2-\frac{1}{2}</math>, and since <math>\frac{1}{2}\delta>0</math>, we have <math>f_2(\frac{1}{2}\delta)=(\frac{1}{2}\delta)^2+\frac{1}{2}</math>.

So, <math>|f_2(\frac{1}{2}\delta)-f_2(-\frac{1}{2}\delta)|=|2(\frac{1}{2}\delta)^2+1|=2(\frac{1}{2}\delta)^2+1>1</math>.

Now, the triangle inequality gives

:<math>1<|f_2(\frac{1}{2}\delta)-f_2(-\frac{1}{2}\delta)|=|f_2(\frac{1}{2}\delta)-b+b-f_2(-\frac{1}{2}\delta)|\leq |f_2(\frac{1}{2}\delta)-b|+|b-f_2(-\frac{1}{2}\delta)|<2\varepsilon=\frac{1}{2}</math>.

But of course, <math>1\nless\frac{1}{2}</math>, so we obtain a contradiction. This shows that <math>\lim_{x\to 0}f_2(x)</math> does not exist.

D)

:i) <math>\displaystyle 7</math>

:ii) <math>\displaystyle 17</math>

:iii) <math>\displaystyle 0</math>

:iv) <math>\frac{1}{4}</math>

:v) does not exist

E)

:i) Let <math>h</math> be a nonzero real number. Then for any <math>c</math>,

:<math>\frac{(c+h)^2-c^2}{h}=\frac{(c^2+2ch+h^2)-c^2}{h}=\frac{2ch+h^2}{h}=2c+h</math>.

The limit of the right hand side as <math>h</math> approaches <math>0</math> exists and equals <math>2c</math> using A) and B). Thus, <math>f</math> is differentiable everywhere, and <math>f'(c)=2c</math>.

:ii) Note that for nonzero <math>h</math>,

:<math>\frac{g(0+h)-g(0)}{h}=\frac{|0+h|-|0|}{h}=\frac{|h|}{h}</math>.

We notice that for <math>h>0</math>,

:<math>\frac{g(0+h)-g(0)}{h}=\frac{|h|}{h}=\frac{h}{h}=1</math>

while for <math>h<0</math>,

:<math>\frac{g(0+h)-g(0)}{h}=\frac{|h|}{h}=\frac{-h}{h}=-1</math>.

Then, as in C), <math>\lim_{h\to 0}\frac{g(0+h)-g(0)}{h}</math> does not exist. So, <math>g</math> is not differentiable at <math>0</math>.
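The two difference quotients in E) can be compared numerically. This is a minimal sketch with our own helper names (<code>difference_quotient</code>, <code>square</code>); the point <math>c=3</math> is an arbitrary choice for illustration.

```python
def difference_quotient(func, c, h):
    """(func(c + h) - func(c)) / h for nonzero h."""
    return (func(c + h) - func(c)) / h

def square(x):
    return x * x

for h in (0.1, 0.01, 0.001):
    print(
        h,
        difference_quotient(square, 3.0, h),   # close to 2c + h = 6 + h
        difference_quotient(abs, 0.0, h),      # 1 for h > 0
        difference_quotient(abs, 0.0, -h),     # -1 for h < 0
    )
```

For <math>x^2</math> the quotient settles toward <math>2c</math>, while for <math>|x|</math> it stays at <math>1</math> on the right and <math>-1</math> on the left, so no single value <math>b</math> can satisfy Definition 2.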

----

== [[questions_limits_of_functions|Questions and comments]] ==

If you have any questions, comments, etc., please post them on [[questions_limits_of_functions|this page]].

----

Latest revision as of 13:55, 13 May 2014

Contents

Limits of Functions

by: Michael Yeh, proud Member of the Math Squad.

keyword: tutorial, limit, function, sequence

Introduction

Provided here is a brief introduction to the concept of "limit," which features prominently in calculus. To help motivate the definition, we first consider continuity at a point. Unless otherwise mentioned, all functions here will have domain and range $ \mathbb{R} $, the real numbers. Words such as "all," "every," "each," "some," and "there is/are" are quite important here; read carefully!

Continuity at a point

Let's consider the following three functions along with their graphs (in blue). The red dots in each correspond to $ x=0 $, e.g. for $ f_1 $, the red dot is the point $ (0,f_1(0))=(0,0) $. Ignore the red dashed lines for now; we will explain them later.

- $ \displaystyle f_1(x)=x^3 $

- $ f_2(x)=\begin{cases}-x^2-\frac{1}{2} &\text{if}~x<0\\ x^2+\frac{1}{2} &\text{if}~x\geq 0\end{cases} $

- $ f_3(x)=\begin{cases} \sin\left(\frac{1}{x}\right) &\text{if}~x\neq 0\\ 0 &\text{if}~x=0\end{cases} $

We can see from the graphs that $ f_1 $ is "continuous" at $ 0 $, and that $ f_2 $ and $ f_3 $ are "discontinuous" at 0. But, what exactly do we mean? Intuitively, $ f_1 $ seems to be continuous at $ 0 $ because $ f_1(x) $ is close to $ f_1(0) $ whenever $ x $ is close to $ 0 $. On the other hand, $ f_2 $ appears to be discontinuous at $ 0 $ because there are points $ x $ which are close to $ 0 $ but such that $ f_2(x) $ is far away from $ f_2(0) $. The same observation applies to $ f_3 $.

Let's make these observations more precise. First, we will try to estimate $ f_1(0) $ with error at most $ 0.25 $, say. In the graph of $ f_1 $, we have marked off a band of width $ 0.5 $ about $ f_1(0) $. So, any point in the band will provide a good approximation here. As a first try, we might think that if $ x $ is close enough to $ 0 $, then $ f_1(x) $ will be a good estimate of $ f_1(0) $. Indeed, we see from the graph that for any $ x $ in the interval $ (-\sqrt[3]{0.25},\sqrt[3]{0.25}) $, $ f_1(x) $ lies in the band (or if we wish to be more pedantic, we would say that $ (x,f_1(x)) $ lies in the band). So, "close enough to $ 0 $" here means in the interval $ (-\sqrt[3]{0.25},\sqrt[3]{0.25}) $; note that any point which is close enough to $ 0 $ provides a good approximation of $ f_1(0) $.

There is nothing special about our error bound $ 0.25 $. Choose a positive number $ \varepsilon $, and suppose we would like to estimate $ f_1(0) $ with error at most $ \varepsilon $. Then, as above, we can find some interval $ \displaystyle(-\delta,\delta) $ about $ 0 $ (if you like to be concrete, any $ \displaystyle\delta $ such that $ 0<\delta<\sqrt[3]{\varepsilon} $ will do) so that for any $ x $ in $ \displaystyle(-\delta,\delta) $, $ f_1(x) $ will be a good estimate for $ f_1(0) $, i.e. $ f_1(x) $ will be no more than $ \varepsilon $ away from $ f_1(0) $.

Can we do the same for $ f_2 $? That is, if $ x $ is close enough to $ 0 $, then will $ f_2(x) $ be a good estimate of $ f_2(0) $? Well, we see from the graph that $ f_2(0.25) $ provides a good approximation to $ f_2(0) $. But if $ 0.25 $ is close enough to $ 0 $, then certainly $ -0.25 $ should be too; however, the graph shows that $ f_2(-0.25) $ is not a good estimate of $ f_2(0) $. In fact, for any $ x>0 $, $ f_2(-x) $ will never be a good approximation for $ f_2(0) $, even though $ x $ and $ -x $ are the same distance from $ 0 $.

In contrast to $ f_1 $, we see that for any interval $ \displaystyle(-\delta,\delta) $ about $ 0 $, we can find an $ x $ in $ \displaystyle(-\delta,\delta) $ such that $ f_2(x) $ is more than $ 0.25 $ away from $ f_2(0) $.

The same is true for $ f_3 $. Whenever we find an $ x $ such that $ f_3(x) $ lies in the band, we can always find a point $ y $ such that 1) $ y $ is just as close or closer to $ 0 $ and 2) $ f_3(y) $ lies outside the band. So, it is not true that if $ x $ is close enough to $ 0 $, then $ f_3(x) $ will be a good estimate for $ f_3(0) $.

Let's summarize what we have found. For $ f_1 $, we saw that for each $ \varepsilon>0 $, we can find an interval $ \displaystyle(-\delta,\delta) $ about $ 0 $ ($ \displaystyle\delta $ depends on $ \varepsilon $) so that for every $ x $ in $ \displaystyle(-\delta,\delta) $, $ |f_1(x)-f_1(0)|<\varepsilon $. However, $ f_2 $ does not satisfy this property. More specifically, there is an $ \varepsilon>0 $, namely $ \varepsilon=0.25 $, so that for any interval $ \displaystyle(-\delta,\delta) $ about $ 0 $, we can find an $ x $ in $ \displaystyle(-\delta,\delta) $ such that $ |f_2(x)-f_2(0)|\geq\varepsilon $. The same is true of $ f_3 $.

Now we state the formal definition of continuity at a point. Compare this carefully with the previous paragraph.

DEFINITION 1. Let $ f $ be a function from $ \displaystyle A $ to $ \mathbb{R} $, where $ A\subset\mathbb{R} $. Then $ f $ is continuous at a point $ c\in A $ if for every $ \varepsilon>0 $, there is a $ \displaystyle\delta>0 $ such that $ |f(x)-f(c)|<\varepsilon $ for any $ x $ that satisfies $ \displaystyle|x-c|<\delta $. $ f $ is said to be continuous if it is continuous at every point of $ A $.

In our language above, $ \varepsilon $ is the error bound, and $ \displaystyle\delta $ is our measure of "close enough (to $ c $)." Note that continuity is defined only for points in a function's domain. So, the function $ k(x)=1/x $ is technically continuous because $ 0 $ is not in the domain of $ k $. If, however, we defined $ k(0)=0 $, then $ k $ will no longer be continuous.

The Limit of a Function at a Point

Now, let's consider the two functions $ g_1 $ and $ g_2 $ below. Note that $ g_1 $ is left undefined at $ 0 $.

- $ g_1(x)=\begin{cases}-x^2-x &\text{if}~x<0\\ x&\text{if}~x>0\end{cases} $

- $ g_2(x)=\begin{cases}0 &\text{if}~x\neq 0\\ \frac{1}{2}&\text{if}~x=0\end{cases} $

Recall the function $ f_1 $ from the previous section. We found that it was continuous at $ 0 $ because $ f_1(x) $ is close to $ f_1(0) $ if $ x $ is close enough to $ 0 $. We can do something similar with $ g_1 $ and $ g_2 $ here. From the graph, we can see that $ g_1(x) $ is close to $ 0 $ whenever $ x $ is close enough, but not equal, to $ 0 $. Similarly, we see that $ g_2(x) $ is close to $ 0 $ whenever $ x $ is close enough, but not equal, to $ 0 $. The "not equal" part is important for both $ g_1 $ and $ g_2 $ because $ g_1 $ is undefined at $ x=0 $ while $ g_2 $ has a discontinuity there. The idea is similar to that of continuity, but we ignore whatever happens at $ x=0 $. We are concerned more with how $ g_1 $ and $ g_2 $ behave around $ 0 $ rather than at $ 0 $. This leads to the following definition.

DEFINITION 2. Let $ f $ be a function defined for all real numbers, with possibly finitely many exceptions, and with range $ \mathbb{R} $. Let $ c $ be any real number. We say that the limit of $ f $ at $ c $ is $ b $, or that the limit of $ f(x) $ as $ x $ approaches $ c $ is $ b $, and write $ \lim_{x\to c}f(x)=b $ if for every $ \varepsilon>0 $, there is a $ \displaystyle\delta>0 $ such that $ |f(x)-b|<\varepsilon $ whenever $ \displaystyle 0<|x-c|<\delta $.

This is the same as the definition for continuity, except we ignore what happens at $ c $. We can see this in two places in the above definition. The first is the use of $ b $ instead of $ f(c) $, and the second is the condition $ \displaystyle 0<|x-c|<\delta $, which says that $ x $ is close enough, but not equal, to $ c $. The restriction on the domain of $ f $ in the above definition is not really necessary; if $ f $ has domain $ A\subset\mathbb{R} $, we can define $ \lim_{x\to c}f(x) $ for any $ c $ which is a "limit point" of $ \displaystyle A $. You can consult Rudin's Principles of Mathematical Analysis, for example, if you're interested. But, in most calculus courses, one usually encounters the situation described in the definition.

If you've ever seen Mean Girls, you know that the limit does not always exist. For example, if $ f_2 $ is the function in the previous section, then $ \lim_{x\to 0}f_2(x) $ does not exist. We will show this rigorously in the exercises, but it is clear from the graph of $ f_2 $.

Having defined what a limit is, we make a few remarks about how continuity and limit are related. As a first observation, we can now restate the definition of continuity at a point more succinctly in terms of limits: $ f $ is continuous at $ c $ if and only if $ \displaystyle\lim_{x\to c}f(x)=f(c) $. Now, let's take a look at the functions $ g_1 $ and $ g_2 $ defined earlier. $ g_1 $ is continuous at every point of its domain; however, it is undefined at $ 0 $. But since $ \lim_{x\to 0}g_1(x) $ exists and equals $ 0 $, we can make $ g_1 $ continuous on the entire real line by defining $ g_1(0)=0 $. We note that even though $ \lim_{x\to 0}g_2(x) $ exists, $ g_2 $ is discontinuous at $ 0 $; this is because $ \lim_{x\to 0}g_2(x)=0\neq \frac{1}{2}=g_2(0) $. This shows that the existence of $ \displaystyle\lim_{x\to c}f(x) $ does not imply continuity at $ c $, even though, as we just mentioned, continuity of $ f $ at $ c $ implies the existence of $ \displaystyle\lim_{x\to c}f(x) $.

Limits at Infinity

Let $ f $ be as in Definition 2. So far, we have only discussed $ \displaystyle\lim_{x\to c}f(x) $ when $ c $ is a real number. It is not difficult to modify Definition 2 for when $ c=\pm\infty $. In Definition 2, we considered $ x $ to be "close enough" when $ x $ is in the interval $ \displaystyle(c-\delta,c+\delta) $ and $ x\neq c $. For $ \infty $, the analogue would be that $ x $ belongs to an interval of the form $ \displaystyle(M,\infty) $, where $ M $ is a real number, and similarly for $ -\infty $. So, we say that the limit of $ f $ at $ \infty $ (respectively, $ -\infty $) is $ b $ and write $ \displaystyle\lim_{x\to\infty}f(x)=b $ (respectively, $ \displaystyle\lim_{x\to-\infty}f(x)=b $) if for every $ \varepsilon>0 $, there is a real number $ M $ so that $ |f(x)-b|<\varepsilon $ whenever $ x>M $ (respectively, $ x<M $).

Exercises

The triangle inequality will be handy for a few of these.

A) Show that any constant function is continuous.

B) Suppose $ \lim_{x\to c}f(x)=a $ and $ \lim_{x\to c}g(x)=b $. Show that

- i)$ \lim_{x\to c}(f+g)(x)=a+b $,

- ii)$ \lim_{x\to c}(f-g)(x)=a-b $, and

- iii)$ \lim_{x\to c}(fg)(x)=ab $.

Furthermore, if $ b\neq0 $, show that

- iv)$ \lim_{x\to c}\left(\frac{f}{g}\right)(x)=\frac{a}{b} $.

As a consequence of A) and B), together with the continuity of the identity function $ x\mapsto x $, any polynomial function is continuous.

C) Show that $ \lim_{x\to 0}f_2(x) $ does not exist.

D) Compute the following limits (if they exist):

- i)$ \lim_{x\to0}7 $

- ii)$ \lim_{x\to2}(5x^2-3) $

- iii)$ \lim_{x\to+\infty}\frac{2x^2-3x^2-1}{x^3+5} $

- iv)$ \lim_{x\to+\infty}\frac{x^3-10x^2+4}{4x^3-2} $

- v)$ \lim_{x\to-2}\frac{5x^2-3}{x+2} $

E) (Derivatives) Let $ f $ be a function from $ \mathbb{R} $ to $ \mathbb{R} $ and $ c\in\mathbb{R} $. If $ \lim_{h\to 0}\frac{f(c+h)-f(c)}{h} $ exists, we say that $ f $ is differentiable at $ c $. The above limit is then called the "derivative of $ f $ at $ c $" and is denoted by $ \displaystyle f'(c) $. Intuitively, $ f'(c) $ is the instantaneous rate of change of $ f $ at the point $ c $; it is also the slope of the line tangent to the graph of $ f $ at the point $ (c,f(c)) $.

- i) Let $ \displaystyle f(x)=x^2 $. Show that $ f $ is differentiable everywhere, and compute $ \displaystyle f' $.

- ii) Let $ \displaystyle g(x)=|x| $. Show that $ g $ is not differentiable at $ 0 $.

Solutions to Exercises

A) Let $ f(x)=k $ be a constant function with value $ k $ and let $ c $ be a real number. Now, let $ \varepsilon>0 $ be given and set $ \displaystyle\delta=1 $. Then for any $ x $ in the interval $ \displaystyle(c-\delta,c+\delta) $, $ |f(x)-f(c)|=|k-k|=0<\varepsilon $. Since $ c $ was arbitrary, $ f $ is continuous at every point.

B) Let $ \varepsilon>0 $ be given. Then we can find a $ \displaystyle\delta_1>0 $ so that $ |f(x)-a|<\varepsilon $ whenever $ \displaystyle0<|x-c|<\delta_1 $, and a $ \displaystyle\delta_2>0 $ so that $ |g(x)-b|<\varepsilon $ whenever $ \displaystyle0<|x-c|<\delta_2 $. Set $ \displaystyle\delta=\min\{\delta_1,\delta_2\} $. Then, for any $ x $ such that $ \displaystyle0<|x-c|<\delta $,

- i)

- $ |(f+g)(x)-(a+b)|=|(f(x)-a)+(g(x)-b)|\leq|f(x)-a|+|g(x)-b|<\varepsilon+\varepsilon=2\varepsilon $.

Also, it doesn't really matter that we have $ 2\varepsilon $ on the right side instead of $ \varepsilon $ because $ 2\varepsilon $ can be made as small as we like. That is, we can think of $ 2\varepsilon $ as our error bound, instead of $ \varepsilon $.

- ii)

- $ |(f-g)(x)-(a-b)|=|(f(x)-a)+(b-g(x))|\leq|f(x)-a|+|b-g(x)|<2\varepsilon $.

- iii) Note that by the triangle inequality, $ |f(x)|-|a|\leq|f(x)-a|<\varepsilon $ so that $ |f(x)|<|a|+\varepsilon $. Then,

- $ \begin{align}|(fg)(x)-ab|&=|f(x)g(x)-f(x)b+f(x)b-ab|\\ &\leq|f(x)g(x)-f(x)b|+|f(x)b-ab|\\ &=|f(x)||g(x)-b|+|f(x)-a||b|\\ &\leq (|a|+\varepsilon)|g(x)-b|+|f(x)-a||b|\\ &<(|a|+\varepsilon)\varepsilon+\varepsilon|b|\\ &=(|a|+|b|+\varepsilon)\varepsilon\end{align} $

Again, we are fine since $ (|a|+|b|+\varepsilon)\varepsilon $ can be made as small as we like.

- iv) By part (iii), it will suffice to show that $ \lim_{x\to c}\left(\frac{1}{g}\right)(x)=\frac{1}{b} $. Let $ \varepsilon>0 $ and set $ \eta=\frac{1}{2}\min\{\varepsilon,|b|\} $. Note that $ \displaystyle\eta>0 $ since $ b\neq 0 $. Therefore, we can find a $ \displaystyle\delta>0 $ so that $ \displaystyle|g(x)-b|<\eta $ whenever $ \displaystyle 0<|x-c|<\delta $. So, if $ \displaystyle 0<|x-c|<\delta $, then $ |g(x)-b|<\varepsilon $ since $ \eta\leq\frac{1}{2}\varepsilon<\varepsilon $. Also, by the triangle inequality we have $ |b|-|g(x)|\leq|b-g(x)|<\eta $. But also, $ \eta\leq\frac{1}{2}|b| $, so we get $ |g(x)|>|b|-\eta\geq|b|-\frac{1}{2}|b|=\frac{1}{2}|b| $. In particular, $ g(x)\neq 0 $, so $ \frac{1}{g(x)} $ is defined. Putting these together, we find that for any $ x $ such that $ \displaystyle 0<|x-c|<\delta $,

- $ \bigg|\left(\frac{1}{g}\right)(x)-\frac{1}{b}\bigg|=\frac{|b-g(x)|}{|g(x)||b|}<\frac{\varepsilon}{\left(\frac{1}{2}|b|\right)|b|}=2\frac{\varepsilon}{|b|^2} $.
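The bound from part iii) can be spot-checked numerically. The sketch below draws random values $ u $ and $ v $ (standing in for $ f(x) $ and $ g(x) $) with $ |u-a|<\varepsilon $ and $ |v-b|<\varepsilon $, and tests whether $ |uv-ab|<(|a|+|b|+\varepsilon)\varepsilon $. The function name `product_bound_holds` and its parameters are hypothetical, chosen only for this illustration; passing the check is evidence, not a proof.

```python
import random

def product_bound_holds(a, b, eps, trials=1000, seed=0):
    """Spot-check |u*v - a*b| < (|a| + |b| + eps) * eps
    for u, v with |u - a| < eps and |v - b| < eps."""
    rng = random.Random(seed)
    bound = (abs(a) + abs(b) + eps) * eps
    for _ in range(trials):
        # Stay slightly inside the open intervals (a - eps, a + eps)
        # and (b - eps, b + eps).
        u = a + rng.uniform(-0.99, 0.99) * eps
        v = b + rng.uniform(-0.99, 0.99) * eps
        if not abs(u * v - a * b) < bound:
            return False
    return True

print(product_bound_holds(2.0, -3.0, 0.1))
print(product_bound_holds(0.0, 5.0, 0.01))
```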

C) Suppose that the limit did exist, say $ \lim_{x\to 0}f_2(x)=b $. Then setting $ \varepsilon=\frac{1}{4} $, there is a $ \displaystyle\delta>0 $ so that $ |f_2(x)-b|<\varepsilon $ whenever $ \displaystyle 0<|x-0|<\delta $. In particular, $ |f_2(-\frac{1}{2}\delta)-b|<\varepsilon $ and $ |f_2(\frac{1}{2}\delta)-b|<\varepsilon $.

Since $ -\frac{1}{2}\delta<0 $, we have $ f_2(-\frac{1}{2}\delta)=-(-\frac{1}{2}\delta)^2-\frac{1}{2}=-(\frac{1}{2}\delta)^2-\frac{1}{2} $, and since $ \frac{1}{2}\delta>0 $, we have $ f_2(\frac{1}{2}\delta)=(\frac{1}{2}\delta)^2+\frac{1}{2} $.

So, $ |f_2(\frac{1}{2}\delta)-f_2(-\frac{1}{2}\delta)|=|2(\frac{1}{2}\delta)^2+1|=2(\frac{1}{2}\delta)^2+1>1 $.

Now, the triangle inequality gives

- $ 1<|f_2(\frac{1}{2}\delta)-f_2(-\frac{1}{2}\delta)|=|f_2(\frac{1}{2}\delta)-b+b-f_2(-\frac{1}{2}\delta)|\leq |f_2(\frac{1}{2}\delta)-b|+|b-f_2(-\frac{1}{2}\delta)|<2\varepsilon=\frac{1}{2} $.

But of course, $ 1\nless\frac{1}{2} $, so we obtain a contradiction. This shows that $ \lim_{x\to 0}f_2(x) $ does not exist.

D)

- i) $ \displaystyle 7 $

- ii) $ \displaystyle 17 $

- iii) $ \displaystyle 0 $

- iv) $ \frac{1}{4} $

- v) does not exist
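These answers can be sanity-checked numerically. The sketch below evaluates the expressions from parts ii) through v) exactly as they are written in the exercise, at points near the limit point or at large $ x $. The helper names `d_ii`, `d_iii`, `d_iv`, and `d_v` are ours. A numerical table can suggest a limit but does not establish it.

```python
def d_ii(x):
    return 5 * x**2 - 3

def d_iii(x):
    # Numerator kept exactly as stated in the exercise.
    return (2 * x**2 - 3 * x**2 - 1) / (x**3 + 5)

def d_iv(x):
    return (x**3 - 10 * x**2 + 4) / (4 * x**3 - 2)

def d_v(x):
    return (5 * x**2 - 3) / (x + 2)

# ii) values near x = 2 should be near 17.
print([d_ii(2 + h) for h in (1e-3, -1e-3)])

# iii), iv) values at large x should be near 0 and 1/4.
for x in (1e3, 1e6):
    print(x, d_iii(x), d_iv(x))

# v) values just left and right of -2 are huge with opposite signs.
print(d_v(-2 - 1e-6), d_v(-2 + 1e-6))
```

For part v), the numerator approaches $ 17 $ while the denominator approaches $ 0 $, which is why the quotient grows without bound and changes sign across $ x=-2 $.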

E)

- i) Let $ h $ be a nonzero real number. Then for any $ c $,

- $ \frac{(c+h)^2-c^2}{h}=\frac{(c^2+2ch+h^2)-c^2}{h}=\frac{2ch+h^2}{h}=2c+h $.

The limit of the right hand side as $ h $ approaches $ 0 $ exists and equals $ 2c $ using A) and B). Thus, $ f $ is differentiable everywhere, and $ f'(c)=2c $.

- ii) Note that for nonzero $ h $,

- $ \frac{g(0+h)-g(0)}{h}=\frac{|0+h|-|0|}{h}=\frac{|h|}{h} $.

We notice that for $ h>0 $,

- $ \frac{g(0+h)-g(0)}{h}=\frac{|h|}{h}=\frac{h}{h}=1 $

while for $ h<0 $,

- $ \frac{g(0+h)-g(0)}{h}=\frac{|h|}{h}=\frac{-h}{h}=-1 $.

Then, as in C), $ \lim_{h\to 0}\frac{g(0+h)-g(0)}{h} $ does not exist. So, $ g $ is not differentiable at $ 0 $.
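Both parts of E) can be illustrated by computing difference quotients directly. In this sketch, `difference_quotient` and `square` are hypothetical helper names and $ c=3 $ is an arbitrary choice; the code only samples a few values of $ h $.

```python
def difference_quotient(func, c, h):
    """(func(c + h) - func(c)) / h for nonzero h."""
    return (func(c + h) - func(c)) / h

def square(x):
    return x * x

c = 3.0
hs = (1e-1, 1e-3, 1e-5)

# For f(x) = x^2 the quotient equals 2c + h, so it approaches 2c = 6.
square_quotients = [difference_quotient(square, c, h) for h in hs]

# For g(x) = |x| at 0 the quotient is 1 for h > 0 and -1 for h < 0.
right_quotients = [difference_quotient(abs, 0.0, h) for h in hs]
left_quotients = [difference_quotient(abs, 0.0, -h) for h in hs]

print(square_quotients)
print(right_quotients, left_quotients)
```

The quotients for $ x^2 $ close in on $ 2c $, while those for $ |x| $ stay at $ 1 $ on the right and $ -1 $ on the left no matter how small $ h $ is.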

Questions and comments

If you have any questions, comments, etc., please post them on this page.

The Spring 2014 Math Squad was supported by an anonymous gift to Project Rhea. If you enjoyed reading these tutorials, please help Rhea "help students learn" with a donation to this project. Your contribution is greatly appreciated.