
[[Category:math squad]] | [[Category:math squad]] | ||

| − | = | + | keywords: Jacobian, injective, stability, critical points, saddles, tangent vectors, differential equations. |

| + | |||

| + | = '''Jacobians and their applications''' = | ||

| + | |||

| + | by [[user:ruanj | Joseph Ruan]], proud Member of [[Math_squad | the Math Squad]]. | ||

| − | |||

---- | ---- | ||

| − | == | + | =='''Basic Definition'''== |

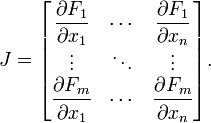

The Jacobian Matrix is just a matrix that takes the partial derivatives of each element of a transformation. In general, the Jacobian Matrix of a transformation F, looks like this: | The Jacobian Matrix is just a matrix that takes the partial derivatives of each element of a transformation. In general, the Jacobian Matrix of a transformation F, looks like this: | ||

| − | [[Image:JacobianGen.png]] < | + | [[Image:JacobianGen.png]] '''F<sub>1</sub>,F<sub>2</sub>, F<sub>3</sub>'''... are each of the elements of the output vector and '''x<sub>1</sub>,x<sub>2</sub>, x<sub>3</sub>''' ... are each of the elements of the input vector. |

| − | < | + | |

| − | So for example, in a 2 dimensional case, let T be a transformation such that | + | So for example, in a 2 dimensional case, let T be a transformation such that T(u,v)=<x,y> then the Jacobian matrix of this function would look like this: |

| − | then the Jacobian matrix of this function would look like this: | + | |

<math>J(u,v)=\begin{bmatrix} | <math>J(u,v)=\begin{bmatrix} | ||

\frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ | \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ | ||

\frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}</math> | \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}</math> | ||

| + | |||

This Jacobian matrix notably holds all of the partial derivatives of the transformation with respect to each of the variables. Each row therefore records how one particular output element changes with respect to each of the input elements, so the matrix as a whole describes how a change in any input element affects the output elements.

To help illustrate making Jacobian matrices, let's do some examples: | To help illustrate making Jacobian matrices, let's do some examples: | ||

==== Example #1: ==== | ==== Example #1: ==== | ||

| − | |||

| − | |||

| − | |||

What would be the Jacobian Matrix of this Transformation? | What would be the Jacobian Matrix of this Transformation? | ||

| + | |||

| + | <font size=4><math>T(u,v) = <u\times \cos v, u\times \sin v> </math> </font>. | ||

===Solution:=== | ===Solution:=== | ||

<font size = 4> | <font size = 4> | ||

| − | <math>x=u | + | <math>x=u \times \cos v \longrightarrow \frac{\partial x}{\partial u}= \cos v , \; \frac{\partial x}{\partial v} = -u\times \sin v</math> |

| − | <math>y=u | + | <math>y=u\times\sin v \longrightarrow \frac{\partial y}{\partial u}= \sin v , \; \frac{\partial y}{\partial v} = u\times \cos v</math> |

</font> | </font> | ||

| − | + | <math>J(u,v)=\begin{bmatrix} | |

| − | + | ||

| − | <math>\begin{bmatrix} | + | |

\frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ | \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ | ||

\frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}= | \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}= | ||

\begin{bmatrix} | \begin{bmatrix} | ||

| − | \cos v & -u | + | \cos v & -u\times \sin v \\ |

| − | \sin v & u | + | \sin v & u\times \cos v \end{bmatrix} |

</math> | </math> | ||
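The analytic Jacobian from Example #1 can be sanity-checked numerically. The sketch below is only an illustration: the helper names (`polar_map`, `numerical_jacobian`, `analytic_polar_jacobian`), the sample point, and the step size are assumptions, not part of the original tutorial. It approximates each partial derivative with a central difference and compares the result with the matrix derived above.

```python
import math

def polar_map(u, v):
    """T(u, v) = <u cos v, u sin v> from Example #1."""
    return (u * math.cos(v), u * math.sin(v))

def numerical_jacobian(f, point, h=1e-6):
    """Approximate the Jacobian of f at `point` with central differences.

    Row i holds the partial derivatives of output i and column j
    corresponds to input j, matching the layout used in this tutorial.
    """
    n_in = len(point)
    n_out = len(f(*point))
    jac = [[0.0] * n_in for _ in range(n_out)]
    for j in range(n_in):
        plus = list(point)
        minus = list(point)
        plus[j] += h
        minus[j] -= h
        f_plus = f(*plus)
        f_minus = f(*minus)
        for i in range(n_out):
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2 * h)
    return jac

def analytic_polar_jacobian(u, v):
    """The matrix derived by hand in Example #1."""
    return [[math.cos(v), -u * math.sin(v)],
            [math.sin(v), u * math.cos(v)]]

u0, v0 = 2.0, 0.7  # arbitrary sample point (an assumption for illustration)
approx = numerical_jacobian(polar_map, (u0, v0))
exact = analytic_polar_jacobian(u0, v0)
max_error = max(abs(approx[i][j] - exact[i][j])
                for i in range(2) for j in range(2))
print(max_error)  # should be tiny if the hand derivation is right
```

The same helper accepts maps with a different number of inputs and outputs, in which case the returned matrix is not square.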

| − | |||

| − | |||

==== Example #2: ==== | ==== Example #2: ==== | ||

| − | + | What would be the Jacobian Matrix of this Transformation? | |

| − | <font size=4><math>T(u,v) = <u, v, u | + | <font size=4><math>T(u,v) = <u, v, u^v>,u>0 </math> </font>. |

| − | |||

===Solution:=== | ===Solution:=== | ||

Notice that this matrix will not be square because the input and output have different dimensions (two inputs, three outputs), so the transformation cannot serve as an invertible change of coordinates.

<font size = 4>
<math>x=u \longrightarrow \frac{\partial x}{\partial u}= 1 , \; \frac{\partial x}{\partial v} = 0</math>

<math>y=v \longrightarrow \frac{\partial y}{\partial u}=0 , \; \frac{\partial y}{\partial v} = 1</math>

<math>z=u^v \longrightarrow \frac{\partial z}{\partial u}= u^{v-1}\times v, \; \frac{\partial z}{\partial v} = u^v\times \ln(u)</math>

</font>

<math>J(u,v)=\begin{bmatrix}
\frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\
\frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \\
\frac{\partial z}{\partial u} & \frac{\partial z}{\partial v} \end{bmatrix}=
\begin{bmatrix}
1 & 0 \\
0 & 1 \\
u^{v-1}\times v & u^v\times \ln(u)\end{bmatrix}
</math>

==== Example #3: ==== | ==== Example #3: ==== | ||

| − | + | What would be the Jacobian Matrix of this Transformation? | |

| + | |||

| + | <font size=4><math>T(u,v) = <\tan (uv)> </math> </font>. | ||

| − | |||

| − | |||

===Solution:=== | ===Solution:=== | ||

Notice that this matrix will not be square because the input and output have different dimensions (two inputs, one output); in particular, the transformation is not injective.

<font size = 4><math>x=\tan(uv) \longrightarrow \frac{\partial x}{\partial u}= \sec^2(uv)\times v, \; \frac{\partial x}{\partial v} = \sec^2(uv)\times u</math></font>

<math>J(u,v)= \begin{bmatrix}\frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \end{bmatrix}=\begin{bmatrix}\sec^2(uv)\times v & \sec^2(uv)\times u \end{bmatrix}</math>

| + | |||

| + | |||

| + | |||

| + | =='''Application #1: Jacobian Determinants'''== | ||

| + | |||

| + | The determinant of Example #1 gives: | ||

| + | |||

| + | <font size=5> <math> \left|\begin{matrix} | ||

| + | \cos v & -u \times \sin v \\ | ||

| + | \sin v & u \times \cos v \end{matrix}\right|=~~ u \cos^2 v + u \sin^2 v =~~ u </math></font> | ||

| + | |||

Notice that, when an integral is changed from Cartesian coordinates (dxdy) to polar coordinates <math> (drd\theta)</math>, the area elements are related by:

| + | |||

| + | <font size=4><math> dxdy=r\times drd\theta=u\times dudv </math></font> | ||

| + | |||

It is natural to generalize, then, that the change from one set of coordinates to another set is

<font size=4><math> dC_2=\left|\det(J(T))\right| dC_1 </math></font>

where C1 is the first set of coordinates, C2 is the second set of coordinates, T is the transformation from C1 to C2, and det(J(T)) is the determinant of the Jacobian matrix of T.

| + | |||

| + | It is important to notice several aspects: first, the determinant is assumed to exist and be non-zero, and therefore the Jacobian matrix must be square and invertible. This makes sense because when changing coordinates, it should be possible to change back. | ||

| + | |||

| + | Moreover, recall from linear algebra that, in a two dimensional case, the determinant of a matrix of two vectors describes the area of the parallelogram drawn by it, or more accurately, it describes the scale factor between the unit square and the area of the parallelogram. If we extend the analogy, the determinant of the Jacobian would describe some sort of scale factor change from one set of coordinates to the other. Here is a picture that should help: | ||

| + | |||

| + | [[Image: JacobianPic2.png]] | ||
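The scale-factor interpretation can be tried out numerically on the polar map of Example #1. The sketch below is only an illustration under stated assumptions: the helper names (`shoelace_area`, `area_ratio`) and the size of the test square are hypothetical. It maps the four corners of a small square in the (u,v) plane through T, measures the area of the resulting quadrilateral, and divides by the area of the original square. The ratio should be close to the Jacobian determinant, which is u for this map.

```python
import math

def polar_map(u, v):
    """T(u, v) = <u cos v, u sin v> from Example #1."""
    return (u * math.cos(v), u * math.sin(v))

def shoelace_area(points):
    """Area of a polygon given its vertices in order (shoelace formula)."""
    total = 0.0
    for k in range(len(points)):
        x1, y1 = points[k]
        x2, y2 = points[(k + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2

def area_ratio(u, v, side=1e-3):
    """Image area divided by original area for a small square at (u, v)."""
    corners = [(u, v), (u + side, v), (u + side, v + side), (u, v + side)]
    image = [polar_map(a, b) for a, b in corners]
    return shoelace_area(image) / side ** 2

for u in (0.5, 1.0, 2.0, 3.0):
    # Each printed ratio should be close to u, the determinant found above.
    print(u, area_ratio(u, 0.3))
```

The agreement is only approximate because the image of the square has curved edges; shrinking `side` tightens it.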

| + | |||

This is the general idea behind change of variables. It is easy to see that the Jacobian method matches up with one-dimensional change of variables (taking u > 0 so that the absolute value can be dropped):

<math>T(u)=u^2=x,~~~~~~~~ J(u)=\begin{bmatrix}\frac{\partial x}{\partial u}\end{bmatrix}=\begin{bmatrix}2u\end{bmatrix},~~~~~~~ dx=\left|J(u)\right|\times du=2u\times du </math>

| + | |||

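Be VERY careful when substituting. It should always be the case that

<math> \left|J(\vec u)\right| \times d\vec x = d\vec u,~~~~ \left|J(\vec x)\right| \times d\vec u = d\vec x </math>,

where J(u) is the Jacobian of the u-variables taken with respect to the x-variables, and J(x) is the Jacobian of the x-variables taken with respect to the u-variables.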

| + | |||

| + | Let's do some examples to show what I mean. | ||

| + | |||

| + | ====Example #4:==== | ||

| + | Compute the following expression: | ||

| + | |||

| + | <font size=4><math> \iint(x^2+y^2)dA </math></font> | ||

| + | |||

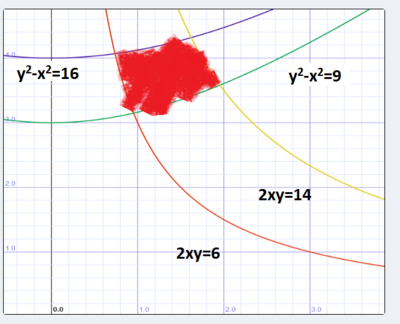

where the region of integration is bounded by y<sup>2</sup>-x<sup>2</sup>=9, y<sup>2</sup>-x<sup>2</sup>=16, 2xy=14, 2xy=6.

| + | |||

| + | ====Solution:==== | ||

| + | |||

| + | First, let's graph the region. | ||

| + | |||

| + | [[Image:JacobianPic3.png|400px]] | ||

| + | |||

<math>Let ~~~~ u=2xy, ~~~~ v=y^2-x^2</math>.

Why do we do this? Let's look at the bounds: they run from y<sup>2</sup>-x<sup>2</sup>=9 to y<sup>2</sup>-x<sup>2</sup>=16 and from 2xy=6 to 2xy=14. It'd be quite a simple task if the integral looked something like this:

<math> \int^{16}_9 \int^{14}_6 k(u,v)\mathrm{d}u\mathrm{d}v</math>

However, notice that in this case, instead of substituting x= and y=, we're substituting with u= and v=. Therefore the Jacobian will be written as J(u,v) and the expression will be dudv=|J(u,v)|dxdy.

<font size = 4> | <font size = 4> | ||

| − | <math> | + | <math>u=2xy\longrightarrow \frac{\partial u}{\partial x}= 2y , \; \frac{\partial u}{\partial y} = 2x</math> |

| + | <math>v=y^2-x^2\longrightarrow \frac{\partial v}{\partial x}= -2x , \; \frac{\partial v}{\partial y} =2y</math> | ||

</font> | </font> | ||

| − | + | <math>J(u,v)=\begin{bmatrix} | |

| + | \frac{\partial u}{\partial x} & \frac{\partial u}{\partial y} \\ | ||

| + | \frac{\partial v}{\partial x} & \frac{\partial v}{\partial y} \end{bmatrix}= | ||

| + | \begin{bmatrix} | ||

| + | 2y & 2x \\ | ||

| + | -2x & 2y\end{bmatrix} | ||

| + | </math> | ||

<math> dudv= \left|J(u,v)\right|\times dxdy
\longrightarrow \left|\begin{matrix}
2y & 2x \\
-2x & 2y\end{matrix}\right|\times dxdy=(4y^2+4x^2)dxdy </math>

| + | |||

| + | The new region looks like this: [[Image: JacobianPic4.png|200px]] | ||

| + | |||

| + | Therefore, | ||

| + | |||

| + | <math>\iint(x^2+y^2)dA=\int^{16}_9 \int^{14}_6 (x^2+y^2)/(4y^2+4x^2)\mathrm{d}u\mathrm{d}v=\int^{16}_9 \int^{14}_6 1/4\mathrm{d}u\mathrm{d}v=(16-9)\times (14-6)\times 1/4=14.</math> | ||
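As a rough cross-check of Example #4, the integral can also be estimated directly in the original (x,y) coordinates without any change of variables. The sketch below is a brute-force midpoint Riemann sum. The function name, the hand-picked bounding box, and the grid size are assumptions made for illustration, and it assumes the intended region is the piece in the first quadrant (the same inequalities also describe a mirror-image piece in the third quadrant).

```python
def example4_riemann_sum(n=400):
    """Midpoint Riemann sum of x^2 + y^2 over the first-quadrant region
    9 <= y^2 - x^2 <= 16 and 6 <= 2xy <= 14."""
    # Bounding box chosen by hand so that it contains the whole region.
    x_lo, x_hi = 0.7, 2.0
    y_lo, y_hi = 3.1, 4.4
    dx = (x_hi - x_lo) / n
    dy = (y_hi - y_lo) / n
    total = 0.0
    for i in range(n):
        x = x_lo + (i + 0.5) * dx
        for j in range(n):
            y = y_lo + (j + 0.5) * dy
            inside = (9 <= y * y - x * x <= 16) and (6 <= 2 * x * y <= 14)
            if inside:
                total += (x * x + y * y) * dx * dy
    return total

estimate = example4_riemann_sum()
print(estimate)  # expected to land near 14, up to discretization error
```

The estimate converges slowly because cells straddling the curved boundary are counted as entirely inside or entirely outside, which is one reason the change of variables is the more practical route.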

| + | |||

| + | ====Example #5:==== | ||

| + | Compute the following expression: | ||

| + | |||

| + | <font size=4><math> \iint(1/\sqrt{4x^2+9y^2})dA </math></font> | ||

| + | |||

where the region of integration is bounded by (2x)<sup>2</sup>+(3y)<sup>2</sup>=36.

| + | |||

| + | ====Solution:==== | ||

| + | Again, let's graph the region. | ||

| + | |||

| + | [[Image: JacobianPic5.png|400px]] | ||

| + | |||

| + | There are many ways to approach this, but to start, let's choose the change of variables to be | ||

| + | x=u/2, y=v/3. Why? | ||

| + | |||

| + | <math> (2x)^2+(3y)^2=36\longrightarrow u^2+v^2=36 </math> | ||

| + | |||

| + | The region now looks like this: [[Image: JacobianPic6.png|400px]] | ||

| + | |||

| + | We've turned the region from an ellipse to a circle! | ||

| + | |||

However, notice that in this case, instead of substituting u= and v=, we're substituting with x= and y=. Therefore the Jacobian will be written as J(x,y) and the expression will be dxdy=|J(x,y)|dudv.

| + | |||

| + | <font size = 4> | ||

| + | <math>x=u/2\longrightarrow \frac{\partial x}{\partial u}= 1/2 , \; \frac{\partial x}{\partial v} = 0</math> | ||

| + | |||

| + | <math>y=v/3\longrightarrow \frac{\partial y}{\partial u}=0 , \; \frac{\partial y}{\partial v} =1/3</math> | ||

| + | |||

| + | </font> | ||

| + | |||

| + | <math>J(x,y)=\begin{bmatrix} | ||

| + | \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ | ||

| + | \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}= | ||

\begin{bmatrix} | \begin{bmatrix} | ||

| − | + | 1/2 & 0 \\ | |

| − | </math> | + | 0 & 1/3\end{bmatrix} |

| + | </math> | ||

| + | <math> dxdy=\left|J(x,y)\right| \times dudv | ||

| + | =\left|\begin{matrix} | ||

| + | 1/2 & 0 \\ | ||

| + | 0 & 1/3\end{matrix}\right|\times dudv=(1/6)dudv </math> | ||

<font size=4><math> \iint(1/\sqrt{4x^2+9y^2})dA =\iint 1/6\times 1/\sqrt{u^2+v^2}dudv</math></font>

Now, let's change the coordinates to polar:

<math> r\sin \theta = u~~~~~, r\cos\theta = v,~~~~~ \iint 1/6\times 1/\sqrt{u^2+v^2}dA=1/6\times\iint 1/\sqrt{r^2}\times r\times drd\theta=1/6\times \int^{2\pi}_0 \int^6_0 dr d\theta=1/6\times 12\pi=2\pi</math>
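The value of this integral can be cross-checked by summing the original integrand directly over the ellipse. The sketch below is a brute-force midpoint Riemann sum; the function name and grid sizes are assumptions for illustration. The grid uses an even number of cells in each direction so that no sample point falls on the singularity at the origin.

```python
import math

def example5_riemann_sum(nx=600, ny=400):
    """Midpoint Riemann sum of 1/sqrt(4x^2 + 9y^2) over the ellipse
    (2x)^2 + (3y)^2 <= 36."""
    x_lo, x_hi = -3.0, 3.0   # the ellipse has semi-axes 3 and 2
    y_lo, y_hi = -2.0, 2.0
    dx = (x_hi - x_lo) / nx
    dy = (y_hi - y_lo) / ny
    total = 0.0
    for i in range(nx):
        x = x_lo + (i + 0.5) * dx
        for j in range(ny):
            y = y_lo + (j + 0.5) * dy
            q = 4 * x * x + 9 * y * y
            if q <= 36:
                total += dx * dy / math.sqrt(q)
    return total

estimate = example5_riemann_sum()
print(estimate, 2 * math.pi)  # the two numbers should be close
```

The estimate comes out close to 2π (about 6.28). The match is only approximate because the integrand blows up at the origin and the boundary is curved.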

| − | \cos | + | |

| − | \ | + | |

| − | + | ====Example #6:==== | |

| + | Compute the following expression: | ||

| − | <font size=4><math> | + | <font size=4><math> \iint(\sqrt{(x-y)(x+6y)})dA </math></font> |

where the region of integration is the parallelogram with vertices (0,0), (1,1), (7,0), (6,-1).

====Solution:====

So far, we've only discussed cases where a change of variables is used to make regions easier to integrate over. For this example, a change of variables will be used to make the integrand easier.

First, let's graph the region.

[[Image:JacobianPic5-5.png]]

It would be relatively simple to set up the double integral over this region. However, the integrand is the real problem. And so, to make things simpler, we'll set

| + | <font size = 4> | ||

| + | <math>u=x-y\longrightarrow \frac{\partial u}{\partial x}= 1 , \; \frac{\partial u}{\partial y} = -1</math> | ||

| + | <math>v=x+6y\longrightarrow \frac{\partial v}{\partial x}=1 , \; \frac{\partial v}{\partial y} =6</math> | ||

| + | </font> | ||

| + | Notice that in this case, we're back to substituting from the u= and v= side. | ||

| + | <math>J(u,v)=\begin{bmatrix} | ||

| + | \frac{\partial u}{\partial x} & \frac{\partial u}{\partial y} \\ | ||

| + | \frac{\partial v}{\partial x} & \frac{\partial v}{\partial y} \end{bmatrix}= | ||

| + | \begin{bmatrix} | ||

| + | 1 & -1 \\ | ||

| + | 1 & 6\end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | <math> dudv=\left|J(u,v)\right| \times dxdy | ||

| + | =\left|\begin{matrix} | ||

| + | 1 & -1 \\ | ||

| + | 1 & 6\end{matrix}\right|\times dxdy=(7)dxdy </math> | ||

| + | |||

| + | Let's look at the transformed region. It just so happens that it made a 7 x 7 square! | ||

| + | |||

| + | [[Image: JacobianPic5-6.png|300px]] | ||

| + | |||

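Since dudv=7dxdy, we have dxdy=(1/7)dudv, and the integrand separates into a product:

<font size=4><math> \iint(\sqrt{(x-y)(x+6y)})dA=\frac{1}{7}\int^7_0 \int^7_0 \sqrt{uv}\,dudv=\frac{1}{7}\times\left(\frac{2}{3}\times 7^{3/2}\right)^2=\frac{196}{9} </math></font>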
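The pieces of Example #6 can be checked with a few lines of code. This is a sketch under stated assumptions: the helper names (`to_uv`, `midpoint_integral`) and the number of subintervals are hypothetical. It maps the four vertices into the (u,v) plane, computes the Jacobian determinant of u=x-y, v=x+6y, and evaluates the separated integral with a one-dimensional midpoint rule.

```python
import math

def to_uv(x, y):
    """The substitution used in Example #6."""
    return (x - y, x + 6 * y)

vertices_xy = [(0, 0), (1, 1), (7, 0), (6, -1)]
vertices_uv = [to_uv(x, y) for x, y in vertices_xy]
print(vertices_uv)  # corners of the square [0, 7] x [0, 7]

# Determinant of [[du/dx, du/dy], [dv/dx, dv/dy]] = [[1, -1], [1, 6]].
jac_det = 1 * 6 - (-1) * 1

def midpoint_integral(f, a, b, n=20000):
    """One-dimensional midpoint rule on [a, b] with n subintervals."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))

# sqrt(u v) = sqrt(u) * sqrt(v), so the double integral over the square
# is the square of a single integral; dxdy = dudv / |det|.
root_integral = midpoint_integral(math.sqrt, 0.0, 7.0)
value = root_integral ** 2 / abs(jac_det)
print(value, 196 / 9)
```

Dividing by the determinant, rather than multiplying, reflects that the Jacobian here was built from u= and v= in terms of x and y.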

| + | |||

=='''Application #2: Transforming Tangent Vectors'''==

| + | |||

| + | The second major application of Jacobian Matrices comes from the fact that it is made of the partial derivatives of the transformation with respect to each original element. Therefore, when a function F is transformed by a transformation T, the tangent vector of F at a point is likewise transformed by the Jacobian Matrix. | ||

| + | |||

| + | Here is a picture to illustrate this. Note: this picture was taken from etsu.edu. | ||

| + | |||

| + | [[Image:JacobianPic7.png]]. | ||

| + | |||

| + | In other words, if we transform a function, we can find the new tangent vector at a transformed point. | ||

| + | |||

| + | ====Example #7:==== | ||

| + | <math> <u,v>=<t^2,t^4>,~~~T(u,v)=<u^3-v^3,3uv>=<x,y></math> | ||

| + | |||

| + | Find the velocity vector of T(u,v) at t=1. | ||

| + | |||

| + | ====Solution:==== | ||

| + | <font size = 4> | ||

| + | <math>x=u^3-v^3\longrightarrow \frac{\partial x}{\partial u}= 3u^2 , \; \frac{\partial x}{\partial v} = -3v^2</math> | ||

| + | |||

| + | <math>y=3uv\longrightarrow \frac{\partial y}{\partial u}=3v , \; \frac{\partial y}{\partial v} =3u</math> | ||

| + | |||

| + | </font> | ||

| + | |||

| + | <math>J(x,y)=\begin{bmatrix} | ||

| + | \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ | ||

| + | \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}= | ||

| + | \begin{bmatrix} | ||

| + | 3u^2 & -3v^2 \\ | ||

| + | 3v & 3u\end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | <math> u'(t)=\begin{bmatrix}2t\\4t^3\end{bmatrix}</math> | ||

| + | |||

| + | <math> J(x,y)\times u'(t)=\begin{bmatrix}3u^2 & -3v^2 \\3v & 3u\end{bmatrix} \times \begin{bmatrix}2t\\4t^3\end{bmatrix}= | ||

| + | \begin{bmatrix}3u^2\times 2t-3v^2\times 4t^3\\3v\times 2t+3u\times 4t^3\end{bmatrix}= | ||

| + | \begin{bmatrix}3(t^2)^2\times 2t-3(t^4)^2\times 4t^3\\3(t^4)\times 2t+3(t^2)\times 4t^3\end{bmatrix}= | ||

| + | \begin{bmatrix}6t^5-12t^{11}\\18t^5\end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | Therefore at t=1, the transformed tangent vector is: | ||

| + | |||

| + | <math> \begin{bmatrix}-6\\18\end{bmatrix} </math> | ||
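The result of Example #7 can be double-checked by differentiating the composed curve T(u(t),v(t)) numerically and comparing it with the Jacobian-times-velocity product. The sketch below is an illustration only; all function names and the finite-difference step are assumptions.

```python
def curve(t):
    """<u, v> = <t^2, t^4> from Example #7."""
    return (t ** 2, t ** 4)

def curve_velocity(t):
    """Derivative of the curve with respect to t."""
    return (2 * t, 4 * t ** 3)

def transform(u, v):
    """T(u, v) = <u^3 - v^3, 3uv>."""
    return (u ** 3 - v ** 3, 3 * u * v)

def jacobian_T(u, v):
    """Jacobian of T with respect to (u, v)."""
    return [[3 * u ** 2, -3 * v ** 2],
            [3 * v, 3 * u]]

def transformed_velocity(t):
    """Jacobian of T at the curve point, applied to the curve's velocity."""
    u, v = curve(t)
    du, dv = curve_velocity(t)
    jac = jacobian_T(u, v)
    return (jac[0][0] * du + jac[0][1] * dv,
            jac[1][0] * du + jac[1][1] * dv)

def finite_difference_velocity(t, h=1e-6):
    """Central-difference derivative of T(u(t), v(t))."""
    x1, y1 = transform(*curve(t + h))
    x0, y0 = transform(*curve(t - h))
    return ((x1 - x0) / (2 * h), (y1 - y0) / (2 * h))

print(transformed_velocity(1.0))        # (-6.0, 18.0)
print(finite_difference_velocity(1.0))  # approximately the same vector
```

Both routes are the chain rule in disguise: the second differentiates the composition directly, while the first factors it into the Jacobian and the original tangent vector.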

| + | |||

=='''Application #3: Linearization of Systems of Differential Equations'''==

| + | |||

| + | There is one last major application of Jacobians: approximating systems of Differential equations. Let's take the general expression: | ||

| + | |||

| + | <font size=5><math>\vec x'=f(\vec x,t)</math></font> | ||

| + | |||

Around a critical point <math>\vec x_0</math> (a point where <math>f(\vec x_0,t_0)=0</math>), if f(x,t) is differentiable, the system can be approximated by:

<font size=5><math>\vec x' \approx A(\vec x-\vec x_0) ,~~~~A=J(\vec x_0 ,t_0) </math> </font>

| + | |||

| + | The reason this works is similar to the idea behind one dimensional differentials: | ||

| + | |||

| + | <font size =4><math> dy\approx f'(x)\times dx </math></font> | ||

| + | |||

| + | Why is this important? This can help us check stability for certain systems of differential equations. It is worth mentioning that this linearization works even when there are terms with t in them in the equation. This is because at very small intervals, those terms are small and don't affect the approximation. | ||

| + | |||

| + | ====Example #8:==== | ||

Find the stability of all the critical points of

| + | |||

| + | |||

| + | <math> | ||

| + | \begin{bmatrix} x'\\ y'\\ z'\end{bmatrix}=\begin{bmatrix}z-x\\ 3/(2+x)-3y\\3y-z\end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | ====Solution:==== | ||

| + | To find the critical points, we set x'=y'=z'=0. | ||

| + | |||

| + | |||

| + | <math> | ||

z=x,~~~~ y=x/3,~~~~ 3/(2+x)-x=0 \longrightarrow (x+3)(x-1)=0,~~~~ (1,1/3,1)~~~~ (-3,-1,-3)

| + | </math> | ||

| + | |||

| + | Now let's find the Jacobian at (1,1/3,1) | ||

| + | |||

| + | <math> | ||

| + | |||

| + | J(x',y',z')=\begin{bmatrix}-1 & 0 & 1\\ -3/(2+x)^2 & -3 & 0\\ 0 & 3 & -1 \end{bmatrix}=\begin{bmatrix}-1 & 0 & 1\\ -1/3 & -3 & 0\\ 0 & 3 & -1 \end{bmatrix}. | ||

| + | |||

| + | </math> | ||

| + | |||

| + | The eigenvalues are -3.2055694304005904, -0.8972152847997048 ± 0.6654569511528134i. Since the real parts are all negative, this critical point is locally stable. | ||

| + | |||

| + | Now let's find the Jacobian at (-3,-1,-3) | ||

| + | |||

| + | <math> | ||

| + | |||

| + | J(x',y',z')=\begin{bmatrix}-1 & 0 & 1\\ -3/(2+x)^2 & -3 & 0\\ 0 & 3 & -1 \end{bmatrix}=\begin{bmatrix}-1 & 0 & 1\\ -3 & -3 & 0\\ 0 & 3 & -1 \end{bmatrix}. | ||

| + | |||

| + | </math> | ||

| + | |||

The eigenvalues are -4 and -0.5 ± 1.65831i. Because all of the real parts are negative, this critical point is locally stable.
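Eigenvalues of a 3x3 Jacobian are awkward to find by hand, but a candidate value is easy to test: it must make det(J - λI) vanish. The sketch below does this for both critical points of Example #8 using only complex arithmetic from the standard library. The helper names (`det3`, `char_poly_value`, `jacobian_example8`) are assumptions for illustration, and the candidate values are the ones quoted in the text together with -0.5 ± (√11/2)i for the second point.

```python
import math

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along row 0."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def char_poly_value(matrix, lam):
    """Evaluate det(matrix - lam * I); zero exactly at eigenvalues."""
    shifted = [[matrix[i][j] - (lam if i == j else 0) for j in range(3)]
               for i in range(3)]
    return det3(shifted)

def jacobian_example8(x):
    """Jacobian of the Example #8 system; only x appears in it."""
    return [[-1, 0, 1],
            [-3 / (2 + x) ** 2, -3, 0],
            [0, 3, -1]]

candidates = {
    1.0: [-3.2055694304005904,
          complex(-0.8972152847997048, 0.6654569511528134),
          complex(-0.8972152847997048, -0.6654569511528134)],
    -3.0: [-4.0,
           complex(-0.5, math.sqrt(11) / 2),
           complex(-0.5, -math.sqrt(11) / 2)],
}

for x, values in candidates.items():
    residuals = [abs(char_poly_value(jacobian_example8(x), lam))
                 for lam in values]
    stable = all(complex(lam).real < 0 for lam in values)
    print(x, max(residuals), stable)
```

A useful extra check is that the eigenvalues must sum to the trace of the matrix, which is -5 at both points.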

| + | |||

| + | |||

| + | ====Example #9:==== | ||

| + | |||

Find the stability of all the critical points of

| + | |||

| + | <math> | ||

| + | \begin{bmatrix} x'\\ y'\\ z'\end{bmatrix}=\begin{bmatrix}z+2y^2+x\\ y-z^{3/2}\\ \sin(z) \end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | ====Solution:==== | ||

| + | |||

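One critical point is (0,0,0), and it is the one analyzed here. (Further critical points occur where z is a positive multiple of π, with y=z<sup>3/2</sup> and x=-z-2y<sup>2</sup>.)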

| + | |||

| + | Now, let's do the Jacobian. | ||

| + | |||

| + | <math> | ||

| + | |||

J(x',y',z')=\begin{bmatrix}1 & 4y & 1\\ 0 & 1 & -3/2*z^{1/2}\\ 0 & 0 & \cos(z) \end{bmatrix}=\begin{bmatrix}1 & 0 & 1\\ 0 & 1 & 0\\ 0 & 0 & 1 \end{bmatrix}.

| + | |||

| + | </math> | ||

| + | |||

| + | |||

The only eigenvalue is 1 (repeated three times). Since it is positive, the critical point is unstable.

| + | |||

| + | ====Example #10:==== | ||

Find the stability of all the critical points of

| + | |||

| + | <math> | ||

| + | \begin{bmatrix} x'\\ y'\end{bmatrix}=\begin{bmatrix}y\\ -9\sin(x)-y/5\end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | ====Solution:==== | ||

| + | |||

For critical points, we get that

<math> y=0,~~~~ x= \pi \times k, ~~~~~ k \in \mathbb{Z} </math>

Now, let's do the Jacobian.

<math>J(x',y')=\begin{bmatrix}0 & 1\\ -9\cos(x) & -1/5\end{bmatrix},</math>

<math>Case 1) :x=2\pi k\longrightarrow J(x',y')=\begin{bmatrix}0 & 1\\ -9 & -1/5\end{bmatrix}</math>

<math>Case 2) :x=\pi+2\pi k \longrightarrow J(x',y')=\begin{bmatrix}0 & 1\\ 9 & -1/5\end{bmatrix}</math>

For Case 1, the eigenvalues are approximately -0.1-2.9983i and -0.1+2.9983i. Therefore, those critical points are spiral sinks.

For Case 2, the eigenvalues are approximately 2.9017 and -3.1017. Therefore, those points are saddles.

| + | |||

| + | Since this system is 2-d, it is possible to give a phase portrait and show you that this linearized system is accurate. | ||

| + | |||

| + | [[Image:JacobianPic8.png]] | ||
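For 2-d systems, the eigenvalues follow from the trace and determinant of the Jacobian, so the classification step can be automated. The sketch below is an illustration under stated assumptions: the function names and the tolerance are hypothetical, and the labels cover only the generic cases used in this tutorial. It applies the quadratic formula to the characteristic polynomial λ² - (trace)λ + det and classifies the critical points of the Example #10 system x'=y, y'=-9sin(x)-y/5.

```python
import cmath
import math

def eigenvalues_2x2(m):
    """Eigenvalues of a 2x2 matrix via the quadratic formula."""
    trace = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    root = cmath.sqrt(trace * trace - 4 * det)
    return ((trace + root) / 2, (trace - root) / 2)

def classify(m, tol=1e-12):
    """Name the critical point of the linearized system x' = m x."""
    l1, l2 = eigenvalues_2x2(m)
    if abs(l1.imag) > tol:
        if abs(l1.real) <= tol:
            return "center (linearization inconclusive)"
        return "spiral sink" if l1.real < 0 else "spiral source"
    if l1.real * l2.real < 0:
        return "saddle"
    if l1.real < 0 and l2.real < 0:
        return "nodal sink"
    if l1.real > 0 and l2.real > 0:
        return "nodal source"
    return "degenerate (zero eigenvalue)"

def pendulum_jacobian(x):
    """Jacobian of x' = y, y' = -9 sin(x) - y/5 at the point (x, 0)."""
    return [[0.0, 1.0],
            [-9.0 * math.cos(x), -0.2]]

for label, x in (("x = 0", 0.0), ("x = pi", math.pi)):
    jac = pendulum_jacobian(x)
    print(label, eigenvalues_2x2(jac), classify(jac))
```

Because cos(x) has period 2π, the two sample points stand in for all critical points x=2πk and x=π+2πk respectively.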

| + | |||

| + | ====Example #11:==== | ||

| + | |||

Find the stability of all the critical points of

| + | |||

| + | <math> | ||

| + | \begin{bmatrix} x'\\ y'\end{bmatrix}=\begin{bmatrix}2x-y-x^2\\ x-2y+y^2\end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | ====Solution:==== | ||

| + | |||

| + | For critical points, we have (0,0) and (1,1). | ||

| + | Now, let's do the Jacobian. | ||

| + | |||

| + | |||

| + | <math>J(x',y')=\begin{bmatrix}2-2x & -1\\ 1 & -2+2y\end{bmatrix},</math> | ||

| + | |||

| + | <math>Case 1) :x=0,~~~y=0~~~~\longrightarrow J(x',y')=\begin{bmatrix}2 & -1\\ 1 & -2\end{bmatrix}</math> | ||

| + | |||

<math>Case 2) :x=1,~~~y=1~~~~ \longrightarrow J(x',y')=\begin{bmatrix}0 & -1\\ 1 & 0\end{bmatrix}</math>

| + | |||

In case 1, we get eigenvalues of approximately 1.732 and -1.732. So, that point is a saddle.

In case 2, we get eigenvalues of -i and i. This is important because it is an exception to the reliability of linearization seen so far. Purely imaginary eigenvalues tell us that the linearized system has a center at this critical point. However, in this case the linearization is inconclusive: the nonlinear terms can make the critical point of the actual system a center, a spiral sink, or a spiral source.

| + | |||

| + | Here's a phase diagram to show this: | ||

| + | |||

| + | [[Image:JacobianPic9.png]]. | ||

---- | ---- | ||

Sources: | Sources: | ||

| − | # | + | #http://en.wikipedia.org/wiki/Jacobian_matrix_and_determinant |

| + | |||

| + | ---- | ||

| + | |||

| + | [[Math_squad|Back to Math Squad page]] | ||

| + | |||

| + | |||

| + | <div style="font-family: Verdana, sans-serif; font-size: 14px; text-align: justify; width: 70%; margin: auto; border: 1px solid #aaa; padding: 2em;"> | ||

| + | The Spring 2013 Math Squad 2013 was supported by an anonymous [https://www.projectrhea.org/learning/donate.php gift] to [https://www.projectrhea.org/learning/about_Rhea.php Project Rhea]. If you enjoyed reading these tutorials, please help Rhea "help students learn" with a [https://www.projectrhea.org/learning/donate.php donation] to this project. Your [https://www.projectrhea.org/learning/donate.php contribution] is greatly appreciated. | ||

| + | </div> | ||

Latest revision as of 11:17, 3 March 2015

keywords: Jacobian, injective, stability, critical points, saddles, tangent vectors, differential equations.


Jacobians and their applications

by Joseph Ruan, proud Member of the Math Squad.

Basic Definition

The Jacobian Matrix is just a matrix that takes the partial derivatives of each element of a transformation. In general, the Jacobian Matrix of a transformation F, looks like this:

F1,F2, F3... are each of the elements of the output vector and x1,x2, x3 ... are each of the elements of the input vector.


So for example, in a 2 dimensional case, let T be a transformation such that T(u,v)=<x,y> then the Jacobian matrix of this function would look like this:

$ J(u,v)=\begin{bmatrix} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix} $

This Jacobian matrix notably holds all of the partial derivatives of the transformation with respect to each of the variables. Each row therefore records how one particular output element changes with respect to each of the input elements, so the matrix as a whole describes how a change in any input element affects the output elements.

To help illustrate making Jacobian matrices, let's do some examples:

Example #1:

What would be the Jacobian Matrix of this Transformation?

$ T(u,v) = <u\times \cos v, u\times \sin v> $ .

Solution:

$ x=u \times \cos v \longrightarrow \frac{\partial x}{\partial u}= \cos v , \; \frac{\partial x}{\partial v} = -u\times \sin v $

$ y=u\times\sin v \longrightarrow \frac{\partial y}{\partial u}= \sin v , \; \frac{\partial y}{\partial v} = u\times \cos v $

$ J(u,v)=\begin{bmatrix} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}= \begin{bmatrix} \cos v & -u\times \sin v \\ \sin v & u\times \cos v \end{bmatrix} $

Example #2:

What would be the Jacobian Matrix of this Transformation?

$ T(u,v) = <u, v, u^v>,u>0 $ .

Solution:

Notice that this matrix will not be square because the input and output have different dimensions (two inputs, three outputs), so the transformation cannot serve as an invertible change of coordinates.

$ x=u \longrightarrow \frac{\partial x}{\partial u}= 1 , \; \frac{\partial x}{\partial v} = 0 $

$ y=v \longrightarrow \frac{\partial y}{\partial u}=0 , \; \frac{\partial y}{\partial v} = 1 $

$ z=u^v \longrightarrow \frac{\partial z}{\partial u}= u^{v-1}\times v, \; \frac{\partial z}{\partial v} = u^v\times \ln(u) $

$ J(u,v)=\begin{bmatrix} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \\ \frac{\partial z}{\partial u} & \frac{\partial z}{\partial v} \end{bmatrix}= \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ u^{v-1}\times v & u^v\times ln(u)\end{bmatrix} $

Example #3:

What would be the Jacobian Matrix of this Transformation?

$ T(u,v) = <\tan (uv)> $ .

Solution:

Notice that this matrix will not be square because the input and output have different dimensions (two inputs, one output); in particular, the transformation is not injective.

$ x=\tan(uv) \longrightarrow \frac{\partial x}{\partial u}= \sec^2(uv)\times v, \; \frac{\partial x}{\partial v} = sec^2(uv)\times u $

$ J(u,v)= \begin{bmatrix}\frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \end{bmatrix}=\begin{bmatrix}\sec^2(uv)\times v & sec^2(uv)\times u \end{bmatrix} $

Application #1: Jacobian Determinants

The determinant of Example #1 gives:

$ \left|\begin{matrix} \cos v & -u \times \sin v \\ \sin v & u \times \cos v \end{matrix}\right|=~~ u \cos^2 v + u \sin^2 v =~~ u $

Notice that, when an integral is changed from Cartesian coordinates (dxdy) to polar coordinates $ (drd\theta) $, the area elements are related by:

$ dxdy=r\times drd\theta=u\times dudv $

It is natural to generalize, then, that the change from one set of coordinates to another set is

$ dC_2=\left|\det(J(T))\right| dC_1 $

where C1 is the first set of coordinates, C2 is the second set of coordinates, T is the transformation from C1 to C2, and det(J(T)) is the determinant of the Jacobian matrix of T.

It is important to notice several aspects: first, the determinant is assumed to exist and be non-zero, and therefore the Jacobian matrix must be square and invertible. This makes sense because when changing coordinates, it should be possible to change back.

Moreover, recall from linear algebra that, in a two dimensional case, the determinant of a matrix of two vectors describes the area of the parallelogram drawn by it, or more accurately, it describes the scale factor between the unit square and the area of the parallelogram. If we extend the analogy, the determinant of the Jacobian would describe some sort of scale factor change from one set of coordinates to the other. Here is a picture that should help:

This is the general idea behind change of variables. It is easy to see that the Jacobian method matches up with one-dimensional change of variables (taking u > 0 so that the absolute value can be dropped):

$ T(u)=u^2=x,~~~~~~~~ J(u)=\begin{bmatrix}\frac{\partial x}{\partial u}\end{bmatrix}=\begin{bmatrix}2u\end{bmatrix},~~~~~~~ dx=\left|J(u)\right|\times du=2u\times du $

Be VERY careful when substituting. It should always be the case that

$ \left|J(\vec u)\right| \times d\vec x = d\vec u,~~~~ \left|J(\vec x)\right| \times d\vec u = d\vec x $,

where J(u) is the Jacobian of the u-variables taken with respect to the x-variables, and J(x) is the Jacobian of the x-variables taken with respect to the u-variables.

Let's do some examples to show what I mean.

Example #4:

Compute the following expression:

$ \iint(x^2+y^2)dA $

where the region of integration is bounded by $ y^2-x^2=9 $, $ y^2-x^2=16 $, $ 2xy=14 $, $ 2xy=6 $.

Solution:

First, let's graph the region.

$ Let ~~~~ u=2xy, ~~~~ v=y^2-x^2 $.

Why do we do this? Let's look at the bounds: they run from $ y^2-x^2=9 $ to $ y^2-x^2=16 $ and from $ 2xy=6 $ to $ 2xy=14 $. It'd be quite a simple task if the integral looked something like this:

$ \int^{16}_9 \int^{14}_6 k(u,v)\mathrm{d}u\mathrm{d}v $

However, notice that in this case, instead of substituting x= and y=, we're substituting with u= and v=. Therefore the Jacobian will be written as J(u,v) and the expression will be dudv=|J(u,v)|dxdy.

$ u=2xy\longrightarrow \frac{\partial u}{\partial x}= 2y , \; \frac{\partial u}{\partial y} = 2x $

$ v=y^2-x^2\longrightarrow \frac{\partial v}{\partial x}= -2x , \; \frac{\partial v}{\partial y} =2y $

$ J(u,v)=\begin{bmatrix} \frac{\partial u}{\partial x} & \frac{\partial u}{\partial y} \\ \frac{\partial v}{\partial x} & \frac{\partial v}{\partial y} \end{bmatrix}= \begin{bmatrix} 2y & 2x \\ -2x & 2y\end{bmatrix} $

$ dudv= \left|J(u,v)\right|\times dxdy \longrightarrow \left|\begin{matrix} 2y & 2x \\ -2x & 2y\end{matrix}\right|\times dxdy=(4y^2+4x^2)dxdy $

The new region looks like this:

Therefore,

$ \iint(x^2+y^2)dA=\int^{16}_9 \int^{14}_6 (x^2+y^2)/(4y^2+4x^2)\mathrm{d}u\mathrm{d}v=\int^{16}_9 \int^{14}_6 1/4\mathrm{d}u\mathrm{d}v=(16-9)\times (14-6)\times 1/4=14. $

Example #5:

Compute the following expression:

$ \iint(1/\sqrt{4x^2+9y^2})dA $

where the region of integration is bounded by $ (2x)^2+(3y)^2=36 $.

Solution:

Again, let's graph the region.

There are many ways to approach this, but to start, let's choose the change of variables to be x=u/2, y=v/3. Why?

$ (2x)^2+(3y)^2=36\longrightarrow u^2+v^2=36 $

The region now looks like this:

We've turned the region from an ellipse to a circle!

However, notice that in this case, instead of substituting u= and v=, we're substituting with x= and y=. Therefore the Jacobian will be written as J(x,y) and the expression will be dxdy=|J(x,y)|dudv.

$ x=u/2\longrightarrow \frac{\partial x}{\partial u}= 1/2 , \; \frac{\partial x}{\partial v} = 0 $

$ y=v/3\longrightarrow \frac{\partial y}{\partial u}=0 , \; \frac{\partial y}{\partial v} =1/3 $

$ J(x,y)=\begin{bmatrix} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}= \begin{bmatrix} 1/2 & 0 \\ 0 & 1/3\end{bmatrix} $

$ dxdy=\left|J(x,y)\right| \times dudv =\left|\begin{matrix} 1/2 & 0 \\ 0 & 1/3\end{matrix}\right|\times dudv=(1/6)dudv $

$ \iint(1/\sqrt{4x^2+9y^2})dA =\iint 1/6\times 1/\sqrt{u^2+v^2}dudv $

Now, let's change the coordinates to polar:

$ r\sin \theta = u,~~~~~ r\cos\theta = v,~~~~~ \iint 1/6\times 1/\sqrt{u^2+v^2}dA=1/6\times\iint 1/\sqrt{r^2}\times r\times drd\theta=1/6\times \int^{2\pi}_0 \int^6_0 dr d\theta=1/6\times 6\times 2\pi=2\pi $
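A result like this can be cross-checked numerically without any change of variables. The sketch below is a rough midpoint-rule estimate over the original ellipse in the xy-plane; the function names and grid sizes are assumptions made for illustration, and the estimate is only accurate to a couple of decimal places because the integrand blows up at the origin.

```python
import math


def integrand(x, y):
    """The original integrand 1 / sqrt(4x^2 + 9y^2)."""
    return 1.0 / math.sqrt(4 * x * x + 9 * y * y)


def ellipse_integral(nx=600, ny=400):
    """Midpoint-rule estimate over the ellipse (2x)^2 + (3y)^2 <= 36."""
    hx, hy = 6.0 / nx, 4.0 / ny  # bounding box is [-3, 3] x [-2, 2]
    total = 0.0
    for i in range(nx):
        x = -3.0 + (i + 0.5) * hx
        for j in range(ny):
            y = -2.0 + (j + 0.5) * hy
            # Even grid sizes keep the midpoints away from the origin.
            if 4 * x * x + 9 * y * y <= 36.0:
                total += integrand(x, y)
    return total * hx * hy


estimate = ellipse_integral()
print(estimate, estimate / math.pi)  # value, and value as a multiple of pi
```

The second printed number gives the estimate as a multiple of pi, which makes it easy to compare with the hand computation.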

Example #6:

Compute the following expression:

$ \iint(\sqrt{(x-y)(x+6y)})dA $

where the region of integration is the parallelogram with vertices (0,0), (1,1), (7,0), (6,-1).

Solution:

So far, we've only discussed cases where a change of variables is used to make regions easier to integrate over. For this example, a change of variables will be used to make the integrand easier.

First, let's graph the region.

As previously stated, it would be relatively simple to set up the double integral over this region. The integrand is the real problem, so to make things simpler, we'll set

$ u=x-y\longrightarrow \frac{\partial u}{\partial x}= 1 , \; \frac{\partial u}{\partial y} = -1 $

$ v=x+6y\longrightarrow \frac{\partial v}{\partial x}=1 , \; \frac{\partial v}{\partial y} =6 $

Notice that in this case, we're back to substituting from the u= and v= side.

$ J(u,v)=\begin{bmatrix} \frac{\partial u}{\partial x} & \frac{\partial u}{\partial y} \\ \frac{\partial v}{\partial x} & \frac{\partial v}{\partial y} \end{bmatrix}= \begin{bmatrix} 1 & -1 \\ 1 & 6\end{bmatrix} $

$ dudv=\left|J(u,v)\right| \times dxdy =\left|\begin{matrix} 1 & -1 \\ 1 & 6\end{matrix}\right|\times dxdy=(7)dxdy $

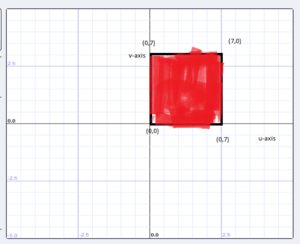

Let's look at the transformed region. It just so happens that it made a 7 x 7 square!

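Since dudv=7dxdy, we have dxdy=(1/7)dudv, so

$ \iint(\sqrt{(x-y)(x+6y)})dA=\frac{1}{7}\int^7_0 \int^7_0 \sqrt{uv}\,dudv=\frac{1}{7}\times\left(\frac{2}{3}\times 7^{3/2}\right)^2=\frac{196}{9} $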
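To see the change of variables at work, here is a minimal Python sketch that walks over the 7 x 7 square in the uv-plane, maps each sample point back to (x, y), evaluates the original integrand there, and scales by the Jacobian factor. The inverse formulas x = (6u + v)/7 and y = (v - u)/7 are obtained by solving u = x - y, v = x + 6y; the function names and grid size are illustrative assumptions.

```python
import math


def integrand(x, y):
    """The original integrand sqrt((x - y)(x + 6y))."""
    # max(..., 0.0) guards against tiny negative products from rounding.
    return math.sqrt(max((x - y) * (x + 6 * y), 0.0))


def parallelogram_integral(n=200):
    """Midpoint rule on the square 0 <= u, v <= 7, mapped back to (x, y)."""
    h = 7.0 / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h
        for j in range(n):
            v = (j + 0.5) * h
            # Inverse of u = x - y, v = x + 6y.
            x = (6 * u + v) / 7.0
            y = (v - u) / 7.0
            total += integrand(x, y)
    # dx dy = (1/7) du dv because |J(u, v)| = 7.
    return total * h * h / 7.0


estimate = parallelogram_integral()
print(estimate)
```

The printed value is a numerical estimate of the integral that can be compared against the hand computation.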

Application #2: Transforming Tangent vectors

The second major application of the Jacobian matrix comes from the fact that it is made of the partial derivatives of the transformation with respect to each original element. Therefore, when a curve F is transformed by a transformation T, the tangent vector of F at a point is likewise transformed by the Jacobian matrix evaluated at that point.

Here is a picture to illustrate this. Note: this picture was taken from etsu.edu.

In other words, if we transform a function, we can find the new tangent vector at a transformed point.

Example #7:

$ \langle u,v\rangle=\langle t^2,t^4\rangle,~~~T(u,v)=\langle u^3-v^3,3uv\rangle=\langle x,y\rangle $

Find the velocity vector of T(u,v) at t=1.

Solution:

$ x=u^3-v^3\longrightarrow \frac{\partial x}{\partial u}= 3u^2 , \; \frac{\partial x}{\partial v} = -3v^2 $

$ y=3uv\longrightarrow \frac{\partial y}{\partial u}=3v , \; \frac{\partial y}{\partial v} =3u $

$ J(x,y)=\begin{bmatrix} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}= \begin{bmatrix} 3u^2 & -3v^2 \\ 3v & 3u\end{bmatrix} $

$ u'(t)=\begin{bmatrix}2t\\4t^3\end{bmatrix} $

$ J(x,y)\times u'(t)=\begin{bmatrix}3u^2 & -3v^2 \\3v & 3u\end{bmatrix} \times \begin{bmatrix}2t\\4t^3\end{bmatrix}= \begin{bmatrix}3u^2\times 2t-3v^2\times 4t^3\\3v\times 2t+3u\times 4t^3\end{bmatrix}= \begin{bmatrix}3(t^2)^2\times 2t-3(t^4)^2\times 4t^3\\3(t^4)\times 2t+3(t^2)\times 4t^3\end{bmatrix}= \begin{bmatrix}6t^5-12t^{11}\\18t^5\end{bmatrix} $

Therefore at t=1, the transformed tangent vector is:

$ \begin{bmatrix}-6\\18\end{bmatrix} $
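The same answer can be checked in two independent ways: by multiplying the Jacobian with the tangent vector, as done above, and by differentiating the composed curve T(u(t), v(t)) numerically. The Python sketch below does both; the function names and the step size `h` are assumptions chosen for illustration.

```python
def curve(t):
    """The path (u, v) = (t^2, t^4)."""
    return t ** 2, t ** 4


def transform(u, v):
    """T(u, v) = (u^3 - v^3, 3uv) = (x, y)."""
    return u ** 3 - v ** 3, 3 * u * v


def velocity_by_chain_rule(t):
    """Multiply the Jacobian of T by the tangent vector (u'(t), v'(t))."""
    u, v = curve(t)
    du, dv = 2 * t, 4 * t ** 3
    dx = 3 * u ** 2 * du - 3 * v ** 2 * dv
    dy = 3 * v * du + 3 * u * dv
    return dx, dy


def velocity_by_difference(t, h=1e-5):
    """Differentiate the composed curve T(u(t), v(t)) with central differences."""
    x1, y1 = transform(*curve(t + h))
    x0, y0 = transform(*curve(t - h))
    return (x1 - x0) / (2 * h), (y1 - y0) / (2 * h)


print(velocity_by_chain_rule(1.0))   # (-6.0, 18.0)
print(velocity_by_difference(1.0))   # approximately the same vector
```

Agreement between the two printed vectors is a useful guard against sign or ordering mistakes in the Jacobian.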

Application #3: Linearization of Systems of Differential Equations

There is one last major application of Jacobians: approximating systems of differential equations. Let's take the general expression:

$ \vec x'=f(\vec x,t) $

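Near a critical point $\vec x_0$ (a point where f is zero), if f(x,t) is differentiable, the system can be approximated by a linear system in the displacement from that point:

$ (\vec x-\vec x_0)' \approx A(\vec x-\vec x_0) ,~~~~A=J(\vec x_0 ,t_0) $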

The reason this works is similar to the idea behind one dimensional differentials:

$ dy\approx f'(x)\times dx $
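To spell out that step, here is a sketch of the first-order Taylor expansion behind the approximation, written for a critical point $\vec x_0$ where f vanishes (the symbols $\vec x_0$ and $\vec y$ are introduced here for illustration):

$ f(\vec x,t_0) \approx f(\vec x_0,t_0) + J(\vec x_0,t_0)(\vec x-\vec x_0) = J(\vec x_0,t_0)(\vec x-\vec x_0) $

$ \vec y=\vec x-\vec x_0 \longrightarrow \vec y'=\vec x' \approx J(\vec x_0,t_0)\,\vec y $

So the matrix of the linear system is the Jacobian evaluated at the critical point, and the linear system describes the displacement from that point rather than the position itself.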

Why is this important? The eigenvalues of the linearized system tell us whether nearby solutions move toward or away from a critical point, which lets us check stability for many systems of differential equations. The same linearization can be used when the equations contain explicit terms in t, as long as the Jacobian is evaluated at a fixed time and the approximation is only trusted over a short time interval around it.

Example #8:

Find the stability of all the critical points of

$ \begin{bmatrix} x'\\ y'\\ z'\end{bmatrix}=\begin{bmatrix}z-x\\ 3/(2+x)-3y\\3y-z\end{bmatrix} $

Solution:

To find the critical points, we set x'=y'=z'=0.

$ z=x,~~~~ y=x/3,~~~~ 3/(2+x)-x=0 \longrightarrow (x+3)(x-1)=0,~~~~ (1,1/3,1),~~~~ (-3,-1,-3) $

Now let's find the Jacobian at (1,1/3,1)

$ J(x',y',z')=\begin{bmatrix}-1 & 0 & 1\\ -3/(2+x)^2 & -3 & 0\\ 0 & 3 & -1 \end{bmatrix}=\begin{bmatrix}-1 & 0 & 1\\ -1/3 & -3 & 0\\ 0 & 3 & -1 \end{bmatrix}. $

The eigenvalues are -3.2055694304005904, -0.8972152847997048 ± 0.6654569511528134i. Since the real parts are all negative, this critical point is locally stable.

Now let's find the Jacobian at (-3,-1,-3)

$ J(x',y',z')=\begin{bmatrix}-1 & 0 & 1\\ -3/(2+x)^2 & -3 & 0\\ 0 & 3 & -1 \end{bmatrix}=\begin{bmatrix}-1 & 0 & 1\\ -3 & -3 & 0\\ 0 & 3 & -1 \end{bmatrix}. $

The eigenvalues are -4 and -0.5 ± 1.65831i. Because all of the real parts are negative, this critical point is locally stable.
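Eigenvalues of a 3 x 3 matrix are tedious to get by hand, so a small script is a reasonable cross-check. The sketch below uses only the Python standard library: it builds the characteristic polynomial from the trace, the principal 2 x 2 minors, and the determinant, finds one real root by bisection, and solves the remaining quadratic. All function names are illustrative assumptions; a linear algebra library would normally do this in one call.

```python
import cmath


def char_poly(a):
    """Coefficients (c2, c1, c0) of det(l*I - a) = l^3 + c2*l^2 + c1*l + c0."""
    trace = a[0][0] + a[1][1] + a[2][2]
    minors = (a[0][0] * a[1][1] - a[0][1] * a[1][0]
              + a[0][0] * a[2][2] - a[0][2] * a[2][0]
              + a[1][1] * a[2][2] - a[1][2] * a[2][1])
    det = (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
           - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
           + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    return -trace, minors, -det


def eigenvalues_3x3(a):
    """Bisect for one real root of the cubic, then solve the leftover quadratic."""
    c2, c1, c0 = char_poly(a)

    def p(x):
        return ((x + c2) * x + c1) * x + c0

    bound = 1.0 + max(abs(c2), abs(c1), abs(c0))  # Cauchy bound on all roots
    lo, hi = -bound, bound  # p(lo) < 0 < p(hi) for a monic cubic
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if p(mid) > 0:
            hi = mid
        else:
            lo = mid
    r = 0.5 * (lo + hi)
    b = c2 + r      # deflated quadratic: l^2 + b*l + c
    c = c1 + r * b
    root = cmath.sqrt(b * b - 4 * c)
    return [complex(r), (-b + root) / 2, (-b - root) / 2]


def jacobian(x):
    """Jacobian of (z - x, 3/(2 + x) - 3y, 3y - z); it depends only on x."""
    return [[-1.0, 0.0, 1.0],
            [-3.0 / (2.0 + x) ** 2, -3.0, 0.0],
            [0.0, 3.0, -1.0]]


for x in (1.0, -3.0):
    eig = eigenvalues_3x3(jacobian(x))
    print(x, eig, all(e.real < 0 for e in eig))
```

For each critical point the script prints the x-coordinate, the three eigenvalues, and a flag that is `True` when every real part is negative.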

Example #9:

Find the stability of all the critical points of

$ \begin{bmatrix} x'\\ y'\\ z'\end{bmatrix}=\begin{bmatrix}z+2y^2+x\\ y-z^{3/2}\\ \sin(z) \end{bmatrix} $

Solution:

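One critical point is (0,0,0), and it is the one we analyze here. (Since sin(z)=0 also holds for z=π, 2π, ..., there are further critical points with $y=z^{3/2}$ and $x=-z-2y^2$, which can be treated the same way.)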

Now, let's do the Jacobian.

$ J(x',y',z')=\begin{bmatrix}1 & 4y & 1\\ 0 & 1 & -\frac{3}{2}z^{1/2}\\ 0 & 0 & \cos(z) \end{bmatrix}=\begin{bmatrix}1 & 0 & 1\\ 0 & 1 & 0\\ 0 & 0 & 1 \end{bmatrix}. $

The matrix is upper triangular, so its only eigenvalue is 1 (repeated three times). Since this is positive, the critical point is unstable.

Example #10:

Find the stability of all the critical points of

$ \begin{bmatrix} x'\\ y'\end{bmatrix}=\begin{bmatrix}y\\ -9\sin(x)-y/5\end{bmatrix} $

Solution:

For critical points, we get that

$ y=0,~~~~ x= \pi \times k, ~~~~~ k \in \mathbb{Z} $

Now, let's do the Jacobian.

$ J(x',y')=\begin{bmatrix}0 & 1\\ -9\cos(x) & -1/5\end{bmatrix}, $

$ \text{Case 1: } x=2\pi k\longrightarrow J(x',y')=\begin{bmatrix}0 & 1\\ -9 & -1/5\end{bmatrix} $

$ \text{Case 2: } x=\pi+2\pi k \longrightarrow J(x',y')=\begin{bmatrix}0 & 1\\ 9 & -1/5\end{bmatrix} $

For Case 1, the eigenvalues are approximately -0.1-2.99833i and -0.1+2.99833i. Since they are complex with negative real part, those critical points are spiral sinks.

For Case 2, the eigenvalues are approximately -3.10167 and 2.90167. Since they are real with opposite signs, those critical points are saddles.
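For a 2-d system the whole procedure (Jacobian, eigenvalues, classification) fits in a few lines. The following Python sketch builds the Jacobian directly from the right-hand side of the system with central differences, so it does not rely on any hand-computed matrix, and then classifies one representative point from each case, x = 0 and x = π. The function names, tolerance, and labels are assumptions made for illustration.

```python
import cmath
import math


def field(x, y):
    """Right-hand side of the system: x' = y, y' = -9 sin(x) - y/5."""
    return y, -9.0 * math.sin(x) - y / 5.0


def jacobian_2x2(f, x, y, h=1e-6):
    """Central-difference Jacobian entries (a, b, c, d) of f at (x, y)."""
    fxp, fxm = f(x + h, y), f(x - h, y)
    fyp, fym = f(x, y + h), f(x, y - h)
    a = (fxp[0] - fxm[0]) / (2 * h)
    b = (fyp[0] - fym[0]) / (2 * h)
    c = (fxp[1] - fxm[1]) / (2 * h)
    d = (fyp[1] - fym[1]) / (2 * h)
    return a, b, c, d


def eigenvalues_2x2(a, b, c, d):
    """Roots of l^2 - (a + d)*l + (a*d - b*c) = 0."""
    trace, det = a + d, a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2


def classify(l1, l2, tol=1e-6):
    """Rough label based on the real and imaginary parts of the eigenvalues."""
    if abs(l1.imag) > tol:
        if abs(l1.real) <= tol:
            return "center (linearization inconclusive)"
        return "spiral sink" if l1.real < 0 else "spiral source"
    if l1.real * l2.real < 0:
        return "saddle"
    return "sink" if max(l1.real, l2.real) < 0 else "source"


for x0 in (0.0, math.pi):
    l1, l2 = eigenvalues_2x2(*jacobian_2x2(field, x0, 0.0))
    print(x0, l1, l2, classify(l1, l2))
```

Because the Jacobian only depends on cos(x), the results at x = 0 and x = π carry over to every critical point in the corresponding case.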

Since this system is 2-d, it is possible to give a phase portrait and show you that this linearized system is accurate.

Example #11:

Find the stability of all the critical points of

$ \begin{bmatrix} x'\\ y'\end{bmatrix}=\begin{bmatrix}2x-y-x^2\\ x-2y+y^2\end{bmatrix} $

Solution:

For critical points, we have (0,0) and (1,1). Now, let's do the Jacobian.

$ J(x',y')=\begin{bmatrix}2-2x & -1\\ 1 & -2+2y\end{bmatrix}, $

$ \text{Case 1: } x=0,~~~y=0~~~~\longrightarrow J(x',y')=\begin{bmatrix}2 & -1\\ 1 & -2\end{bmatrix} $

$ \text{Case 2: } x=1,~~~y=1~~~~ \longrightarrow J(x',y')=\begin{bmatrix}0 & -1\\ 1 & 0\end{bmatrix} $

In case 1, we get eigenvalues of 1.732 and -1.732. So, that point is a saddle.

In case 2, we get eigenvalues of -i and i. This is important because it is an exception to the reliability of the linearization seen so far. Eigenvalues of -i and i tell us that the critical point of the linearized system is a center. However, when the real parts are exactly zero, the linearization is not decisive: the nonlinear terms can turn the point into a spiral sink or a spiral source, so the actual system may not have this critical point as a center.
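For a 2 x 2 matrix the eigenvalues follow from the trace and determinant, so both cases can be checked by hand. A short worked version, substituting each point into the general Jacobian and using the standard characteristic equation $\lambda^2-\mathrm{tr}(J)\lambda+\det(J)=0$ (the symbols tr and det are notation added here):

$ (0,0):~~~ \mathrm{tr}(J)=2+(-2)=0,~~~ \det(J)=(2)(-2)-(-1)(1)=-3 \longrightarrow \lambda^2-3=0 \longrightarrow \lambda=\pm\sqrt{3}\approx\pm 1.732 $

$ (1,1):~~~ \mathrm{tr}(J)=0+0=0,~~~ \det(J)=(0)(0)-(-1)(1)=1 \longrightarrow \lambda^2+1=0 \longrightarrow \lambda=\pm i $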

Here's a phase diagram to show this:


The Spring 2013 Math Squad was supported by an anonymous gift to Project Rhea. If you enjoyed reading these tutorials, please help Rhea "help students learn" with a donation to this project. Your contribution is greatly appreciated.