
Latest revision as of 04:27, 15 November 2010


Example. Two jointly distributed random variables

Two jointly distributed random variables $ \mathbf{X} $ and $ \mathbf{Y} $ have joint pdf

$ f_{\mathbf{XY}}\left(x,y\right)=\begin{cases} \begin{array}{ll} c & ,\textrm{ for }x\geq0,y\geq0,\textrm{ and }x+y\leq1\\ 0 & ,\textrm{ elsewhere.} \end{array}\end{cases} $

(a)

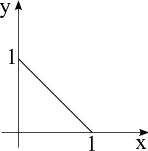

Find the constant $ c $ such that $ f_{\mathbf{XY}}(x,y) $ is a valid pdf.

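$ \iint_{\mathbf{R}^{2}}f_{\mathbf{XY}}\left(x,y\right)dx\,dy=c\cdot\textrm{Area}=1 $, where $ \textrm{Area}=\frac{1}{2} $ is the area of the triangle with vertices $ (0,0) $, $ (1,0) $, and $ (0,1) $ on which the pdf is nonzero.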

$ \therefore c=2 $
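As an optional sanity check (not part of the original solution), the area of the support, and hence $ c $, can be estimated by Monte Carlo. This is a minimal sketch that samples the unit square containing the triangle; the function name `triangle_area_mc`, the sample size, and the seed are arbitrary illustrative choices.

```python
import random

def triangle_area_mc(n=200_000, seed=0):
    """Estimate the area of {x >= 0, y >= 0, x + y <= 1} by sampling the unit square."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x, y = rng.random(), rng.random()
        if x + y <= 1.0:
            hits += 1
    # The unit square has area 1, so the fraction of hits estimates the triangle's area.
    return hits / n

area = triangle_area_mc()
c_estimate = 1.0 / area  # from c * Area = 1
print(f"Area ~ {area:.4f}, c ~ {c_estimate:.4f}")
```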

(b)

Find the conditional density of $ \mathbf{Y} $ conditioned on $ \mathbf{X}=x $.

$ f_{\mathbf{Y}}\left(y|\left\{ \mathbf{X}=x\right\} \right)=\frac{f_{\mathbf{XY}}\left(x,y\right)}{f_{\mathbf{X}}(x)}. $

$ f_{\mathbf{X}}(x)=\int_{-\infty}^{\infty}f_{\mathbf{XY}}\left(x,y\right)dy=\int_{0}^{1-x}2dy=2\left(1-x\right)\cdot\mathbf{1}_{\left[0,1\right]}(x). $

$ f_{\mathbf{Y}}\left(y|\left\{ \mathbf{X}=x\right\} \right)=\frac{f_{\mathbf{XY}}\left(x,y\right)}{f_{\mathbf{X}}(x)}=\frac{2\cdot\mathbf{1}_{\left[0,1-x\right]}\left(y\right)}{2\left(1-x\right)}=\frac{1}{1-x}\cdot\mathbf{1}_{\left[0,1-x\right]}\left(y\right),\quad0\leq x<1. $

That is, given $ \mathbf{X}=x $, $ \mathbf{Y} $ is uniformly distributed on $ \left[0,1-x\right] $.

(c)

Find the minimum mean-square error estimator $ \hat{y}_{MMS}\left(x\right) $ of $ \mathbf{Y} $ given that $ \mathbf{X}=x $.

$ \hat{y}_{MMS}\left(x\right)=E\left[\mathbf{Y}|\left\{ \mathbf{X}=x\right\} \right]=\int_{\mathbf{R}}yf_{\mathbf{Y}}\left(y|\left\{ \mathbf{X}=x\right\} \right)dy=\int_{0}^{1-x}\frac{y}{1-x}dy=\frac{y^{2}}{2\left(1-x\right)}\biggl|_{0}^{1-x}=\frac{1-x}{2}. $
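The MMSE result can be checked numerically. The sketch below is an illustration, not the method used above: it draws $ (\mathbf{X},\mathbf{Y}) $ uniformly from the triangle by rejection sampling and averages $ \mathbf{Y} $ over samples whose $ \mathbf{X} $ falls in a narrow window around $ x $. The helper names, window half-width, sample size, and seed are assumptions chosen for illustration.

```python
import random

def sample_triangle(rng):
    """Draw (x, y) uniformly from the triangle x >= 0, y >= 0, x + y <= 1 by rejection."""
    while True:
        x, y = rng.random(), rng.random()
        if x + y <= 1.0:
            return x, y

def conditional_mean_mc(x0, half_width=0.01, n=100_000, seed=1):
    """Average of Y over samples with |X - x0| < half_width (approximates E[Y | X = x0])."""
    rng = random.Random(seed)
    total, count = 0.0, 0
    for _ in range(n):
        x, y = sample_triangle(rng)
        if abs(x - x0) < half_width:
            total += y
            count += 1
    return total / count

estimates = {x0: conditional_mean_mc(x0) for x0 in (0.2, 0.5, 0.8)}
for x0, est in estimates.items():
    print(f"x = {x0}: Monte Carlo {est:.3f}, formula (1 - x)/2 = {(1 - x0) / 2:.3f}")
```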

(d)

Find a maximum a posteriori (MAP) estimator $ \hat{y}_{MAP}\left(x\right) $ of $ \mathbf{Y} $ given that $ \mathbf{X}=x $.

$ \hat{y}_{MAP}\left(x\right)=\arg\max_{y}\left\{ f_{\mathbf{Y}}\left(y|\left\{ \mathbf{X}=x\right\} \right)\right\}. $

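From part (b), $ f_{\mathbf{Y}}\left(y|\left\{ \mathbf{X}=x\right\} \right)=\frac{1}{1-x}\cdot\mathbf{1}_{\left[0,1-x\right]}\left(y\right) $ is constant on $ \left[0,1-x\right] $, so every point of that interval attains the maximum. Any $ \hat{y}\in\left[0,1-x\right] $ is therefore a MAP estimator, and the MAP estimator is NOT unique.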
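Because any point of $ \left[0,1-x\right] $ is a valid MAP estimate, the choice among them matters if one also cares about mean-square error. A small sketch, assuming only the uniform conditional density from part (b), evaluates $ E\left[(\mathbf{Y}-a)^{2}|\left\{ \mathbf{X}=x\right\} \right]=\frac{(1-x)^{2}}{12}+\left(\frac{1-x}{2}-a\right)^{2} $ for a few MAP choices; the midpoint coincides with the MMSE estimate from part (c). The function name `conditional_mse` and the value $ x=0.4 $ are hypothetical.

```python
def conditional_mse(a, x):
    """E[(Y - a)^2 | X = x] when Y given X = x is uniform on [0, 1 - x], 0 <= x < 1."""
    length = 1.0 - x
    variance = length ** 2 / 12.0   # variance of a uniform on an interval of this length
    bias = length / 2.0 - a         # conditional mean minus the estimate
    return variance + bias ** 2

x = 0.4
candidates = {
    "endpoint y_hat = 0": 0.0,
    "midpoint y_hat = (1 - x)/2": (1.0 - x) / 2.0,
    "endpoint y_hat = 1 - x": 1.0 - x,
}
for label, a in candidates.items():
    print(f"{label:28s} conditional MSE = {conditional_mse(a, x):.4f}")
```

All three are MAP estimates, but only the midpoint also minimizes the conditional mean-square error.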

Example. Two jointly distributed independent random variables

Let $ \mathbf{X} $ and $ \mathbf{Y} $ be two jointly distributed, independent random variables. The pdf of $ \mathbf{X} $ is

$ f_{\mathbf{X}}\left(x\right)=xe^{-x^{2}/2}\cdot\mathbf{1}_{\left[0,\infty\right)}\left(x\right), $

and $ \mathbf{Y} $ is a Gaussian random variable with mean 0 and variance 1. Let $ \mathbf{U} $ and $ \mathbf{V} $ be two new random variables defined as $ \mathbf{U}=\sqrt{\mathbf{X}^{2}+\mathbf{Y}^{2}} $ and $ \mathbf{V}=\lambda\mathbf{Y}/\mathbf{X} $ where $ \lambda $ is a positive real number.

(a)

Find the joint pdf of $ \mathbf{U} $ and $ \mathbf{V} $. (Direct pdf method)

$ f_{\mathbf{UV}}\left(u,v\right)=f_{\mathbf{XY}}\left(x\left(u,v\right),y\left(u,v\right)\right)\left|\frac{\partial\left(x,y\right)}{\partial\left(u,v\right)}\right| $

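Solving for $ x $ and $ y $ in terms of $ u $ and $ v $: we have $ u^{2}=x^{2}+y^{2} $ and $ v^{2}=\frac{\lambda^{2}y^{2}}{x^{2}}\Longrightarrow y^{2}=\frac{v^{2}x^{2}}{\lambda^{2}} $. Since $ \mathbf{X}\geq0 $ (and $ \mathbf{X}>0 $ with probability one), we take the nonnegative root for $ x $, and $ y=vx/\lambda $ then has the same sign as $ v $. Hence the transformation is one-to-one, and the direct pdf formula has a single term.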

Now, $ u^{2}=x^{2}+y^{2}=x^{2}+\frac{v^{2}x^{2}}{\lambda^{2}}=x^{2}\left(1+v^{2}/\lambda^{2}\right)\Longrightarrow x=\frac{u}{\sqrt{1+v^{2}/\lambda^{2}}}\Longrightarrow x\left(u,v\right)=\frac{u}{\sqrt{1+v^{2}/\lambda^{2}}}. $

Thus, $ y=\frac{vx}{\lambda}=\frac{vu}{\lambda\sqrt{1+v^{2}/\lambda^{2}}}\Longrightarrow y\left(u,v\right)=\frac{vu}{\lambda\sqrt{1+v^{2}/\lambda^{2}}}. $
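A quick numerical round trip can confirm the inverse map. This sketch (with hypothetical helper names `forward` and `inverse` and an arbitrary $ \lambda=1.5 $) maps a few points with $ x>0 $ to $ (u,v) $ and back using the formulas for $ x(u,v) $ and $ y(u,v) $ derived above.

```python
import math

def forward(x, y, lam):
    """(x, y) -> (u, v) with u = sqrt(x^2 + y^2), v = lam * y / x; requires x > 0."""
    return math.hypot(x, y), lam * y / x

def inverse(u, v, lam):
    """(u, v) -> (x, y) using x = u / sqrt(1 + v^2/lam^2) and y = v x / lam."""
    s = math.sqrt(1.0 + v ** 2 / lam ** 2)
    x = u / s
    return x, v * x / lam

lam = 1.5
points = [(0.3, -1.2), (2.0, 0.7), (1.1, 0.0)]
round_trips = [inverse(*forward(x, y, lam), lam) for x, y in points]
for p, q in zip(points, round_trips):
    print(p, "->", q)
```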

Computing the Jacobian:

$ \frac{\partial\left(x,y\right)}{\partial\left(u,v\right)}=\left|\begin{array}{ll} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v}\\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{array}\right|=\left|\begin{array}{cc} \frac{1}{\sqrt{1+v^{2}/\lambda^{2}}} & \frac{-uv}{\lambda^{2}\left(1+v^{2}/\lambda^{2}\right)^{\frac{3}{2}}}\\ \frac{v}{\lambda\sqrt{1+v^{2}/\lambda^{2}}} & \frac{u}{\lambda\sqrt{1+v^{2}/\lambda^{2}}}-\frac{uv^{2}}{\lambda^{3}\left(1+v^{2}/\lambda^{2}\right)^{\frac{3}{2}}} \end{array}\right| $

$ =\frac{1}{\sqrt{1+v^{2}/\lambda^{2}}}\left[\frac{u}{\lambda\sqrt{1+v^{2}/\lambda^{2}}}-\frac{uv^{2}}{\lambda^{3}\left(1+v^{2}/\lambda^{2}\right)^{\frac{3}{2}}}\right]-\frac{-uv^{2}}{\lambda^{3}\left(1+v^{2}/\lambda^{2}\right)^{2}} $

$ =\frac{u}{\lambda\left(1+v^{2}/\lambda^{2}\right)}=\frac{\lambda u}{\lambda^{2}+v^{2}}. $

This is nonnegative because $ u\geq0 $ and $ \lambda>0 $, so taking the absolute value leaves it unchanged.
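The closed-form Jacobian can be cross-checked with central finite differences of the inverse map. This is a sketch under arbitrary test points; the step size `h` and helper names are assumptions, and the check is only numerical, not a proof.

```python
import math

def inverse(u, v, lam):
    """(u, v) -> (x, y) from the derivation above."""
    s = math.sqrt(1.0 + v ** 2 / lam ** 2)
    return u / s, v * u / (lam * s)

def jacobian_det_fd(u, v, lam, h=1e-6):
    """Central-difference estimate of the determinant of d(x, y)/d(u, v)."""
    xp, yp = inverse(u + h, v, lam)
    xm, ym = inverse(u - h, v, lam)
    x_u, y_u = (xp - xm) / (2 * h), (yp - ym) / (2 * h)
    xp, yp = inverse(u, v + h, lam)
    xm, ym = inverse(u, v - h, lam)
    x_v, y_v = (xp - xm) / (2 * h), (yp - ym) / (2 * h)
    return x_u * y_v - x_v * y_u

def jacobian_det_closed(u, v, lam):
    """Closed form lam * u / (lam^2 + v^2)."""
    return lam * u / (lam ** 2 + v ** 2)

cases = [(1.0, 0.5, 1.0), (2.3, -1.7, 0.8), (0.4, 3.0, 2.5)]
for u, v, lam in cases:
    print(u, v, lam, jacobian_det_fd(u, v, lam), jacobian_det_closed(u, v, lam))
```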

Because $ \mathbf{X} $ and $ \mathbf{Y} $ are statistically independent,

$ f_{\mathbf{XY}}\left(x,y\right)=f_{\mathbf{X}}\left(x\right)f_{\mathbf{Y}}\left(y\right)=xe^{-x^{2}/2}\cdot\mathbf{1}_{\left[0,\infty\right)}\left(x\right)\cdot\frac{1}{\sqrt{2\pi}}e^{-y^{2}/2}=\frac{x}{\sqrt{2\pi}}e^{-\left(x^{2}+y^{2}\right)/2}\cdot\mathbf{1}_{\left[0,\infty\right)}\left(x\right). $

Substituting these quantities, we get

$ f_{\mathbf{UV}}\left(u,v\right)=f_{\mathbf{XY}}\left(x\left(u,v\right),y\left(u,v\right)\right)\left|\frac{\partial\left(x,y\right)}{\partial\left(u,v\right)}\right|=\frac{u}{\sqrt{1+v^{2}/\lambda^{2}}}\cdot\frac{1}{\sqrt{2\pi}}e^{-u^{2}/2}\cdot\frac{\lambda u}{\lambda^{2}+v^{2}}\cdot\mathbf{1}_{\left[0,\infty\right)}\left(u\right) $

$ =\frac{\lambda^{2}}{\sqrt{2\pi}}u^{2}e^{-u^{2}/2}\cdot\mathbf{1}_{\left[0,\infty\right)}\left(u\right)\cdot\frac{1}{\left(\lambda^{2}+v^{2}\right)^{\frac{3}{2}}}. $
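As a check that this is a valid joint pdf, the sketch below integrates it numerically with a midpoint rule. The integration box $ [0,10]\times[-100,100] $, grid sizes, and $ \lambda=1 $ are arbitrary assumptions; truncating $ v $ at $ \pm100 $ drops probability mass of roughly $ \lambda^{2}/(2\cdot100^{2}) $.

```python
import math

def f_uv(u, v, lam):
    """Joint pdf of (U, V) from part (a); zero for u < 0."""
    if u < 0:
        return 0.0
    return (lam ** 2 / math.sqrt(2 * math.pi)) * u ** 2 * math.exp(-u ** 2 / 2) / (lam ** 2 + v ** 2) ** 1.5

def total_mass(lam, u_max=10.0, v_max=100.0, nu=200, nv=2000):
    """Midpoint-rule approximation of the integral of f_uv over [0, u_max] x [-v_max, v_max]."""
    hu = u_max / nu
    hv = 2 * v_max / nv
    total = 0.0
    for i in range(nu):
        u = (i + 0.5) * hu
        for j in range(nv):
            v = -v_max + (j + 0.5) * hv
            total += f_uv(u, v, lam)
    return total * hu * hv

mass = total_mass(lam=1.0)
print(mass)  # should be close to 1
```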

(b)

Are $ \mathbf{U} $ and $ \mathbf{V} $ statistically independent? Justify your answer.

$ \mathbf{U} $ and $ \mathbf{V} $ are statistically independent iff $ f_{\mathbf{UV}}\left(u,v\right)=f_{\mathbf{U}}\left(u\right)f_{\mathbf{V}}\left(v\right) $.

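Now from part (a), we see that $ f_{\mathbf{UV}}\left(u,v\right)=c_{1}g_{1}\left(u\right)\cdot c_{2}g_{2}\left(v\right) $ for all $ u,v $, where $ g_{1}\left(u\right)=u^{2}e^{-u^{2}/2}\cdot\mathbf{1}_{\left[0,\infty\right)}\left(u\right) $ and $ g_{2}\left(v\right)=\frac{1}{\left(\lambda^{2}+v^{2}\right)^{\frac{3}{2}}} $, with $ c_{1} $ and $ c_{2} $ selected such that $ f_{\mathbf{U}}\left(u\right)=c_{1}g_{1}\left(u\right) $ and $ f_{\mathbf{V}}\left(v\right)=c_{2}g_{2}\left(v\right) $ are both valid pdfs. Using $ \int_{0}^{\infty}u^{2}e^{-u^{2}/2}du=\sqrt{\pi/2} $ and $ \int_{-\infty}^{\infty}\frac{dv}{\left(\lambda^{2}+v^{2}\right)^{3/2}}=\frac{2}{\lambda^{2}} $, these constants are $ c_{1}=\sqrt{2/\pi} $ and $ c_{2}=\lambda^{2}/2 $, and indeed $ c_{1}c_{2}=\frac{\lambda^{2}}{\sqrt{2\pi}} $ matches the constant in part (a). Integrating the joint pdf over $ v $ (or over $ u $) confirms that these are the marginal pdfs, so the joint pdf equals the product of the marginals.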

$ \therefore $ $ \mathbf{U} $ and $ \mathbf{V} $ are statistically independent.
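Independence can also be illustrated by simulation. This Monte Carlo sketch (not part of the original solution) draws $ \mathbf{X} $ by inverting $ F_{\mathbf{X}}(x)=1-e^{-x^{2}/2} $ and $ \mathbf{Y}\sim N(0,1) $, forms $ (\mathbf{U},\mathbf{V}) $, and compares the empirical $ P(\mathbf{U}\leq a,\mathbf{V}\leq b) $ with $ F_{\mathbf{U}}(a)F_{\mathbf{V}}(b) $. It assumes the marginals $ f_{\mathbf{U}}(u)=\sqrt{2/\pi}\,u^{2}e^{-u^{2}/2} $ for $ u\geq0 $ and $ f_{\mathbf{V}}(v)=\frac{\lambda^{2}}{2\left(\lambda^{2}+v^{2}\right)^{3/2}} $, obtained by normalizing the two factors of the joint pdf, whose CDFs are $ F_{\mathbf{U}}(a)=\textrm{erf}(a/\sqrt{2})-\sqrt{2/\pi}\,ae^{-a^{2}/2} $ and $ F_{\mathbf{V}}(b)=\frac{1}{2}+\frac{b}{2\sqrt{\lambda^{2}+b^{2}}} $. The value $ \lambda=2 $, the test points, sample size, and seed are hypothetical.

```python
import math
import random

def simulate_uv(n, lam, seed=2):
    """Draw n samples of (U, V) = (sqrt(X^2 + Y^2), lam * Y / X)."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        # X by inverse CDF: F_X(x) = 1 - exp(-x^2 / 2); 1 - random() lies in (0, 1].
        x = math.sqrt(-2.0 * math.log(1.0 - rng.random()))
        if x == 0.0:
            continue  # V is undefined at X = 0, a probability-zero event
        y = rng.gauss(0.0, 1.0)
        samples.append((math.hypot(x, y), lam * y / x))
    return samples

def cdf_u(a):
    """CDF of f_U(u) = sqrt(2/pi) u^2 exp(-u^2/2) for u >= 0."""
    if a <= 0.0:
        return 0.0
    return math.erf(a / math.sqrt(2.0)) - math.sqrt(2.0 / math.pi) * a * math.exp(-a * a / 2.0)

def cdf_v(b, lam):
    """CDF of f_V(v) = lam^2 / (2 (lam^2 + v^2)^(3/2))."""
    return 0.5 + b / (2.0 * math.sqrt(lam ** 2 + b ** 2))

lam = 2.0
samples = simulate_uv(100_000, lam)
checks = []
for a, b in [(1.0, -1.0), (1.5, 0.5), (2.5, 3.0)]:
    joint = sum(1 for u, v in samples if u <= a and v <= b) / len(samples)
    checks.append((a, b, joint, cdf_u(a) * cdf_v(b, lam)))
for a, b, joint, product in checks:
    print(f"P(U<={a}, V<={b}): empirical {joint:.4f}, F_U * F_V {product:.4f}")
```

Agreement at a few points is consistent with independence but does not prove it; the factorization argument above is what establishes it.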