
Latest revision as of 09:40, 24 April 2008

A feature extractor should extract "distinguishing features that are invariant to irrelevant transformations of the input", such as translation, rotation, scale, occlusion (the effect of one object blocking another object from view), projective distortion, rate, and deformation.

Explanation

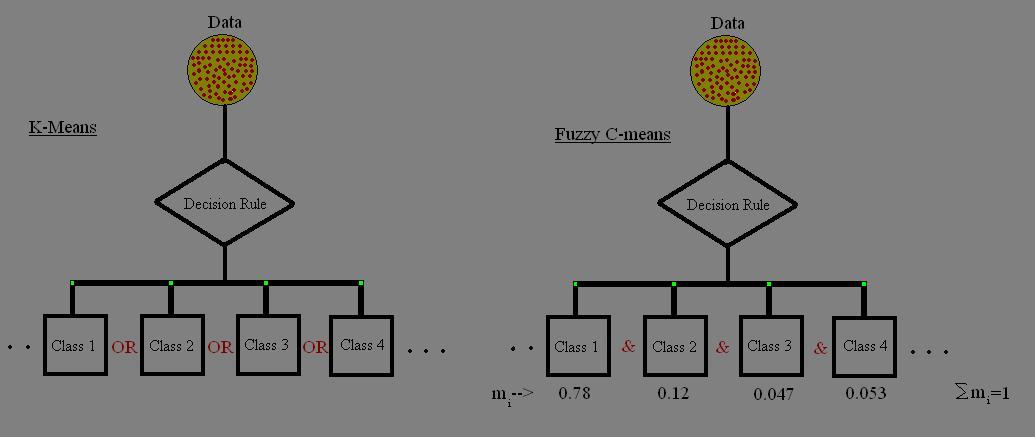

When we set out to classify or identify objects or patterns, it is instructive to find characteristics (features) that set one object apart from another. Feature extraction is the process of extracting a feature, or a set of features, that gives a particular object enough uniqueness to set it apart from the rest. To make things clearer, let us look at this with the help of diagrams.

Titleless Section

Fisher's linear discriminant is a classification method that projects high-dimensional data onto a line and performs classification in this one-dimensional space. For two classes, the projection is chosen to maximize the distance between the projected class means while minimizing the variance within each projected class.

Further reading/Reference: http://www.soe.ucsc.edu/research/compbio/genex/genexTR2html/node12.html
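The projection described above can be sketched in a few lines of Python. This is a minimal illustration restricted to two-dimensional features, not a reference implementation: the function names, the sample points, and the choice of the midpoint between the projected class means as the decision threshold are all assumptions made for this example.

```python
def mean_vector(points):
    """Mean of a list of 2-D points."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(2)]


def scatter_matrix(points, mean):
    """2x2 scatter matrix: sum over points of (x - mean)(x - mean)^T."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = [p[0] - mean[0], p[1] - mean[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fisher_direction(class_a, class_b):
    """Return the projection direction w = Sw^-1 (m_a - m_b) and both class means."""
    m_a, m_b = mean_vector(class_a), mean_vector(class_b)
    s_a, s_b = scatter_matrix(class_a, m_a), scatter_matrix(class_b, m_b)
    # Within-class scatter is the sum of the per-class scatter matrices.
    sw = [[s_a[i][j] + s_b[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    if det == 0:
        raise ValueError("within-class scatter matrix is singular")
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [m_a[0] - m_b[0], m_a[1] - m_b[1]]
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    return w, m_a, m_b


def classify(x, w, m_a, m_b):
    """Project x onto w and compare with the midpoint of the projected means."""
    def project(p):
        return w[0] * p[0] + w[1] * p[1]
    # Assumed threshold: halfway between the two projected class means.
    threshold = (project(m_a) + project(m_b)) / 2
    return "a" if project(x) > threshold else "b"


# Hypothetical training data for two classes.
class_a = [(4.0, 2.0), (5.0, 3.0), (6.0, 2.5), (5.5, 3.5)]
class_b = [(1.0, 1.0), (2.0, 2.0), (1.5, 0.5), (2.5, 1.5)]
w, m_a, m_b = fisher_direction(class_a, class_b)
print(classify((5.0, 3.0), w, m_a, m_b))
print(classify((1.5, 1.0), w, m_a, m_b))
```

Because w points from the mean of class b toward the mean of class a (after scaling by the inverse scatter), class a always projects to the larger value, which is why a single comparison against the threshold suffices.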

The minimum distance classifier assigns unknown image data to the class that minimizes the distance between the image data and the class in multi-feature space. Distance serves as an index of similarity, so the minimum distance corresponds to the maximum similarity.

Further reading/Reference: http://www.profc.udec.cl/~gabriel/tutoriales/rsnote/cp11/cp11-6.htm
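As a rough sketch of this idea, the example below represents each class by the mean of its training vectors and assigns an unknown vector to the class with the nearest mean. Euclidean distance is only one possible choice of distance, and the class labels and two-band pixel values are hypothetical values chosen for illustration.

```python
import math


def class_means(training):
    """Compute the mean feature vector for each class label."""
    means = {}
    for label, vectors in training.items():
        n = len(vectors)
        dims = len(vectors[0])
        means[label] = [sum(v[i] for v in vectors) / n for i in range(dims)]
    return means


def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify_min_distance(x, means):
    """Return the label whose class mean is closest to x."""
    return min(means, key=lambda label: euclidean(x, means[label]))


# Hypothetical two-band pixel values for three land-cover classes.
training = {
    "water": [(10, 5), (12, 7), (11, 6)],
    "vegetation": [(40, 80), (42, 78), (38, 82)],
    "soil": [(90, 60), (88, 62), (92, 58)],
}
means = class_means(training)
print(classify_min_distance((15, 10), means))
```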

Artificial neural networks are relatively crude electronic networks of "neurons" based on the neural structure of the brain. They process records one at a time and "learn" by comparing their classification of each record (which, at the outset, is largely arbitrary) with the record's known actual classification; the resulting errors are fed back to adjust the network.

Further reading/Reference: http://www.resample.com/xlminer/help/NNC/NNClass_intro.htm
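The record-by-record learning loop can be illustrated with a single perceptron, which is the simplest possible "network" and is used here only as a stand-in for larger ones. The function names, the learning rate, the number of epochs, and the logical-AND training data are assumptions made for this sketch.

```python
def predict(weights, bias, x):
    """Classify one record: output 1 if the weighted sum is positive, else 0."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(records, labels, epochs=20, rate=1.0):
    """Process records one at a time, adjusting weights after each mistake."""
    weights = [0.0] * len(records[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(records, labels):
            # Compare the network's classification with the known one.
            error = target - predict(weights, bias, x)
            # Feed the error back: no change when the classification is correct.
            weights = [w + rate * error * xi for w, xi in zip(weights, x)]
            bias += rate * error
    return weights, bias


# Hypothetical training set: the logical AND of two binary inputs.
records = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(records, labels)
print([predict(weights, bias, x) for x in records])
```

A single perceptron can only learn linearly separable classifications such as this one; multi-layer networks follow the same compare-and-adjust pattern but propagate the error through hidden layers.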

The following reference introduces a global optimization technique for statistical classifier design that aims to minimize the probability of classification error.

Further reading/Reference: http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.aos/1079120131

Feature extraction can also be performed using intuition, with varying degrees of success. One example is found in automotive intelligence applications such as automatic lane departure warning systems. Here, three features are chosen to identify lanes in a stream of images from a video camera.

Further reading/Reference: Flexible Low Cost Lane Departure Warning System, http://myfreefilehosting.com/f/a82757365d_0.48MB

Oster, K., Fornero, M., Lingenfelter, D., Litkouhi, S., Lingenfelter, D., Ong, R., “Flexible Low Cost Lane Departure Warning System,” presented at the World Congress of the Society of Automotive Engineers, Detroit, MI, April 2007.