
Latest revision as of 10:25, 29 November 2022

AI Art

(Jason Allen) The AI-generated artwork that won at the Colorado State Fair in 2022.

The Birth of AI Art and a Quick Rundown

Before we explore how AI art came to be, let's define what it is. AI art is art generated with the help of artificial intelligence. The field of artificial intelligence focuses on training models, powered by algorithms, that try to emulate human intelligence. These models are trained by feeding large amounts of data through complex mathematical equations, with the end goal of the model “teaching itself” how to generate a desired output. For a model to generate an image of a given topic, it must be trained on large amounts of data (images, etc.) labeled with their corresponding topics, so that it learns what a "desirable" output looks like. AI art got its big start in 2014, when the researcher Ian Goodfellow published a paper introducing generative adversarial networks (GANs). The paper proposed that GANs could be the next step for neural networks because they could be used to produce completely new images. Without getting too technical, a GAN works in two parts. In the first, “generative” part, an algorithm attempts to create something, for example an image of a flower. In the second, “adversarial” part, a second algorithm that has learned to tell two things apart, for example images of flowers and images of non-flowers, gives feedback on whether the generated image looks like a flower. These steps repeat until the second algorithm is fooled, that is, until it can no longer tell the difference between flower images created by the first algorithm and real images of flowers. Once it was shown that computers could generate realistic-looking images, it was only a matter of time until AI art took the world by storm.

How is AI Art Generated?

- General steps:

- The user inputs any text they want.

- The model processes the text, turning it into usable data and analyzing its semantic elements.

- The processed text then goes through a “diffusion process”, which introduces random noise, so the art generator produces something different every time.

- The AI uses the new data to create the art. The art is usually made using a GAN (generative adversarial network), a style of algorithm made up of two parts: one part that creates something and another part that judges it. The GAN creates a feedback loop in which the creator makes a piece of art and the judge decides whether or not it matches the prompt. If it doesn’t, the creator tries again based on the feedback, and the process repeats until the judge decides that the art matches the prompt.

- The model then takes the k best images, where k is a number provided to the model and the quality of each image is determined by the judge, and provides those images as output.

- Additional Information:

- Usually this process takes about 5 minutes on an average computer, so there are websites that do all the backend processing for you, making it a lot faster.

- Models typically have at least 2 parts, the creator and the judge, but sometimes they can have more. For example, a model might have an image encoder to turn images into numbers, a part that turns text into images (creator), and a part that judges the images (judge).

- The process of transferring the art style of one piece to another piece is called NST (neural style transfer).
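The creator-and-judge loop and the "k best images" step described above can be sketched in a few lines of Python. This is only a toy illustration: `creator`, `judge`, and `generate_k_best` are hypothetical names, the "images" are short lists of numbers, and no learning takes place, so it is not a real GAN.

```python
import random


def creator(prompt_vector, rng):
    """Hypothetical creator: proposes a random candidate 'image' (a list of numbers)."""
    return [rng.uniform(0.0, 1.0) for _ in prompt_vector]


def judge(candidate, prompt_vector):
    """Hypothetical judge: a higher score means the candidate is closer to the prompt."""
    return -sum((c - p) ** 2 for c, p in zip(candidate, prompt_vector))


def generate_k_best(prompt_vector, k, attempts=100, seed=0):
    """Create many candidates, score each with the judge, and keep the k best."""
    rng = random.Random(seed)
    candidates = [creator(prompt_vector, rng) for _ in range(attempts)]
    candidates.sort(key=lambda c: judge(c, prompt_vector), reverse=True)
    return candidates[:k]


# Stand-in for a prompt that has already been turned into numbers.
prompt = [0.2, 0.8, 0.5]
best = generate_k_best(prompt, k=3)
for image in best:
    print([round(value, 2) for value in image], round(judge(image, prompt), 4))
```

In a real system the creator would improve based on the judge's feedback rather than guessing at random; here the judge is used only to rank the guesses.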

Math of Neural Networks

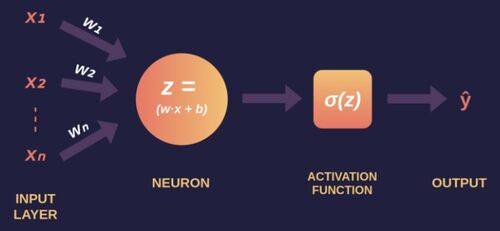

In just a second, we will explain a specific example of an AI art model, but what do we mean when we say "model"? In most cases, especially in recent years, we are referring to a neural network or a combination of neural networks. For example, the GAN that we mentioned earlier is composed of 2 neural networks. Neural networks can get quite complicated, but at a basic level they are just a collection of neurons that take in data, do something with it, and then output the result. An example of one of these neurons is shown below:

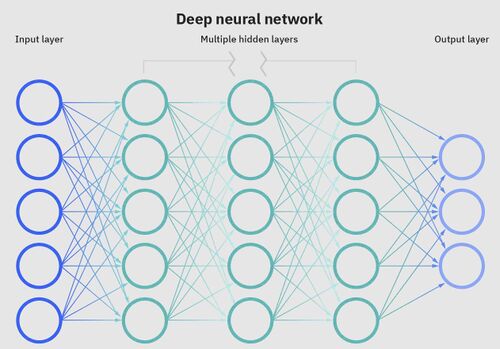

Each neuron has a number of inputs $ x_1, x_2, \dots, x_n $, and each of those inputs has a weight associated with it. We calculate the total input into the neuron as the sum of each input times its associated weight, which looks like $ x_1 w_1 + x_2 w_2 + \dots + x_n w_n $; this is most often computed as the dot product of the input and weight vectors. We then add a bias to the total input to get the adjusted input. The bias essentially works like a y-intercept: you just add its value to the total input, and it makes the network more flexible. Finally, we put this adjusted input through a function called an activation function to get the output of the neuron. This activation function is often the sigmoid function, which is defined as $ \text{sigmoid}(x) = \frac{1}{1+e^{-x}} $. We use an activation function to keep the neuron from being a purely linear operation, which would restrict what we could calculate. We can then adjust the weights and the bias of the neuron so that it does different things depending on its inputs. A neuron by itself can’t do a lot, but when you combine a bunch of them you can get some pretty interesting results. This is where the network part of neural networks comes in. The network is composed of neurons combined into layers: one input layer, where we input our data; any number of hidden layers, where the processing is done; and an output layer, where we get the result that we are looking for. An example of what this looks like is shown below:
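Putting these pieces together, the whole computation of a single neuron fits in one formula. The vector notation is an assumption made here for compactness: $ \mathbf{x} $ is the vector of inputs, $ \mathbf{w} $ is the vector of weights, $ b $ is the bias, and $ y $ is the neuron's output.

$ y = \text{sigmoid}(\mathbf{w} \cdot \mathbf{x} + b) = \frac{1}{1 + e^{-(x_1 w_1 + x_2 w_2 + \dots + x_n w_n + b)}} $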

Here is an example of the full computation of a simple neural network with 10 inputs and 1 output layer containing 1 neuron. Our input vector is $ \begin{bmatrix} 0 \\ 0.1 \\ 0.2 \\ 0.3 \\ 0.4 \\ 0.5 \\ 0.6 \\ 0.7 \\ 0.8 \\ 0.9 \end{bmatrix} $. The weights for the neuron in the output layer are $ \begin{bmatrix} 0.5 \\ 0.5 \\ 0.5 \\ 0.5 \\ 0.5 \\ 0.5 \\ 0.5 \\ 0.5 \\ 0.5 \\ 0.5 \end{bmatrix} $ with a bias of 0.1. We start by finding the total input for the neuron in the output layer. We can do this by multiplying the matrix of weights (here a single row, since there is only one neuron) by the input vector, since this is equivalent to adding up each input times its weight:

$ \begin{bmatrix} 0.5 & 0.5 & 0.5 & 0.5 & 0.5 & 0.5 & 0.5 & 0.5 & 0.5 & 0.5 \end{bmatrix} \begin{bmatrix} 0 \\ 0.1 \\ 0.2 \\ 0.3 \\ 0.4 \\ 0.5 \\ 0.6 \\ 0.7 \\ 0.8 \\ 0.9 \end{bmatrix} = 2.25 $

Next, we add the bias to this:

$ 2.25 + 0.1 = 2.35 $

Finally, we run the sigmoid function on the adjusted input:

$ \text{sigmoid}(2.35) = \frac{1}{1+e^{-2.35}} \approx 0.9129 $

Then the output of the neural network is:

0.9129
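The same computation can be checked with a short Python sketch. The function names `sigmoid` and `neuron_output` are chosen here for illustration, and the weights are the 0.5 values that appear in the matrix product above.

```python
import math


def sigmoid(x):
    """The sigmoid activation function: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs, plus the bias, passed through sigmoid."""
    total_input = sum(x * w for x, w in zip(inputs, weights))
    adjusted_input = total_input + bias
    return sigmoid(adjusted_input)


inputs = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
weights = [0.5] * 10
bias = 0.1

output = neuron_output(inputs, weights, bias)
print(round(output, 4))  # 0.9129
```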

With random numbers, this doesn't mean much. But if the inputs were the percentages a student earned on the first 10 homework assignments and the output were the likelihood that the student will pass the class, the output would have context and could be used to identify students who might need extra assistance. Furthermore, while the example shown here doesn't use a hidden layer, most complex models use many hidden layers. For example, DALL-E 2, a model that we will talk about next, has at least 1.7 billion nodes spread out over about 100 hidden layers. With this increase in nodes and hidden layers comes a massive increase in what the neural network can learn. The question then becomes how to adjust the weights and biases of the nodes so that the neural network outputs what we want. That question is a little beyond what we can explain here, but if you are interested, look up backpropagation.

Since the example network didn't contain any hidden layers, here is a quick explanation of how they work. Each neuron in a hidden layer works essentially the same way as the output neuron did, except that its output is passed on to the next layer instead of being read off as the final result. Each neuron in the hidden layer has inputs and associated weights from the previous layer, as well as outputs and associated weights to the next layer. Each neuron adds up its total input, adds the bias, applies the sigmoid function to the adjusted input, and outputs the result. This is repeated for each hidden layer until the output layer is reached.
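As a rough sketch of this layer-by-layer process, the Python below passes an input through one hidden layer and then an output layer. The function names, the layer sizes, and every weight and bias are made up for illustration; a real network would learn these values during training.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weight_rows, biases):
    """Compute the output of every neuron in one layer.

    weight_rows holds one list of weights per neuron in the layer.
    """
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, biases)
    ]


def network_forward(inputs, layers):
    """Feed the inputs through each layer in turn; each layer's output is the next layer's input."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer_forward(activations, weight_rows, biases)
    return activations


# Hypothetical network: 2 inputs -> hidden layer of 2 neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                      # output layer
]
result = network_forward([1.0, 2.0], layers)
print(result)
```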

Specific AI Art Example

“a corgi’s head depicted as the explosion of a nebula”

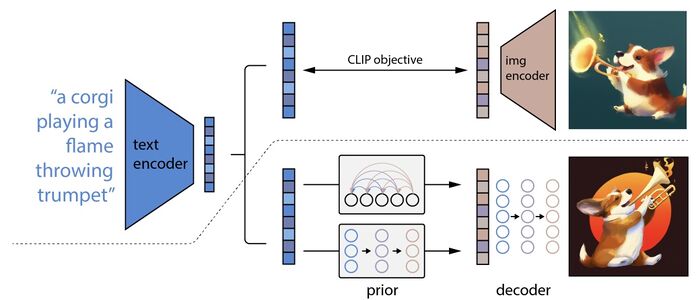

Here is an example of a piece of AI art created by the DALL-E 2 model from OpenAI. The process used to create it is illustrated in the graphic below.

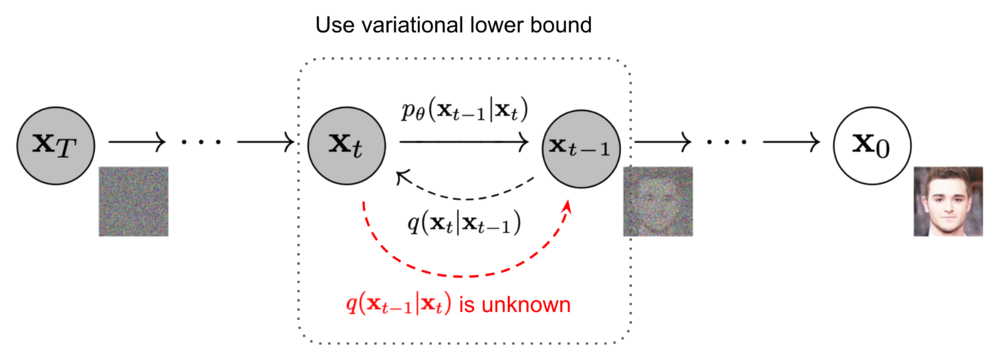

Let’s take a closer look at exactly how DALL-E 2 goes about creating this image from the text caption. There are 3 main steps: encoding the text caption, translating this text encoding into an image encoding, and decoding the image encoding into an image. The first step, encoding the text caption, consists of taking the text and encoding it into a vector that represents the text. This vector points to a place in a latent space where points that are closer to each other represent similar text captions. For example, the vectors representing “dog” and “cat” would be closer to each other than the vectors representing “dog” and “building”. Next, the text encoding is turned into an image encoding, which is another vector that represents an image, again with vectors that are closer to each other representing similar images. This step is done through the use of CLIP, another model by OpenAI, which is trained to relate text encodings and image encodings so that we can determine how well a text encoding matches an image encoding. The model starts with an average image encoding and updates it over time so that it gets closer and closer to the text encoding that we provide. Finally, this image encoding is decoded into an actual image. This is done through the use of something called a diffusion model, specifically a model called GLIDE, which was also developed by OpenAI. In essence, a diffusion model takes an “average” image, one that looks like colorful static, and step by step transforms it into an image that we would recognize. An example of this is shown below.
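To make the idea of "closeness" in a latent space concrete, here is a minimal sketch that compares toy encodings using cosine similarity, a common way to measure how closely two vectors point in the same direction. The three-number vectors are invented for illustration; real encodings have hundreds of dimensions and are produced by a trained model.

```python
import math


def cosine_similarity(a, b):
    """Return a value near 1 when two vectors point the same way, lower otherwise."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical encodings, made up for this example only.
dog = [0.9, 0.8, 0.1]
cat = [0.8, 0.9, 0.2]
building = [0.1, 0.2, 0.9]

print(cosine_similarity(dog, cat))       # high: similar captions
print(cosine_similarity(dog, building))  # lower: dissimilar captions
```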

This diffusion model is trained by slowly turning an image into noise and learning to turn the noise back into the original image. We can control what image it creates by conditioning each step on the image encoding we created. "Conditioning" is meant in the statistical sense, i.e., given this image encoding, what should the image look more like? As you can see, at each step the image gets slightly less noisy until in the end we are left with a coherent image. This step is also one of the reasons why DALL-E 2 can generate multiple different images from the same text prompt: the diffusion step is not deterministic, so if you run the process multiple times you will get a different result each time. While DALL-E 2 might seem like a monolithic entity, it is actually composed of a number of smaller models that work together to create its result.
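The following toy sketch imitates the shape of this denoising loop. It is not GLIDE or any trained diffusion model: the function name `toy_reverse_diffusion`, the step size of 0.2, and the noise schedule are all assumptions, and the "denoiser" is a stand-in that simply nudges the image toward a conditioning target. It does show the two properties described above: the result gets less noisy at each step, and different random starting noise gives a different result.

```python
import random


def toy_reverse_diffusion(target, steps=50, seed=None):
    """Start from pure noise and move step by step toward `target`.

    `target` stands in for the conditioning information (the image encoding).
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # the starting "static"
    for t in range(steps, 0, -1):
        noise_scale = 0.1 * (t - 1) / steps  # fresh noise shrinks to 0 at the last step
        x = [
            xi + 0.2 * (ti - xi) + noise_scale * rng.gauss(0.0, 1.0)
            for xi, ti in zip(x, target)
        ]
    return x


target = [0.2, 0.8, 0.5, 0.1]
run_a = toy_reverse_diffusion(target, seed=1)
run_b = toy_reverse_diffusion(target, seed=2)
print([round(v, 3) for v in run_a])
print([round(v, 3) for v in run_b])  # close to run_a, but not identical
```

Both runs end up near the target, but because each run uses different random noise, the two outputs differ slightly.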

Effects of AI Art on Society

Perpetuates existing biases and stereotypes

Most algorithms that power AI art use data pulled from the internet, and the internet is a place filled with biases. The algorithms then generate images that reflect certain biases depending on the prompt. Taking DALL-E 2 as an example, the prompt “restaurant” will generate images that depict Western settings and styles, and the prompt “nurse” will generate images of people who are female-passing. Generating these types of images will further proliferate biased images on the internet, creating a feedback loop and homogenizing AI art as a whole.

Copyright issues with using artwork in AI training data

Since the models pull images from the internet, innumerable artworks are used without the artists’ permission. This can lead to images that are generated directly in an artist’s style; all that is needed is to put the artist’s name in the prompt. Take the artist Greg Rutkowski as an example. His fantastical and ethereal style is one of the most commonly used in prompts, and people can easily create “Rutkowski art” without ever consulting him. Also, since a model can create mimetic art based on its inputs, a company that sees an image it wants to use can feed that image into an AI art model and quickly generate a similar image while bypassing copyright laws. The solution to these legal issues is currently not clear.

Impact on the art industry

The increasing prevalence of AI art may reduce the need for artists, since people can generate art rather than hire an artist. AI art also undermines the creative process of traditional artists, since it generates art with the click of a button. However, just like the camera or Photoshop, AI-generated art can be used as a tool; artists can use AI art as a starting point for creativity rather than as a finished product. It may also lower the barrier to entry for artists, since it is much easier to have a working product from the beginning. The effects of AI art on the art industry will be determined by the users themselves, not the technology.
