Revision as of 11:29, 23 November 2019


Formant Analysis Using Wideband Spectrographic Representation

By user:BJH (Brandon Henman)

Topic Background

Like most systems in the real world, the human voice can be broken down and modeled as a system that takes real inputs (vibration of the vocal cords) and transforms them (via the vocal tract) into real outputs (human speech).

The vocal tract itself resonates at certain frequencies, called formant frequencies, which depend on how a person shapes their vocal tract to pronounce particular phonemes, the "building blocks" of speech sounds.

This makes sense logically: our brains tell phonemes apart by the frequency ranges they occupy in order to process speech signals. Otherwise, all sounds would mean the same thing!

For our brains to have universally recognized phonemes, it follows that a phoneme has roughly the same formant frequencies for everyone, e.g. "you" sounds like "yoo" for everyone (within the same language; differences between languages are an entirely different topic).

Interestingly enough, this implies that variations in pitch between individuals, caused by differences in vocal cord size (which affects the vibration frequency of the vocal folds when voicing), do NOT affect the resonant frequencies of the vocal tract.

Think of the vocal tract as the variable-spray nozzle on a garden hose. The water pressure/flow is your vocal cords applying an input to the nozzle (your vocal tract). The nozzle then selects the spray setting that shapes the water flow before it spreads out from the end of the hose. The resulting spray looks the same to you and to the outside world, just like your speech!
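The independence of pitch and resonance can be illustrated with a toy source-filter model. The MATLAB sketch below is not part of the original analysis. It assumes a single two-pole resonator at a hypothetical 1000 Hz standing in for the vocal tract, impulse trains at hypothetical pitches of 100 Hz and 200 Hz standing in for the vocal cords, and an arbitrarily chosen pole radius of 0.97. The resonance is deliberately placed on a harmonic of both pitches so that the spectral peak is easy to read off.

```matlab
% Toy source-filter model (all numeric values are illustrative assumptions).
fs = 16000;                 % sampling rate, matching the recordings
F  = 1000;                  % hypothetical resonance ("formant") in Hz
r  = 0.97;                  % assumed pole radius (controls the bandwidth)
a  = [1, -2*r*cos(2*pi*F/fs), r^2];   % two-pole resonator: the "nozzle"

pitches = [100, 200];       % two hypothetical speakers' pitches in Hz
peakHz  = zeros(size(pitches));
for k = 1:numel(pitches)
    src = zeros(fs, 1);                       % 1 s of signal
    src(1:round(fs/pitches(k)):end) = 1;      % impulse train: the "water flow"
    y   = filter(1, a, src);                  % shape the source with the resonator
    Y   = abs(fft(y));                        % magnitude spectrum
    [~, idx] = max(Y(1:fs/2));                % strongest bin below Nyquist
    peakHz(k) = idx - 1;                      % bins are 1 Hz apart for a 1 s signal
end
```

Under these assumptions both entries of `peakHz` should sit at the resonator frequency even though the pitch doubles. With a real voice the peak lands on whichever harmonic is closest to the formant, so the match is only as fine as the harmonic spacing.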

Audio Files

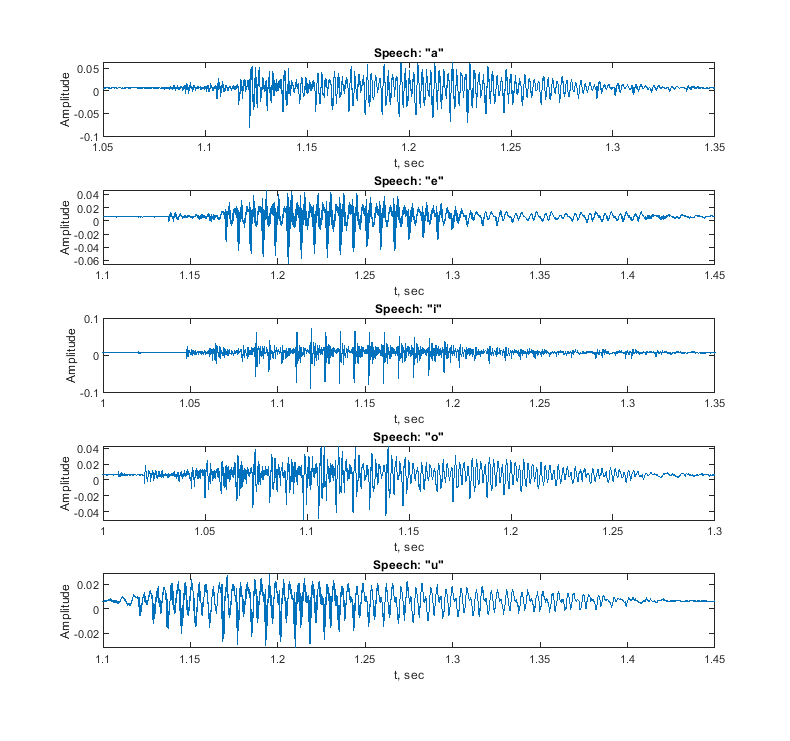

Here are some examples of voiced phonemes that will be analyzed for their formant frequencies:

- Custom-recorded, 16 kHz sampling rate

Me saying "a" :Media:Vowels_voiced_a.wav ("ay")

Me saying "e" :Media:Vowels_voiced_e.wav ("ee")

Me saying "i" :Media:Vowels_voiced_i.wav ("eye")

Me saying "o" :Media:Vowels_voiced_o.wav ("oh")

Me saying "u" :Media:Vowels_voiced_u.wav ("yoo")

Time Domain Representation of Recorded Signals:

Methodology

- Convert the audio to single-channel

- Plot time-domain representations of voiced phonemes

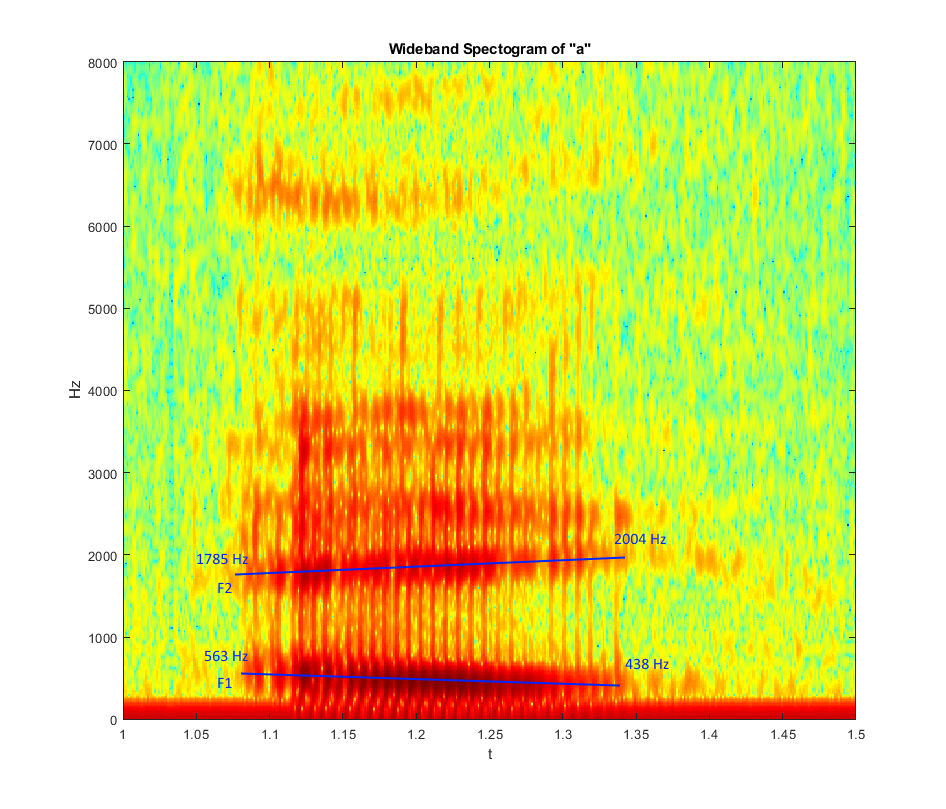

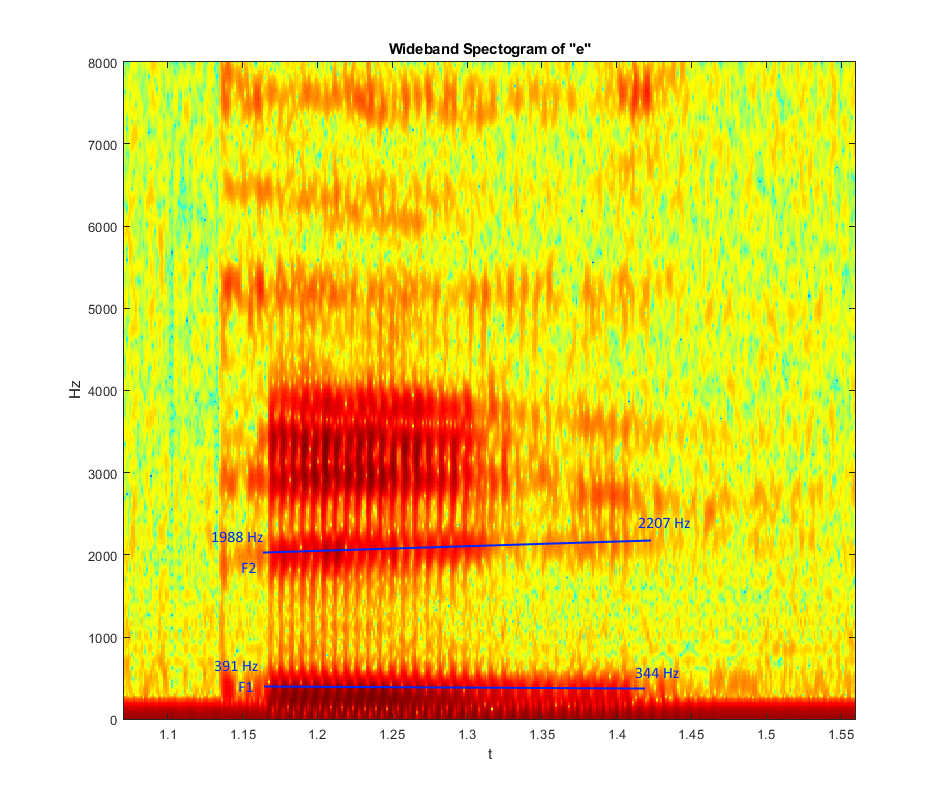

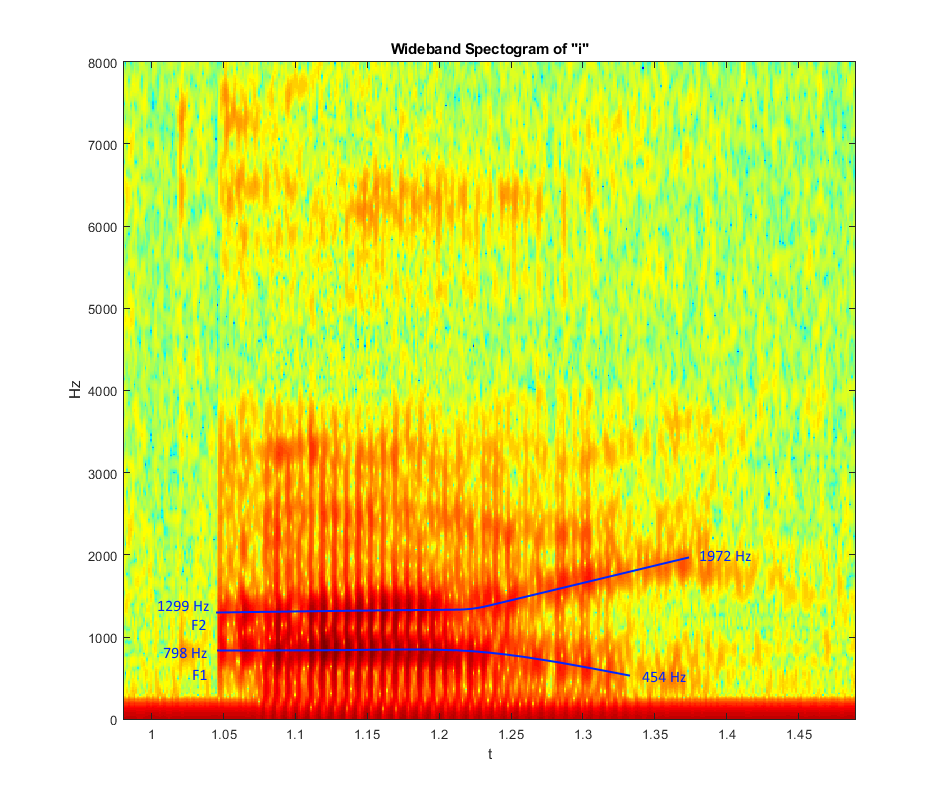

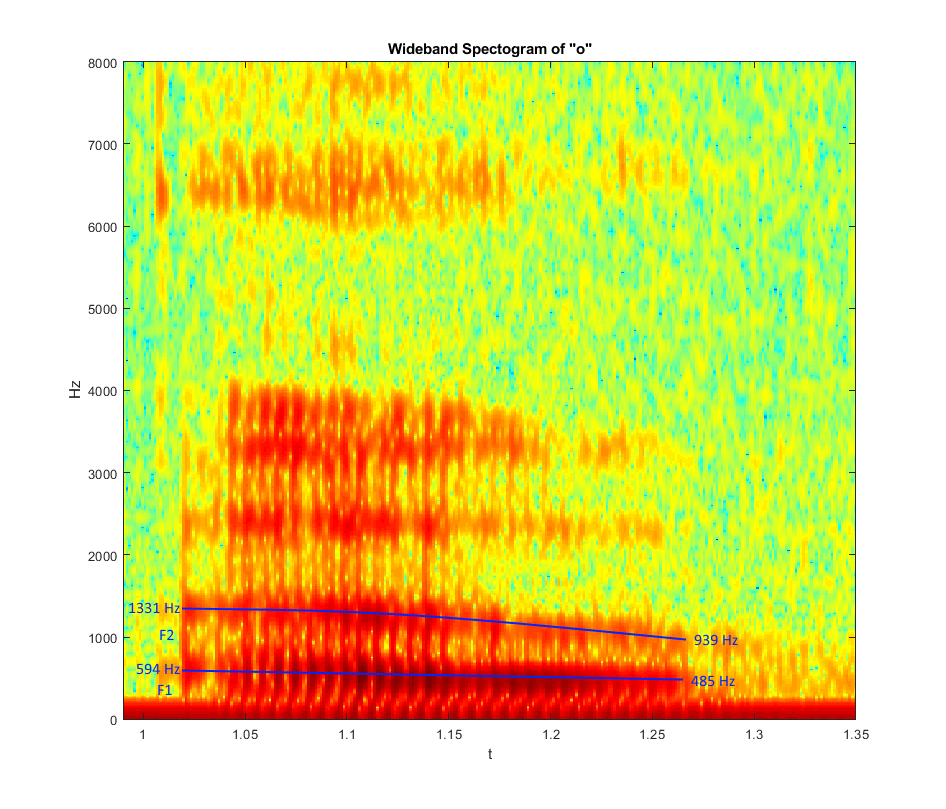

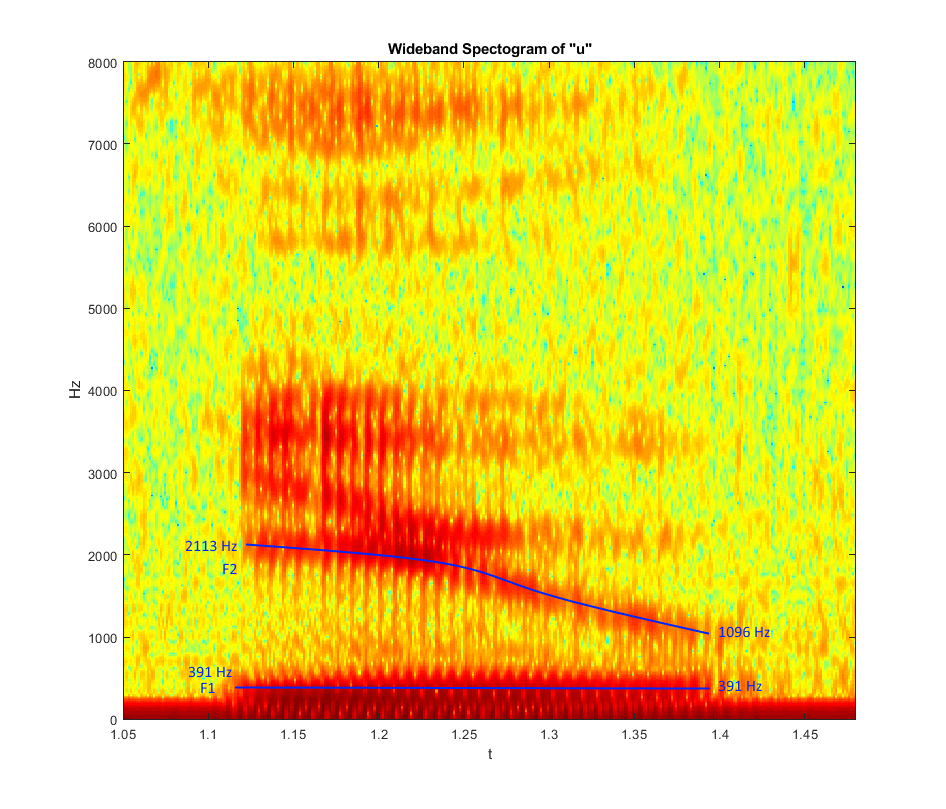

- Create spectrographic representations of each signal by sliding an N-point DFT window along the length of the input signal and storing each resulting DFT as a column of an output matrix (wideband spectrogram)

- Identify formant frequencies F1 and F2 at the beginning and end of speech

- Compare formant frequencies of custom signals to commonly accepted values of voiced phonemes
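The first and third steps above can be sketched in MATLAB as follows. This is a minimal illustration rather than the code used for the results. It runs on a synthetic 700 Hz stereo tone instead of the recordings, and the window length N = 64 (4 ms at 16 kHz, short enough to count as wideband), the hop of 16 samples, and the Hann window are all assumed values.

```matlab
% Synthetic stand-in for a recording (assumption). A real file could be
% loaded instead with: [x, fs] = audioread('Vowels_voiced_a.wav');
fs = 16000;                                  % sampling rate of the recordings
t  = (0:fs/2-1)' / fs;                       % 0.5 s time axis
x  = [sin(2*pi*700*t), sin(2*pi*700*t)];     % hypothetical two-channel tone

% Step 1: convert to single channel by averaging the channels
x = mean(x, 2);

% Step 3: slide an N-point window along x; each DFT becomes one column
N    = 64;                                   % assumed window length
hop  = 16;                                   % assumed shift between windows
w    = 0.5 - 0.5*cos(2*pi*(0:N-1)'/N);       % Hann window, written out explicitly
nCol = floor((length(x) - N)/hop) + 1;       % number of full windows
S    = zeros(N/2 + 1, nCol);                 % output matrix (rows = frequency)
for k = 1:nCol
    seg = x((k-1)*hop + (1:N)') .* w;        % windowed frame
    X   = fft(seg, N);                       % N-point DFT of the frame
    S(:, k) = abs(X(1:N/2 + 1));             % keep 0 Hz up to Nyquist
end
f = (0:N/2)' * fs / N;                       % frequency of each row in Hz

% Sanity check: strongest row of the time-averaged spectrogram
[~, idx] = max(mean(S, 2));
peakHz = f(idx);                             % nearest DFT bin to the 700 Hz tone
```

The matrix can be displayed with `imagesc((0:nCol-1)*hop/fs, f, 20*log10(S + eps)); axis xy;`. With 64-point windows the rows are 250 Hz apart, so `peakHz` falls on the bin nearest the tone and formant readings taken this way are coarse. Zero-padding the DFT gives a finer (interpolated) frequency grid without lengthening the window.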

Matlab Code

Spectrogram Results & Formant Analysis

Wideband Spectrogram of A:

Wideband Spectrogram of E:

Wideband Spectrogram of I:

Wideband Spectrogram of O:

Wideband Spectrogram of U: