| (11 intermediate revisions by 2 users not shown) | |||

| Line 6: | Line 6: | ||

<center><font size= 4> | <center><font size= 4> | ||

| − | Logistic regression | + | Introduction to Logistic regression and its implementation |

</font size> | </font size> | ||

| − | A [ | + | A [http://www.projectrhea.org/learning/slectures.php slecture] by [[ECE]] student Borui Chen |

| − | Partly based on the [[ | + | Partly based on the [[2014_Spring_ECE_662_Boutin_Statistical_Pattern_recognition_slectures|ECE662 Spring 2014 lecture]] material of [[user:mboutin|Prof. Mireille Boutin]]. |

</center> | </center> | ||

---- | ---- | ||

| Line 17: | Line 17: | ||

== Introduction == | == Introduction == | ||

| − | In the field of machine learning, one | + | In the field of machine learning, one major topic is classification problem. Linear classifier is a class of algorithms that make the classification decision on a new test data point base on a linear combination of the features. |

| − | There are two classes of linear classifier: Generative model and Discriminative model: | + | There are mainly two classes of linear classifier: Generative model and Discriminative model: |

* The generative model measures the joint distribution of the data and class. | * The generative model measures the joint distribution of the data and class. | ||

| Line 34: | Line 34: | ||

<center>[[Image:Cbr_intuition.png]]</center> | <center>[[Image:Cbr_intuition.png]]</center> | ||

| − | Clearly if a person's hair length is comparably long, being a female is more likely. If there is another person with hair length 10, we could say it's more likely to be a female. However, we don't have a description about how long is long enough to say a person is female. So we introduce the probability. | + | Clearly if a person's hair length is comparably long, being a female is more likely. If there is another person with hair length 10, we could say it's more likely to be a female. However, we don't have a description about how long is long enough to say a person is female. So we introduce the probability. We want to say that given a hair length is 10 inches, the probability of the person being a female is close to 1. |

The intuition of logistic regression, in this example, is to assign a continuous probability to every possible value of hair length so that for longer hair length the probability of being a female is close to 1 and for shorter hair length the probability of being a female is close to 0. It is done by taking the linear combination of the feature and a constant and feeding it into a logistic function: | The intuition of logistic regression, in this example, is to assign a continuous probability to every possible value of hair length so that for longer hair length the probability of being a female is close to 1 and for shorter hair length the probability of being a female is close to 0. It is done by taking the linear combination of the feature and a constant and feeding it into a logistic function: | ||

| Line 79: | Line 79: | ||

<center><math>\beta^T x_i = log\frac{Pr(female)}{Pr(male)}=log\frac{Pr(female)}{1-Pr(female)}</math></center> | <center><math>\beta^T x_i = log\frac{Pr(female)}{Pr(male)}=log\frac{Pr(female)}{1-Pr(female)}</math></center> | ||

| − | Having this setup, the goal is to find a <math>\beta</math> to let the curve fit the data optimally. | + | Having this setup, the goal is to find a <math>\beta</math> to let the curve fit the data optimally. To solve the problem, it comes about Maximum Likelihood Estimation and Newton's method. |

---- | ---- | ||

| Line 133: | Line 133: | ||

<center><math> | <center><math> | ||

\begin{align} | \begin{align} | ||

| − | \frac{\partial}{\partial\beta_j}\log Pr(y|x)&=\sum_{i=1}^n y_ix_{ij}(1-\alpha_i) | + | \frac{\partial}{\partial\beta_j}\log Pr(y|x)&=\sum_{i=1}^n y_ix_{ij}(1-\alpha_i)+(1-y_i)x_{ij}\alpha_i\\ |

| − | + | ||

&= \sum_{i=1}^n (y_i - \alpha_i)x_{ij} | &= \sum_{i=1}^n (y_i - \alpha_i)x_{ij} | ||

\end{align} | \end{align} | ||

| Line 140: | Line 139: | ||

If we set the above equation to zero, there is no close form solution, so we are going to use numerical optimization method to maximized the likelihood, namely, Newton's method. | If we set the above equation to zero, there is no close form solution, so we are going to use numerical optimization method to maximized the likelihood, namely, Newton's method. | ||

| + | |||

| + | '''Note''' | ||

| + | |||

| + | The goal is to maximize the above equation, however, in Newton's method it's easier to minimize the negative of the log likelihood function. In this case the derivatives becomes | ||

| + | |||

| + | <center><math> | ||

| + | \frac{\partial}{\partial\beta_j}\log Pr(y|x)=\sum_{i=1}^n (\alpha_i-y_i)x_{ij} | ||

| + | </math></center> | ||

| + | |||

---- | ---- | ||

| Line 145: | Line 153: | ||

To apply the Newton's method, we need a gradient function of log likelihood with respect to <math>\beta</math>, together with a Hessian matrix. from the last part, we've already computed the derivative for each <math>\beta_i</math>, now we need to write it in matrix form. | To apply the Newton's method, we need a gradient function of log likelihood with respect to <math>\beta</math>, together with a Hessian matrix. from the last part, we've already computed the derivative for each <math>\beta_i</math>, now we need to write it in matrix form. | ||

| + | |||

| + | <center><math> | ||

| + | \text{let }A= | ||

| + | \begin{bmatrix} | ||

| + | x_{11} & \cdots & x_{1d}\\ | ||

| + | \vdots & \ddots & \vdots \\ | ||

| + | x_{n1} & \cdots & x_{nd} | ||

| + | \end{bmatrix} | ||

| + | </math></center> | ||

| + | |||

| + | where d is the dimension of the feature vector, n is size of training data set. | ||

| + | |||

| + | The gradient can be written as: | ||

| + | |||

| + | <center><math> | ||

| + | g(x,\beta) = \nabla \log Pr(y|x) = (\alpha-y)^T A | ||

| + | </math></center> | ||

| + | |||

| + | for the Hessian matrix, consider taking the derivative of the log likelihood function with respect to <math>\beta_j \text{ and }\beta_k</math>. | ||

| + | |||

| + | <center><math> | ||

| + | \begin{align} | ||

| + | \frac{\partial^2}{\partial\beta_j \partial\beta_k}\log Pr(y|x)&=\frac{\partial}{\partial\beta_k}\sum_{i=1}^n \alpha_ix_{ij}\\ | ||

| + | &=\sum_{i=1}^n x_{ij}x_{ik}\alpha_i(1-\alpha_i)\\ | ||

| + | &\triangleq z_j^T B z_k | ||

| + | \end{align} | ||

| + | </math></center> | ||

| + | |||

| + | where <math>z_j = (x_{1j},\cdots,x_{nj})^T, B = diag(\alpha_1(1-\alpha_1),\cdots,\alpha_n(1-\alpha_n))</math>. Then, can write Hessian matrix: | ||

| + | |||

| + | <center><math> | ||

| + | H(x,\beta) = \nabla^2 \log Pr(y|x) = A^TBA | ||

| + | </math></center> | ||

| + | |||

| + | With the equations of Gradient and Hessian matrix, we plug them into Newton's method iteration: | ||

| + | |||

| + | <center><math> | ||

| + | \beta_{k+1} = \beta_k - H^{-1}g | ||

| + | </math></center> | ||

| + | |||

| + | with some initialization <math>\beta_0</math>. | ||

| + | |||

| + | ---- | ||

| + | |||

| + | == Example == | ||

| + | |||

| + | To show the performance of the implementation, 2-D uniform distributed training data is used. Following the implementation procedure described above, we get the following result: | ||

| + | |||

| + | <center>[[Image:Cbr_boundary.png]]</center> | ||

| + | |||

| + | In the plot, x and o represent two different classes. | ||

| + | |||

| + | Observations: | ||

| + | |||

| + | *initial decision boundary is defined by equation | ||

| + | |||

| + | <center><math>(0,0,1)(1,x_1,x_2)^T=0</math></center> | ||

| + | |||

| + | *The system converges fast, 4-th iteration is close enough to 50-th iteration. | ||

| + | |||

| + | The following graph shows the logistic curve of the algorithm. It represents <math>Pr(class=1|data)</math> | ||

| + | |||

| + | <center>[[Image:Cbr_logistic_curve.png]]</center> | ||

| + | |||

| + | ---- | ||

| + | |||

| + | == Summary == | ||

| + | |||

| + | Logistic regression is an effective method that produces a linear classifier from labeled training data. Given a training data set, it tries estimate parameters <math>\beta</math> in order to maximize the conditional log-likelihood function with a logistic probability model. then use Newton's method to find the best fit. | ||

| + | |||

| + | == Reference == | ||

| + | [1] Mireille Boutin, “ECE662: Statistical Pattern Recognition and Decision Making Processes,” Purdue University, Spring 2014<br> | ||

| + | [2] http://www.stat.cmu.edu/~cshalizi/uADA/12/lectures/ch12.pdf <br> | ||

| + | [3] Chong and Żak, An Introduction to Optimization, Fourth Edition, 2013 | ||

| + | |||

| + | ---- | ||

| + | ==[[slecture_CBR_logistic_regression_review|Questions and comments]]== | ||

| + | If you have any questions, comments, etc. please post them on [[slecture_CBR_logistic_regression_review|this page]]. | ||

Latest revision as of 09:56, 22 January 2015

Introduction to Logistic regression and its implementation

A slecture by ECE student Borui Chen

Partly based on the ECE662 Spring 2014 lecture material of Prof. Mireille Boutin.

Contents

Introduction

In the field of machine learning, one major topic is classification problem. Linear classifier is a class of algorithms that make the classification decision on a new test data point base on a linear combination of the features.

There are mainly two classes of linear classifier: Generative model and Discriminative model:

- The generative model measures the joint distribution of the data and class.

- Examples are Naive Bayes Classifier, Linear Discriminant Analysis.

- The discriminivative model makes no assumption on the joint distribution of the data. Instead, it takes the data as given and tries to maximize the conditional density (Prob(class|data)) directly.

- Examples are Logistic Regression, Perceptron and Support Vector Machine.

Intuition and derivation of Logistic Regression

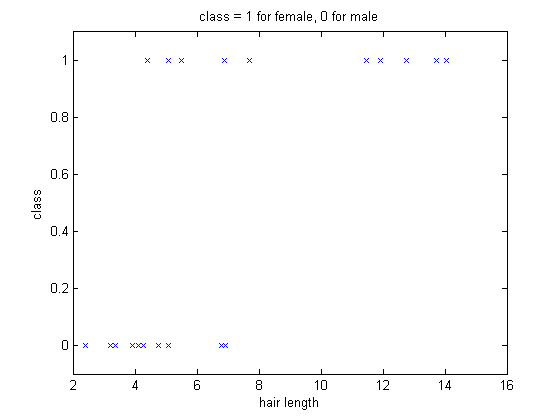

Consider a simple classification problem. The goal is to tell whether a person is male or female base on one feature: hair length. The data is given as $ (x_i,y_i) $ where i is the index number of the training set, and $ x_i $ is hair length in inches and $ y_i=1 $ indicates the person is male and 0 if female. Assume women has longer hair length the distribution of training data will look like this:

Clearly if a person's hair length is comparably long, being a female is more likely. If there is another person with hair length 10, we could say it's more likely to be a female. However, we don't have a description about how long is long enough to say a person is female. So we introduce the probability. We want to say that given a hair length is 10 inches, the probability of the person being a female is close to 1.

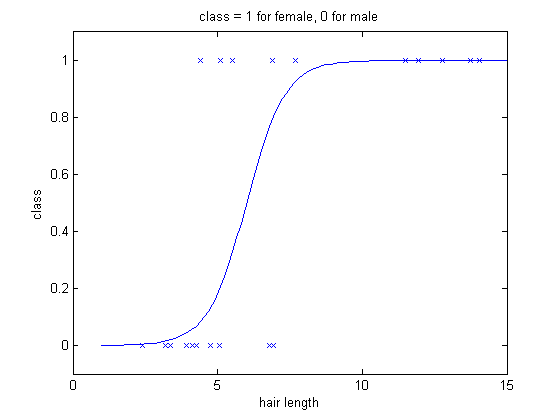

The intuition of logistic regression, in this example, is to assign a continuous probability to every possible value of hair length so that for longer hair length the probability of being a female is close to 1 and for shorter hair length the probability of being a female is close to 0. It is done by taking the linear combination of the feature and a constant and feeding it into a logistic function:

Then the logistic function of $ x_i $ would be:

After some fitting optimization algorithm, the curve looks like the following:

Having this curve, we could develop an decision rule:

More generally, the feature can be more than one dimension

Note:

- the decision boundary is linear:

- For 1-D it's a point, 2-D it's a line and etc.

- $ \beta^T x_i $ is the log of the odd ratio:

Having this setup, the goal is to find a $ \beta $ to let the curve fit the data optimally. To solve the problem, it comes about Maximum Likelihood Estimation and Newton's method.

Maximum Likelihood Estimation

For the logistic regression, we need to figure out a best fit of the curve to the training data. To do this, we choose $ \beta $ such that the likelihood of the joint distribution of the training data

is maximized.

From the likelihood function:

For simple notation, let

Take the log of the likelihood function:

where

and

Taking the derivative of the log likelihood with respect to $ \beta $:

If we set the above equation to zero, there is no close form solution, so we are going to use numerical optimization method to maximized the likelihood, namely, Newton's method.

Note

The goal is to maximize the above equation, however, in Newton's method it's easier to minimize the negative of the log likelihood function. In this case the derivatives becomes

Numerical optimization - Newton's method

To apply the Newton's method, we need a gradient function of log likelihood with respect to $ \beta $, together with a Hessian matrix. from the last part, we've already computed the derivative for each $ \beta_i $, now we need to write it in matrix form.

where d is the dimension of the feature vector, n is size of training data set.

The gradient can be written as:

for the Hessian matrix, consider taking the derivative of the log likelihood function with respect to $ \beta_j \text{ and }\beta_k $.

where $ z_j = (x_{1j},\cdots,x_{nj})^T, B = diag(\alpha_1(1-\alpha_1),\cdots,\alpha_n(1-\alpha_n)) $. Then, can write Hessian matrix:

With the equations of Gradient and Hessian matrix, we plug them into Newton's method iteration:

with some initialization $ \beta_0 $.

Example

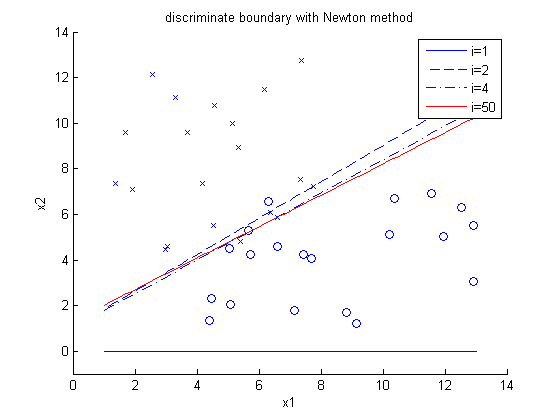

To show the performance of the implementation, 2-D uniform distributed training data is used. Following the implementation procedure described above, we get the following result:

In the plot, x and o represent two different classes.

Observations:

- initial decision boundary is defined by equation

- The system converges fast, 4-th iteration is close enough to 50-th iteration.

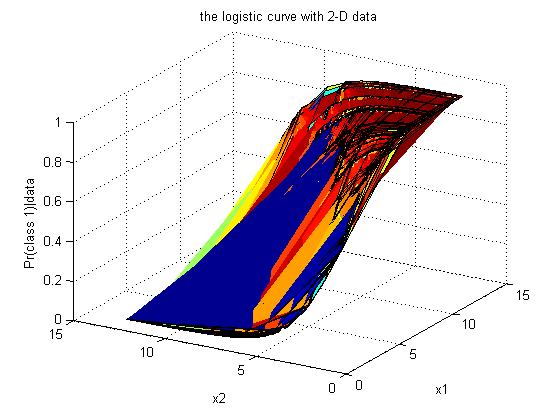

The following graph shows the logistic curve of the algorithm. It represents $ Pr(class=1|data) $

Summary

Logistic regression is an effective method that produces a linear classifier from labeled training data. Given a training data set, it tries estimate parameters $ \beta $ in order to maximize the conditional log-likelihood function with a logistic probability model. then use Newton's method to find the best fit.

Reference

[1] Mireille Boutin, “ECE662: Statistical Pattern Recognition and Decision Making Processes,” Purdue University, Spring 2014

[2] http://www.stat.cmu.edu/~cshalizi/uADA/12/lectures/ch12.pdf

[3] Chong and Żak, An Introduction to Optimization, Fourth Edition, 2013

Questions and comments

If you have any questions, comments, etc. please post them on this page.