Upper Bounds for Bayes Error

A slecture by G.M. Dilshan Godaliyadda

Partly based on the ECE662 Spring 2014 lecture material of Prof. Mireille Boutin.

Latest revision as of 09:45, 22 January 2015


Introduction

This lecture explains upper bounds for the Bayes error. To motivate the problem, we first review Bayes rule and how it is used to classify continuous-valued data. We then derive the probability of error that results from using Bayes rule.

When Bayes rule is used, the resulting probability of error is the smallest achievable by any classifier, which makes it an important quantity to know. However, computing this error is intractable in many cases, so an upper bound on the Bayes error is useful. The Chernoff bound is one such upper bound, and it is reasonably easy to compute in many cases. We present this bound and then discuss some results that can be obtained for particular types of distributions.

Bayes rule for classifying continuously valued data

Assume that the features $ x $ are continuous-valued, $ x \in \mathbb{R}^{N} $, and that each sample belongs to one of two classes, $ \omega_1 $ or $ \omega_2 $. Our objective is to classify the data into these two classes.

We classify $ x $ as belonging to $ \omega_1 $ if the probability of class $ 1 $ given the feature vector $ x $ is at least as large as the probability of class $ 2 $ given $ x $. That is, if

$$ Prob(\omega_1 | x) \geq Prob(\omega_2 | x), \tag{1} $$

then the feature vector $ x $ is assigned to class $ 1 $, and otherwise to class $ 2 $. However, in many cases computing the posterior $ Prob(\omega_i | x) $ directly is extremely difficult or impossible. We therefore use Bayes theorem to rewrite the rule.

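Bayes theorem states

$$ Prob(\omega_i | x) = \frac{\rho(x|\omega_i) Prob(\omega_i)}{\rho(x)}. \tag{2} $$

Here the terms are defined as follows:
- $ \rho(x|\omega_i) $ is the probability density of $ x $ given that it belongs to class $ i $.
- $ Prob(\omega_i) $, known as the prior probability, is the probability of class $ i $.
- $ \rho(x) $ is the probability density of $ x $.

Using equation (2), inequality (1) can be rewritten as

$$ \frac{\rho(x|\omega_1) Prob(\omega_1)}{\rho(x)} \geq \frac{\rho(x|\omega_2) Prob(\omega_2)}{\rho(x)}. $$

Since $ \rho(x) > 0 $ at any observed $ x $, it cancels from both sides, giving

$$ \rho(x|\omega_1) Prob(\omega_1) \geq \rho(x|\omega_2) Prob(\omega_2). \tag{3} $$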
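The decision rule in equation (3) can be sketched in Python as follows. For illustration only, this assumes two classes whose class-conditional densities are one-dimensional Gaussians; the function names and parameter values are hypothetical. Note that $ \rho(x) $ never has to be computed.

```python
import math

def gaussian_pdf(x, mean, var):
    """1-D normal density, standing in for rho(x | omega_i) in this example."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def bayes_classify(x, prior1, mean1, var1, mean2, var2):
    """Apply Eq. (3): return 1 if rho(x|w1)Prob(w1) >= rho(x|w2)Prob(w2), else 2.

    rho(x) is never evaluated because it cancels from both sides.
    Ties go to class 1, matching the >= in Eq. (3).
    """
    prior2 = 1.0 - prior1
    score1 = gaussian_pdf(x, mean1, var1) * prior1
    score2 = gaussian_pdf(x, mean2, var2) * prior2
    return 1 if score1 >= score2 else 2

# Hypothetical classes: omega_1 ~ N(0, 1), omega_2 ~ N(2, 1), equal priors.
labels = [bayes_classify(x, 0.5, 0.0, 1.0, 2.0, 1.0) for x in (-1.0, 0.9, 1.1, 3.0)]
```

With equal priors the boundary sits at $ x = 1 $. Raising $ Prob(\omega_1) $ to $ 0.9 $ moves it to $ x = 1 + \ln(9)/2 \approx 2.10 $, so a point such as $ x = 1.1 $ is then assigned to class $ 1 $.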

Probability of Error when using Bayes rule

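When using a certain method for classifying data, it is important to know the probability of making an error, because the ultimate goal of classification is to minimize this error. When we use Bayes rule to classify data, the probability of error is

$$ Prob \left[ Error \right] = \int_{\mathbb{R}^N} \rho \left( Error, x \right) \, \mathrm{d}x. \tag{4} $$

Using the definition of conditional probability, we can rewrite this equation as

$$ Prob \left[ Error \right] = \int_{\mathbb{R}^N} Prob \left( Error \vert x \right) \rho \left( x \right) \, \mathrm{d}x. \tag{5} $$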

Now let us look at an example to understand how this integral can be written in terms of $ Prob \left( \omega_1 \vert x\right) $ and $ Prob \left( \omega_2 \vert x\right) $.

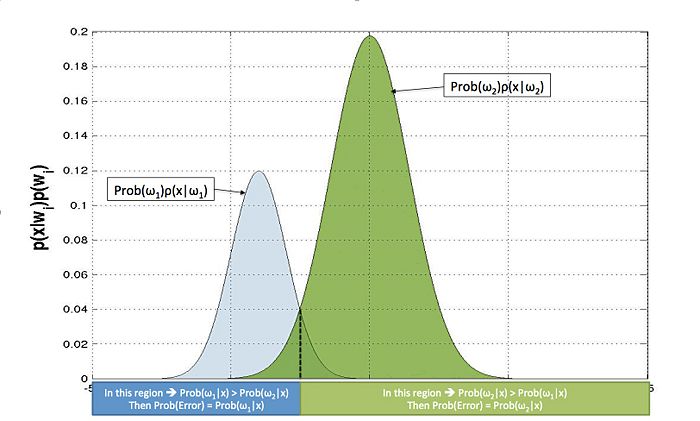

From equation (2), $ Prob \left( \omega_i \vert x\right) \propto \rho(x|\omega_i) Prob(\omega_i) $, and the proportionality constant $ 1/\rho(x) $ is the same for both classes. So at every $ x $, the two products $ \rho(x|\omega_i) Prob(\omega_i) $ compare in the same way as the two posteriors $ Prob \left( \omega_i \vert x\right) $. We therefore plot $ \rho(x|\omega_1) Prob(\omega_1) $ and $ \rho(x|\omega_2) Prob(\omega_2) $ together, as shown in Figure 1.

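From this we can see that errors occur in the region where the two curves overlap. Consider a point $ x $ in that region with $ \rho(x|\omega_1) Prob(\omega_1) \geq \rho(x|\omega_2) Prob(\omega_2) $. Bayes rule classifies it as class $ 1 $, but it may in fact belong to class $ 2 $, which happens with probability $ Prob \left( \omega_2 \vert x \right) $. In general, the conditional probability of error at $ x $ is the smaller of the two posteriors:

$$ Prob \left( Error \vert x \right) = \min \left( Prob \left( \omega_1 \vert x \right), Prob \left( \omega_2 \vert x \right) \right). $$

Substituting this into equation (5), the probability of error can be written as

$$ Prob \left[ Error \right] = \int_{\mathbb{R}^N} \min \left( Prob \left( \omega_1 \vert x \right), Prob \left( \omega_2 \vert x \right) \right) \rho \left( x \right) \, \mathrm{d}x. \tag{6} $$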

The example above is the simple case in which the curves cross only once. In general they can cross any number of times, dividing $ \mathbb{R}^N $ into several decision regions. The probability of error is then the sum, over these regions, of the integrals of the smaller of the two terms $ Prob \left( \omega_i \vert x \right) \rho(x) $. This sum is again given by equation (6).

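By Bayes theorem, $ Prob \left( \omega_i \vert x \right) \rho(x) = \rho \left( x \vert \omega_i \right) Prob \left( \omega_i \right) $, so this simplifies further to

$$ Prob \left[ Error \right] = \int_{\mathbb{R}^N} \min \left( \rho \left( x \vert \omega_1 \right) Prob \left( \omega_1 \right), \rho \left( x \vert \omega_2 \right) Prob \left( \omega_2 \right) \right) \, \mathrm{d}x. \tag{7} $$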
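In one dimension, equation (7) can be evaluated directly by numerical quadrature. The sketch below does this under two illustrative choices: hypothetical 1-D Gaussian classes, and a composite Simpson rule on the truncated interval $ [-20, 20] $. The same grid approach in $ N $ dimensions would need on the order of $ m^N $ evaluations for $ m $ points per axis, which is what makes the integral impractical in high dimensions.

```python
import math

def gaussian_pdf(x, mean, var):
    """1-D normal density used as a hypothetical rho(x | omega_i)."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def simpson(f, lo, hi, n=20000):
    """Composite Simpson's rule on [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(lo + k * h)
    return total * h / 3.0

def bayes_error_1d(prior1, mean1, var1, mean2, var2, lo=-20.0, hi=20.0):
    """Eq. (7): integrate min(rho(x|w1)Prob(w1), rho(x|w2)Prob(w2)) over [lo, hi]."""
    prior2 = 1.0 - prior1

    def integrand(x):
        return min(gaussian_pdf(x, mean1, var1) * prior1,
                   gaussian_pdf(x, mean2, var2) * prior2)

    return simpson(integrand, lo, hi)
```

For equal priors and the classes $ N(0,1) $ and $ N(2,1) $, the result should match the closed-form value $ \Phi(-1) = \tfrac{1}{2}\operatorname{erfc}(1/\sqrt{2}) \approx 0.1587 $. For two identical classes it should be $ 0.5 $.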

In most cases, evaluating either integral is intractable, because it is an integral over $ \mathbb{R}^N $, which may be high-dimensional. An approximation is therefore needed.

Our goal is always the smallest possible probability of error. However, since the Bayes error is so hard to compute in many cases, we look instead for an upper bound on it.

For example, suppose we want the probability of error to be lower than $ 0.05 $. If we find an upper bound on the Bayes error that is less than $ 0.05 $, then we know the Bayes error is also less than $ 0.05 $. In the next section we explore a single-parameter family of upper bounds on the Bayes error, the Chernoff bound.

Chernoff Bound

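To formulate the Chernoff bound we use the following property: if $ a,b \in \mathbb{R}_{\geq 0} $ and $ 0 \leq \beta \leq 1 $, then

$$ \min \left\lbrace a,b \right\rbrace \leq a^{\beta} b^{1-\beta}. \tag{8} $$

To see this, suppose without loss of generality that $ a \leq b $. Then $ \min \left\lbrace a,b \right\rbrace = a = a^{\beta} a^{1-\beta} \leq a^{\beta} b^{1-\beta} $, because $ 1-\beta \geq 0 $.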
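As a quick sanity check of inequality (8), the Python below tests it over a small grid of arbitrary non-negative values and several $ \beta $. A relative slack of $ 10^{-12} $ absorbs floating-point rounding. The grid values are hypothetical and chosen only for illustration.

```python
import itertools

def geometric_mix(a, b, beta):
    """Right-hand side of inequality (8): a**beta * b**(1 - beta)."""
    return a ** beta * b ** (1.0 - beta)

values = [0.0, 0.01, 0.3, 1.0, 2.5, 10.0]   # arbitrary non-negative test values
betas = [0.0, 0.25, 0.5, 0.75, 1.0]

# Collect any (a, b, beta) that would violate min(a, b) <= a**beta * b**(1 - beta).
violations = [
    (a, b, beta)
    for a, b, beta in itertools.product(values, values, betas)
    if min(a, b) > geometric_mix(a, b, beta) * (1.0 + 1e-12)
]
```

The bound is tight when $ a = b $ and loose when $ a $ and $ b $ differ. For example, with $ a = 0.01 $, $ b = 1 $ and $ \beta = \frac{1}{2} $, the right-hand side is $ 0.1 $, ten times the minimum.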

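The quantities inside the minimum in equations (6) and (7) are non-negative. Applying inequality (8) to the integrand therefore gives the following upper bounds on the probability of error:

$$ Prob \left[ Error \right] \leq \int_{\mathbb{R}^N} \left[ Prob \left( \omega_1 \vert x \right) \right]^{\beta} \left[ Prob \left( \omega_2 \vert x \right) \right]^{1-\beta} \rho \left( x \right) \, \mathrm{d}x, \tag{9} $$

$$ Prob \left[ Error \right] \leq \int_{\mathbb{R}^N} \left[ \rho \left( x \vert \omega_1 \right) Prob \left( \omega_1 \right) \right]^{\beta} \left[ \rho \left( x \vert \omega_2 \right) Prob \left( \omega_2 \right) \right]^{1-\beta} \, \mathrm{d}x. \tag{10} $$

The right-hand sides of (9) and (10) are equal, since $ Prob \left( \omega_i \vert x \right) \rho(x) = \rho \left( x \vert \omega_i \right) Prob \left( \omega_i \right) $.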

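This family of upper bounds, parameterized by $ \beta $, is known as the Chernoff bound and is denoted $ \varepsilon_{\beta} $.

Figure 2: The Chernoff bound $ \varepsilon_{\beta} $ as a function of $ \beta $. Knowing the Chernoff bound is sometimes enough to decide whether a classification method can attain a desired Prob[error]. If the curve $ \varepsilon_{\beta} $ dips below the desired Prob[error] for some values of $ \beta $, then the method can attain the required accuracy without our having to calculate the actual (Bayes) Prob[error].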
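To see the family of bounds numerically, the sketch below evaluates equation (10) by quadrature for hypothetical 1-D Gaussian classes. It compares the result with the Bayes error from equation (7). The Simpson rule and the truncation to $ [-20, 20] $ are illustrative choices, not part of the method.

```python
import math

def gaussian_pdf(x, mean, var):
    """1-D normal density used as a hypothetical rho(x | omega_i)."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def simpson(f, lo, hi, n=4000):
    """Composite Simpson's rule on [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(lo + k * h)
    return total * h / 3.0

def chernoff_bound_1d(beta, prior1, mean1, var1, mean2, var2, lo=-20.0, hi=20.0):
    """Eq. (10): integral of [rho(x|w1)Prob(w1)]^beta [rho(x|w2)Prob(w2)]^(1-beta)."""
    prior2 = 1.0 - prior1
    return simpson(lambda x: (gaussian_pdf(x, mean1, var1) * prior1) ** beta
                   * (gaussian_pdf(x, mean2, var2) * prior2) ** (1.0 - beta), lo, hi)

def bayes_error_1d(prior1, mean1, var1, mean2, var2, lo=-20.0, hi=20.0):
    """Eq. (7), for comparison with the bound."""
    prior2 = 1.0 - prior1
    return simpson(lambda x: min(gaussian_pdf(x, mean1, var1) * prior1,
                                 gaussian_pdf(x, mean2, var2) * prior2), lo, hi)
```

Every $ \varepsilon_{\beta} $ should lie above the Bayes error. At $ \beta = 0 $ and $ \beta = 1 $ the bound reduces to the trivial values $ Prob(\omega_2) $ and $ Prob(\omega_1) $.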

If needed, the smallest bound in the family can be found by solving

$$ \varepsilon_{min} = \min_{\beta \in [0,1]} \varepsilon_{\beta}. \tag{11} $$

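Solving (11) is not always easy in practice. In general, $ \varepsilon_{\beta} $ has no closed form, so each evaluation requires an integral over $ \mathbb{R}^N $. The one-dimensional optimization itself is well behaved: by Hölder's inequality, $ \ln \varepsilon_{\beta} $ is convex in $ \beta $, so any local minimum on $ [0,1] $ is a global one. For the purpose of evaluating a classification method, one can therefore try several values of $ \beta $ and compare the smallest resulting $ \varepsilon_{\beta} $ with the desired error probability.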
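Trying several values of $ \beta $, as suggested above, can be sketched as a plain grid search. The sketch computes $ \varepsilon_{\beta} $ by quadrature for hypothetical 1-D Gaussian classes and returns the smallest value on the grid. That value approximates $ \varepsilon_{min} $ of equation (11) to the grid resolution. The grid size and quadrature settings are assumptions made for illustration.

```python
import math

def gaussian_pdf(x, mean, var):
    """1-D normal density used as a hypothetical rho(x | omega_i)."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def simpson(f, lo, hi, n=1000):
    """Composite Simpson's rule on [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(lo + k * h)
    return total * h / 3.0

def chernoff_bound_1d(beta, prior1, mean1, var1, mean2, var2, lo=-20.0, hi=20.0):
    """Eq. (10) evaluated numerically on [lo, hi]."""
    prior2 = 1.0 - prior1
    return simpson(lambda x: (gaussian_pdf(x, mean1, var1) * prior1) ** beta
                   * (gaussian_pdf(x, mean2, var2) * prior2) ** (1.0 - beta), lo, hi)

def smallest_bound_on_grid(prior1, mean1, var1, mean2, var2, steps=100):
    """Try beta = 0, 1/steps, ..., 1 and return the (beta, eps_beta) pair with the smallest bound."""
    candidates = ((k / steps, chernoff_bound_1d(k / steps, prior1, mean1, var1, mean2, var2))
                  for k in range(steps + 1))
    return min(candidates, key=lambda pair: pair[1])
```

Consider the $ 0.05 $ target from the earlier example, with equal priors and classes $ N(0,1) $ and $ N(6,1) $. The smallest bound found this way is about $ 0.0056 $, so the Bayes error is certainly below $ 0.05 $ even though we never computed it. For $ N(0,1) $ versus $ N(2,1) $ the best bound is about $ 0.30 $, and the test is inconclusive.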

In the special case where $ \beta = \frac{1}{2} $, the Chernoff bound, $ \varepsilon_{\frac{1}{2}} $, is known as the Bhattacharyya bound.

Chernoff bound for Normally distributed data

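When the class-conditional densities $ \rho (x\vert \omega_i) $ are normal with mean $ \mu_i $ and covariance $ \Sigma_i $, the Chernoff bound has the following closed form:

$$ \varepsilon_{\beta} = Prob(\omega_1)^{\beta} Prob(\omega_2)^{1-\beta} e^{-f(\beta)}, \tag{12} $$

where

$$ f(\beta) = \frac{\beta(1-\beta)}{2} (\mu_2 - \mu_1)^t \left[ (1-\beta) \Sigma_1 + \beta \Sigma_2 \right]^{-1}(\mu_2 - \mu_1)+ \frac{1}{2} \ln \left( \frac{\vert (1-\beta) \Sigma_1 + \beta \Sigma_2 \vert}{\vert \Sigma_1 \vert^{1-\beta} \vert \Sigma_2 \vert^{\beta}} \right). $$

Completing the square in $ \rho(x|\omega_1)^{\beta} \rho(x|\omega_2)^{1-\beta} $ gives a Gaussian integral with precision matrix $ \beta \Sigma_1^{-1} + (1-\beta) \Sigma_2^{-1} $. This is why, with the exponent $ \beta $ on the class-1 term as in equation (10), $ \Sigma_1 $ is weighted by $ 1-\beta $ and $ \Sigma_2 $ by $ \beta $ inside $ f(\beta) $.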
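Here is a dependency-free sketch of equation (12). To keep it short, it assumes diagonal covariance matrices, so each determinant is a product and each inverse is elementwise; a full-covariance version would need a linear-algebra library. Means and variances are passed as equal-length lists, and the function names are hypothetical. The sketch weights $ \Sigma_1 $ by $ 1-\beta $ and $ \Sigma_2 $ by $ \beta $, which is what completing the square gives when the exponent $ \beta $ is on the class-1 density as in equation (10).

```python
import math

def chernoff_exponent_diag(beta, mean1, var1, mean2, var2):
    """f(beta) for Gaussians with diagonal covariances given as lists of variances."""
    quad = 0.0           # (mu2 - mu1)^t [(1-beta)Sigma1 + beta Sigma2]^{-1} (mu2 - mu1)
    log_det_ratio = 0.0  # ln |(1-beta)Sigma1 + beta Sigma2| - (1-beta) ln|Sigma1| - beta ln|Sigma2|
    for m1, v1, m2, v2 in zip(mean1, var1, mean2, var2):
        mixed = (1.0 - beta) * v1 + beta * v2
        quad += (m2 - m1) ** 2 / mixed
        log_det_ratio += math.log(mixed) - (1.0 - beta) * math.log(v1) - beta * math.log(v2)
    return 0.5 * beta * (1.0 - beta) * quad + 0.5 * log_det_ratio

def chernoff_bound_gaussian_diag(beta, prior1, mean1, var1, mean2, var2):
    """Eq. (12): Prob(w1)^beta Prob(w2)^(1-beta) exp(-f(beta))."""
    prior2 = 1.0 - prior1
    return (prior1 ** beta * prior2 ** (1.0 - beta)
            * math.exp(-chernoff_exponent_diag(beta, mean1, var1, mean2, var2)))
```

A quick check in one dimension: with $ \mu_1 = \mu_2 $, $ \sigma_1^2 = 1 $, $ \sigma_2^2 = 4 $ and $ \beta = \frac{1}{4} $, direct Gaussian integration of $ \rho(x|\omega_1)^{1/4} \rho(x|\omega_2)^{3/4} $ gives $ 2^{5/4}/\sqrt{7} \approx 0.899 $, and $ e^{-f(1/4)} $ should reproduce it.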

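In this case the Bhattacharyya bound becomes

$$ \varepsilon_{\frac{1}{2}} = \sqrt{Prob(\omega_1) Prob(\omega_2)} \, e^{-f \left(\frac{1}{2}\right)}, \tag{13} $$

where

$$ f\left(\frac{1}{2}\right) = \frac{1}{8} (\mu_2 - \mu_1)^t \left[ \frac{\Sigma_1 + \Sigma_2 }{2}\right]^{-1}(\mu_2 - \mu_1)+ \frac{1}{2} \ln \left( \frac{\left\vert \frac{\Sigma_1 + \Sigma_2 }{2} \right\vert}{\vert \Sigma_1 \vert^{\frac{1}{2}} \vert \Sigma_2 \vert^{\frac{1}{2}}} \right). $$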

Notice that if $ \mu_2=\mu_1 $, the first term of $ f \left(\frac{1}{2}\right) $ vanishes. This term describes the separability due to the "distance" between the two means, where the distance is measured in the metric defined by the matrix $ \left[ \frac{\Sigma_1 + \Sigma_2 }{2}\right]^{-1} $.
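The two terms of $ f(\frac{1}{2}) $ can be computed separately to see how much of the Bhattacharyya bound comes from the separation of the means and how much from the mismatch of the covariances. This is again a sketch that assumes diagonal covariances, with hypothetical function names. It uses the negative exponent, $ \varepsilon_{\frac{1}{2}} = \sqrt{Prob(\omega_1) Prob(\omega_2)} \, e^{-f(1/2)} $.

```python
import math

def bhattacharyya_terms_diag(mean1, var1, mean2, var2):
    """Return (mean_term, cov_term) of f(1/2) for diagonal covariances given as lists."""
    mean_term = 0.0
    cov_term = 0.0
    for m1, v1, m2, v2 in zip(mean1, var1, mean2, var2):
        avg = 0.5 * (v1 + v2)  # diagonal entry of (Sigma1 + Sigma2) / 2
        mean_term += (m2 - m1) ** 2 / avg
        cov_term += math.log(avg) - 0.5 * (math.log(v1) + math.log(v2))
    return mean_term / 8.0, cov_term / 2.0

def bhattacharyya_bound_diag(prior1, mean1, var1, mean2, var2):
    """Eq. (13) with f(1/2) = mean_term + cov_term."""
    mean_term, cov_term = bhattacharyya_terms_diag(mean1, var1, mean2, var2)
    return math.sqrt(prior1 * (1.0 - prior1)) * math.exp(-(mean_term + cov_term))
```

The covariance term is never negative, because the arithmetic mean of two variances is at least their geometric mean. It is zero exactly when the variances match.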

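The second term vanishes if the covariance matrices are equal, i.e. $ \Sigma_1 = \Sigma_2 $. If, in addition, the priors are equal, $ Prob(\omega_1) = Prob(\omega_2) $, it can be shown that $ \varepsilon_{\frac{1}{2}} = \varepsilon_{min} $.

Proof: Let $ \Sigma_1 = \Sigma_2 = \Sigma $ and $ Prob(\omega_1) = Prob(\omega_2) = \frac{1}{2} $. Equation (12) then becomes $ \varepsilon_{\beta} = \frac{1}{2} e^{-f(\beta)} $, so minimizing $ \varepsilon_{\beta} $ is equivalent to maximizing

$$ f(\beta) = \frac{\beta(1-\beta)}{2} (\mu_2 - \mu_1)^t \Sigma^{-1}(\mu_2 - \mu_1). $$

If $ \mu_1 = \mu_2 $, then $ f(\beta) = 0 $ and $ \varepsilon_{\beta} = \frac{1}{2} $ is constant in $ \beta $. Hence $ \varepsilon_{min} = \varepsilon_{\frac{1}{2}} $.

If $ \mu_1 \neq \mu_2 $, then $ (\mu_2 - \mu_1)^t \Sigma^{-1}(\mu_2 - \mu_1) > 0 $, and $ f(\beta) $ is a concave parabola in $ \beta $. It therefore has a single maximum, which is the global maximum. Setting the derivative to zero,

$$ \frac{\partial}{\partial \beta} f(\beta) = \frac{1-2\beta}{2}(\mu_2 - \mu_1)^t \Sigma^{-1}(\mu_2 - \mu_1) = 0, $$

gives $ \beta = \frac{1}{2} $, and therefore $ \varepsilon_{min} = \varepsilon_{\frac{1}{2}} $.

Without equal priors, the factor $ Prob(\omega_1)^{\beta} Prob(\omega_2)^{1-\beta} $ in equation (12) also depends on $ \beta $, and the minimizer generally moves away from $ \beta = \frac{1}{2} $.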
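The argument above relies on the prior factor $ Prob(\omega_1)^{\beta} Prob(\omega_2)^{1-\beta} $ being constant in $ \beta $, which holds when the priors are equal. The sketch below checks this numerically, using the symbol $ c = (\mu_2-\mu_1)^t \Sigma^{-1} (\mu_2-\mu_1) $, introduced here for brevity. It evaluates $ \ln \varepsilon_{\beta} $ from equation (12) with $ \Sigma_1 = \Sigma_2 $ and locates the minimizing $ \beta $ on a grid. With equal priors the minimizer is $ \beta = \frac{1}{2} $. With unequal priors, setting the derivative of $ \ln \varepsilon_{\beta} $ to zero gives $ \beta = \frac{1}{2} - \ln\left(\frac{Prob(\omega_1)}{Prob(\omega_2)}\right)/c $, clipped to $ [0,1] $, and the grid search should agree.

```python
import math

def log_chernoff_equal_cov(beta, prior1, c):
    """ln(eps_beta) from Eq. (12) when Sigma1 = Sigma2 (the log-determinant term is zero).

    c is the squared Mahalanobis distance (mu2 - mu1)^t Sigma^{-1} (mu2 - mu1).
    """
    prior2 = 1.0 - prior1
    return (beta * math.log(prior1) + (1.0 - beta) * math.log(prior2)
            - 0.5 * beta * (1.0 - beta) * c)

def argmin_beta_on_grid(func, steps=10000):
    """Return the grid point k/steps in [0, 1] with the smallest func value."""
    return min((k / steps for k in range(steps + 1)), key=func)

def predicted_argmin(prior1, c):
    """Stationary point of ln(eps_beta), clipped to [0, 1]; assumes c > 0."""
    beta = 0.5 - math.log(prior1 / (1.0 - prior1)) / c
    return min(1.0, max(0.0, beta))

c = 4.0  # hypothetical: one dimension, Sigma = 1, |mu2 - mu1| = 2
beta_equal_priors = argmin_beta_on_grid(lambda b: log_chernoff_equal_cov(b, 0.5, c))
beta_unequal_priors = argmin_beta_on_grid(lambda b: log_chernoff_equal_cov(b, 0.8, c))
```

With $ Prob(\omega_1) = 0.8 $ the minimizer moves to about $ \beta \approx 0.153 $. In that case $ \varepsilon_{\frac{1}{2}} $ is still a valid bound, but not the tightest in the family.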

Summary and Conclusions

In this lecture we have shown that the probability of error, $ Prob \left[ Error \right] $, when using Bayes rule is bounded above by the Chernoff bound:

$$ Prob \left[ Error \right] \leq \varepsilon_{\beta} \quad \text{for all } \beta \in \left[ 0, 1 \right]. $$

When $ \beta =\frac{1}{2} $, $ \varepsilon_{\frac{1}{2}} $ is known as the Bhattacharyya bound.

References

[1]. Duda, Richard O., Hart, Peter E., and Stork, David G., "Pattern Classification (2nd Edition)," Wiley-Interscience, 2000.

[2]. Mireille Boutin, "ECE662: Statistical Pattern Recognition and Decision Making Processes," Purdue University, Spring 2014.
