This page describes the steepest descent algorithm one which is used to compute the minimum error, in training a neural network. It essentially searches for the best path, which is the one that leads it to the point of minimum error.

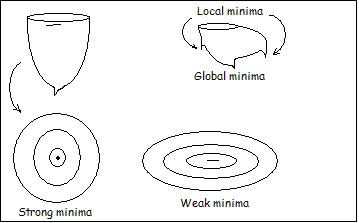

The concept involves finding out the path of minimum energy. One can visualize this as, when a ball is "simply" dropped on an arbitrary surface, assuming that the point at which it is dropped is not tightly bound on both sides, then it tries to roll on the path which requires the minimum expense of energy. In rolling, it comes to a stop at points that are known as the "minima". Let us examine the different types of minima that a surface can have:

Strong minima Weak minima Local minima Global minima

The diagram below depicts all of these types. We term a minima as being weak, if it is not unique in that, inspite of it being the lowest point on the surface, it is not alone. That is, it shares this minima status with some other points, and this is shown below in the contour plot by the small line.

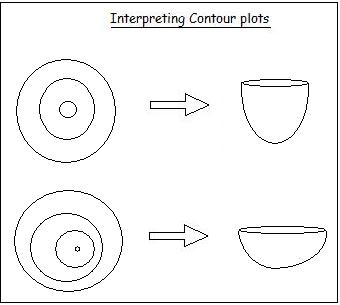

So, our aim would be to move from a given random point on the surface towards the global minimum. The contour plots are often useful in this endeavor. They first tell us as to if the surface is uniformly curved or otherwise, as shown in the figure below.

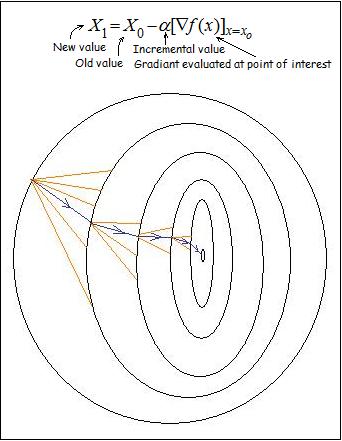

Now let us understand the working of the steepest descent algorithm. Consider the equation that is shown below. The idea is to start with an arbitrary point xo, and then find out the new value x1, subject to the minimization of error at every step. Thus, the path chosen at every point, is the one that yields minimum expense of energy, and this process is continued until the directional derivative becomes zero, which means that we have reached a minimum.