Lecture 15, ECE662: Decision Theory

Lecture notes for ECE662 Spring 2008, Prof. Boutin.

Other lectures: 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28,

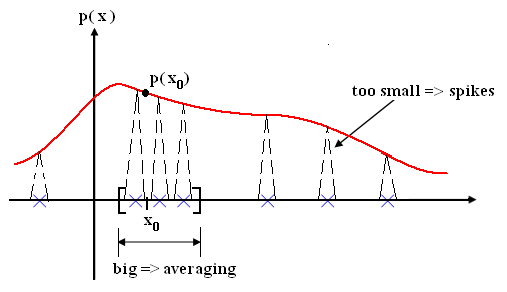

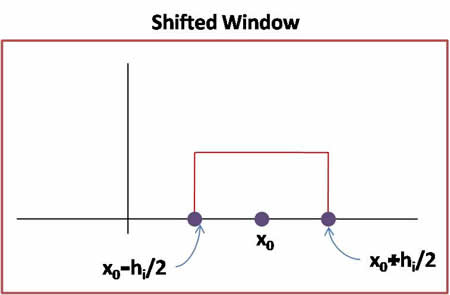

Figure 1

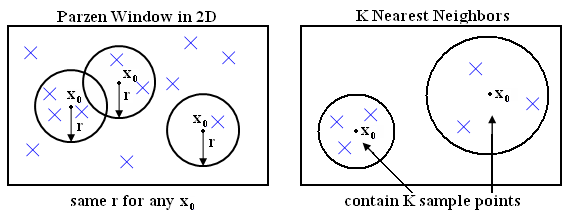

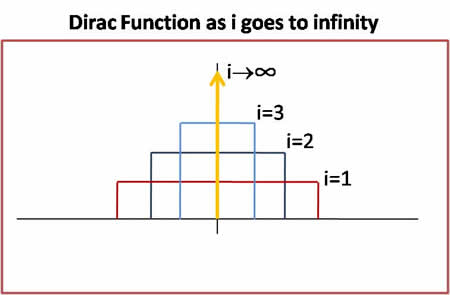

Figure 2

Parzen Window Method

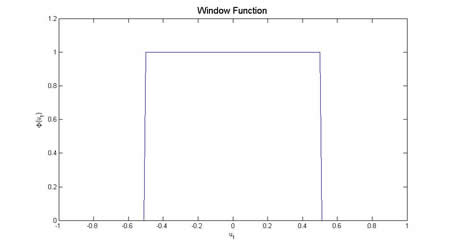

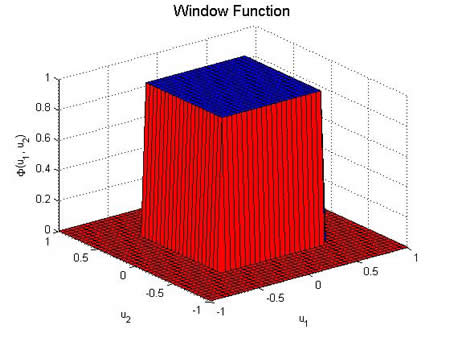

- Step 1:** Choose "shape" of your window by introducing a "window function"

e.g. if $ R_i $ is hybercube in $ \mathbb{R}^n $ with side-length $ h_i $, then the window function is $ \varphi $.

$ \varphi(\vec{u})=\varphi(u_1, u_2, \ldots, u_n)=1 $ if $ |u_i|<\frac{1}{2}, \forall i $ otherwise 0.

Examples of Parzen windows

Given the shape for parzen window by $ \varphi $, we can scale and shift it as required by the method.

$ \varphi(\frac{\vec{x}-\vec{x_0}}{h_i}) $ is window centered at $ \vec{x_0} $ scaled by a factor $ h_i $, i.e. its side-length is $ h_i $.

- Step 2:** Write the density estimate of $ p(\vec{x}) $ at $ \vec{x_0} \in R_i $ using window function, denoted by $ p_i(\vec{x_0}) $.

We have number of samples for $ \{\vec{x_1}, \vec{x_2}, \ldots, \vec{x_i}\} $ inside $ R_i $ denoted by $ K_i $

$ \sum_{l=1}^{i}\varphi(\frac{\vec{x_l}-\vec{x_0}}{h_i}) $

So, $ p_i(\vec{x_0})=\frac{k_i}{iV_i}=\frac{1}{iV_i}\sum_{l=1}^{i}\varphi(\frac{\vec{x_l}-\vec{x_0}}{h_i}) $

Let $ \delta_i(\vec{u})=\frac{1}{V_i}\varphi(\frac{\vec{u}}{h_i}) $

$ p_i(\vec{x_0})=\frac{1}{i}\sum_{l=1}^{i}\delta_i(\vec{x_l}-\vec{x_0}) $

This last equation is an average over impulses. For any l, $ \lim_{h_i->0}\delta(\vec{x_l}-\vec{x_0}) $ is [Dirac delta Function]. We do not want to average over dirac delta functions. Our objective is that $ p_i(\vec{x_0}) $ should converge to true value $ p(\vec{x}) $, as $ i\rightarrow \infty $

- What does convergence mean here?**

Observe $ \{p_i(\vec{x_0})\} $ is a sequence of random variables since $ p_i(\vec{x_0}) $ depends on random variables |sample_space_i|. What do we mean by convergence of a sequence of random variables (There are many definitions). We pick "Convergence in mean square" sense, i.e.

If $ \lim_{i\rightarrow \infty}E\{p_i(\vec{x_0})\}=p(\vec{x_0}) $

and $ \lim_{i\rightarrow \infty}Var\{p_i(\vec{x_0})\}=0 $

then we say $ p_i(\vec{x_0}) \longrightarrow p(\vec{x_0}) $ in mean square as $ i\rightarrow \infty $

- First condition:**

From the previous result, |jinha_pix0|

$ \displaystyle p_i (x_0) = \frac{1}{i} \sum_{l=1}^{i} \delta_i (\vec{x}_l - \vec{x}_0) $

We don't need an infinity number of samples to make $ E(p_i(\vec{x_o})) $ converge to $ p(\vec{x_o}) $ as $ i\to\infty $.

We just need $ h_i \to\infty $ (iie. $ V_i\to\infty $)

- To make it sure** |jinha_varpix0|, what should we do?

$ Var(p_i(x_0)) \rightarrow 0 $

$ \displaystyle Var(p_i(x_0)) = Var(\sum_{l=1}^{i} \frac{1}{i} \delta_i(\vec{x}_l - \vec{x}_0)) = \sum_{l=1}^{i} Var(\frac{1}{i} \delta_i(\vec{x}_l - \vec{x}_0)) $

$ \displaystyle = \sum_{l=1}^{i} E \left[ \left( \frac{\delta_i(\vec{x}_l - \vec{x}_0)}{i} - E\left[ \frac{\delta_i(\vec{x}_l - \vec{x}_0)}{i} \right] \right)^2 \right] = \sum_{l=1}^{i} E \left[ \left( \frac{\delta_i(\vec{x}_l - \vec{x}_0)}{i} \right)^2 \right] - \left( E\left[ \frac{\delta_i(\vec{x}_l - \vec{x}_0)}{i} \right] \right)^2 $

We know that second term is non-negative, therefore we can write

$ \displaystyle Var(p_i(x_0)) \le \sum_{l=1}^{i} E \left[ \left( \frac{\delta_i(\vec{x}_l - \vec{x}_0)}{i} \right)^2 \right] $

|jinha_varpix0_4|

$ \displaystyle \rightarrow Var(p_i(x_0)) \le \sum_{l=1}^{i} \int \left( \frac{\delta_i(\vec{x}_l - \vec{x}_0)}{i} \right)^2 p(x_l) dx_l $

$ \displaystyle \rightarrow Var(p_i(x_0)) \le \sum_{l=1}^{i} \frac{1}{i^2} \int \frac{\psi \left( \frac{\delta_i(\vec{x}_l - \vec{x}_0)}{i}\right)}{V_i} \frac{\psi \left( \frac{\delta_i(\vec{x}_l - \vec{x}_0)}{i}\right)}{V_i} p(x_l) dx_l $

$ \displaystyle \rightarrow Var(p_i(x_0)) \le \frac{1}{i V_i} sup\psi \int \sum_{l=1}^{i} \delta_i (x_l - x_0) p(x_l) dx_l $

$ \displaystyle \therefore Var(p_i(x_0)) \le \frac{1}{i V_i} sup\psi E [p_i(x_0)] $

If fixed i=d, then as $ v_i $ increased, $ var(P_i (\vec{x_0})) $ decreased.

But, if $ i V_i \rightarrow \infty $ , as $ i \rightarrow \infty $

(for example, if $ v_i= \frac{1}{\sqrt i}, v_i=\frac{13}{\sqrt i} or \frac{17}{\sqrt i} $)

then, $ var(P_i (\vec{x_0})) \rightarrow 0, as i \rightarrow \infty $

Here are some useful links to "Parzen-window Density Estimation"

http://www.cs.utah.edu/~suyash/Dissertation_html/node11.html

http://en.wikipedia.org/wiki/Parzen_window

http://www.personal.rdg.ac.uk/~sis01xh/teaching/CY2D2/Pattern2.pdf

http://www.eee.metu.edu.tr/~alatan/Courses/Demo/AppletParzen.html