Applications of Convolution: Image Blurring

The convolution forms the backbone of signal processing, but what are some direct applications of it? In this page, we will explore the application of the convolution operation in image blurring.

Convolution

In continuous time, a convolution is defined by the following integral:

$ (f*g)(t) = \int_{-\infty}^{\infty}f(t-\tau)g(\tau)d\tau $

In discrete time, a convolution is defined by the following summation:

$ [f*g][n] = \sum_{n = -\infty}^{\infty}f[n-\tau]g[\tau] $

A convolution takes one function and applies it repeatedly over the range of another through multiplication. As such, at a high level, the convolution can be thought of as having one function "smoothed" into another, or having the two functions "blended". This simple intuition offers insights into its uses in image blurring.

Image Blurring

The core purpose of blurring is to reduce the image's clarity and detail, but still maintain its content. For the most part, we still want to be able to generally tell what's inside the image. Every pixel in the image must be altered in a way such that it is noticeably different from before, but not completely garbled to the point it renders the image's content indiscernible. How can this be accomplished? One method would be to edit each pixel based on the pixels surrounding it. Intuitively, that would alter the pixel while still maintaining some information about its place in the picture, and therefore not completely garble the image. This is where convolution comes in. As stated previously, the convolution can be thought of as "blending" two function. Therefore, by convolving a pixel with the pixels surrounding it, we can set every pixel equal to a "smooth mix" of pixel information surrounding it.

Box Blur

The box blur is a simple blur in which every pixel is set equal to the average of all the pixels surrounding it. It can be expressed as a discrete convolution of two functions f[n] and g[n], where f[n] represents the discrete pixel values in the image, and g[n] is our kernel, which is a matrix represented as

$ \frac{1}{9}\begin{bmatrix} 1 & 1 & 1\\ 1 & 1 & 1\\ 1 & 1 & 1 \end{bmatrix} $

for a 3x3 kernel. The matrix is divided by the sum of all the elements (9 in this example) to normalize it. Defining h[n] as a function of blurred pixel values, "p" as the number of pixels in the image, "N" as the sum of the kernel values, and "d" as the kernel dimension, the box blur can be expressed as the following discrete convolution for a 3x3 kernel:

$ h[n] = [f*g][n] = \sum_{n=0}^{p} \frac{f[n]g[n-\tau]}{N} = \sum_{n=0}^{p} \frac{\sum_{i=0}^{d}\sum_{j=0}^{d}f_{i,j}g_{i,j}}{N} $

Application

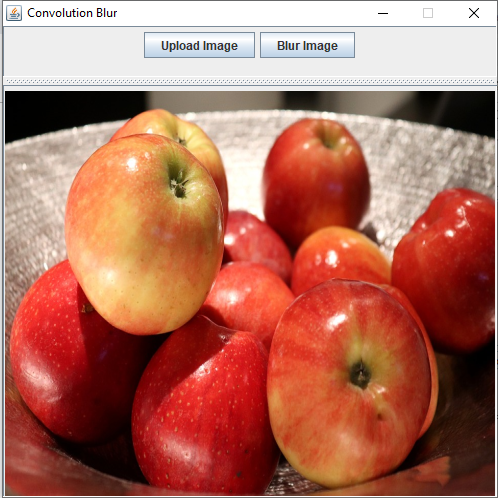

The convolution blur application can be downloaded from here: https://drive.google.com/file/d/1POWDsc9hPFBvD-LqFPHaDy8QrFIVvqeP/view?usp=sharing

This app uses a 5x5 kernel to blur the image. The image you upload will be resized to fit the window. The blurring process will take some time, so please be patient when using the app. This section will walk through certain areas of the code relevant to the direct application of the convolution.

public static BufferedImage convolve(BufferedImage image, int kernelDimension) { BufferedImage newImage = image; int width = image.getWidth(); int height = image.getHeight();

A separate function is created to convolve the image that takes in the input image and a dimension for the kernel matrix.

public static BufferedImage convolve(BufferedImage image, int kernelDimension) { BufferedImage newImage = image; int width = image.getWidth(); int height = image.getHeight(); for (int i = startX; i < width; i++) { for (int j = startY; j < height; j++) { int indexX1 = i - kernelDimension/2; int indexY1 = j - kernelDimension/2; if (indexX1 < 0) { continue; } if (indexY1 < 0) { continue; } if (indexX1 + kernelDimension >= width) { continue; } if (indexY1 + kernelDimension >= height) { continue; } int newPixel = convolvePixel(image.getSubimage(indexX1, indexY1, w, h), kernelDimension); newImage.setRGB(i, j, newPixel); } } return newImage; }

The bufferedimage is a 2-dimensional matrix of pixel values. Two for-loops are used to loop through every pixel in the image. If a pixel is too close to the edges of the image, the kernel matrix cannot be applied. The function simply skips pixels whose kernel matrix would extend outside the image. Every valid pixel is passed into a separate function that performs the actual convolution and returns a new blurred pixel.

public static int convolvePixel (BufferedImage subImage, int kernelDimension) { float kernel = 1.0f / Math.pow(kernelDimension, 2); int newPixel = 0; float convSum = 0; int subWidth = subImage.getWidth(); int subHeight = subImage.getHeight(); int newRed = 0; int newGreen = 0; int newBlue = 0; for (int i = 0; i < subWidth; i++) { for (int j = 0; j < subHeight; j++) { int p = subImage.getRGB(i,j); int Red = (p>>16) & 0xff; int Green = (p>>8) & 0xff; int Blue = p & 0xff; Red *= kernel; Green *= kernel; Blue *= kernel; newRed += Red; newBlue += Blue; newGreen += Green; } }

The convolvePixel function takes in a subset of the image that will be used for the convolution, as well as the kernel dimension. Typically, a separate 2D array would be created to represent the kernel, but since every value of the matrix is the same, we can have just one variable represent it. A color image stores pixels in a 4-byte integer, with each byte representing either the alpha, red, green, and blue hues. The alpha value, which represents the opacity of a pixel, will simply be set to 255, its maximum value. The red, green, and blue values are extracted through bit-shifting and convoluted separately.

if (newRed > 255) { newRed = 255; } else if (newRed < 0) { newRed = 0; } if (newBlue > 255) { newBlue = 255; } else if (newBlue < 0) { newBlue = 0; } if (newGreen > 255) { newGreen = 255; } else if (newGreen < 0) { newGreen = 0; } newPixel = 0x000000FF<<24 | newRed<<16 | newGreen<<8 | newBlue; return newPixel; }

All RGB values are clamped between 0-255 since those are the minimum and maximum values to represent the colors. Bitwise operations are used to form the new blurred pixel.